Update, June 25, 2024: This blog post series is now also available as a book called Fundamentals of DevOps and Software Delivery: A hands-on guide to deploying and managing production software, published by O’Reilly Media!

This is Part 1 of the Fundamentals of DevOps and Software Delivery series. In the introduction to the blog post series, you heard about how DevOps consists of dozens of different concepts, but it almost always starts with just one question:

I wrote an app. Now what?

You and your team have spent months putting together an app. You picked a programming language and a framework, you designed the UI, you implemented the backend, and finally, things seem to be working just the way you want. It’s time to expose the app to real users.

How, exactly, do you do that?

There are so many questions to figure out here. Should you use AWS or Azure or Google Cloud? (And what about Heroku, Vercel, and Netlify?) Do you need one server or multiple servers? (And what the hell is serverless?) Do you need to use Docker? (And what the hell is Kubernetes?) What is a VPC and why do you need one? (And what about VPN and SSH?) How do you get a custom domain name working? (And what’s with these scary TLS errors?) What’s the right way to set up your database? (And are the backups even working?) Why did that app crash? (And how the hell do you debug it?) What do all these errors mean? Why does nothing seem to be working? Why is this so hard???

OK, easy now. Take a deep breath. If you’re new to software delivery (you’ve worked as an app developer your whole career, or you’re just starting out in operations), it can be overwhelming, and you can get stuck in analysis paralysis right out of the gate. This blog post series is here to help. I will walk you through each of these questions, and many others you didn’t even think to ask, and help you figure out the answers, step-by-step.

The first part of the blog post is a short primer on DevOps. Don’t worry, this isn’t another high-level document about culture and values: that stuff matters, but you’ve probably read it a million times on Wikipedia already. Instead, what you’ll find here are the startling real-world numbers on why DevOps matters and a step-by-step walkthrough of the concrete architectures and software delivery processes used in modern DevOps, and how they evolve through the various stages of a company.

The second part of the blog post will have you diving into these architectures and processes, starting with the very first step: deploying an app. There are quite a few different options for deploying apps, so this post will walk you through the most common ones. Along the way, you’ll work through examples where you deploy the same app multiple different ways: on your own computer, on fly.io (a popular Platform as a Service provider), and on AWS (a popular Infrastructure as a Service provider). This will allow you to see how the different options compare across a variety of dimensions (e.g., ease-of-use, security, control, debugging, etc.), so that you can pick the right tool for your use cases.

Let’s get started!

A Primer on DevOps

The vast majority of developers have never had the opportunity to see what world-class software delivery looks like first hand. If you’re one of them, you’ll be astonished by the gap between companies with world-class software delivery processes and everyone else. It’s not a 1.1x or 1.5x improvement: it’s 10x or 100x—or more.

Table 2 shows the four key DevOps Research and Assessment (DORA) metrics, which are a quick way to assess the performance of a software development team, and the difference between elite performers and low performers at these metrics, based on data from the 2023 State of DevOps Report:

| Metric | Description | Elite vs low performers[1] |
|---|---|---|
| Deployment frequency | How often you deploy to production | ~10-200x more often |
| Lead time | How long it takes a change to go from committed to deployed | ~10-200x faster |
| Change failure rate | How often deployments cause failures that need immediate remediation | ~13x lower |
| Recovery time | How long it takes to recover from a failed deployment | ~700-4000x faster |

These are staggering differences. To put that into perspective, we’re talking the difference between:

- Deploying once per month vs many times per day.
- Deployment processes that take 36 hours vs 5 minutes.
- Two out of three deployments causing problems vs one out of twenty.
- Outages that last 24 hours vs 2 minutes.
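To make the four DORA metrics concrete, here is a minimal sketch of how you might compute them from a log of deployments. The record format, field names, and sample values are all hypothetical (they are not from the State of DevOps Report); the point is only to show the arithmetic behind each metric.

```javascript
// Hypothetical deployment records for one team over a 7-day window.
// All field names and values are made up for illustration.
const deployments = [
  { committedAt: '2024-01-01T09:00:00Z', deployedAt: '2024-01-01T10:00:00Z', failed: false },
  { committedAt: '2024-01-02T09:00:00Z', deployedAt: '2024-01-02T12:00:00Z', failed: true,
    recoveredAt: '2024-01-02T12:30:00Z' },
  { committedAt: '2024-01-03T09:00:00Z', deployedAt: '2024-01-03T11:00:00Z', failed: false },
  { committedAt: '2024-01-05T09:00:00Z', deployedAt: '2024-01-05T11:00:00Z', failed: false },
];

const HOUR_MS = 60 * 60 * 1000;
const hoursBetween = (start, end) => (new Date(end) - new Date(start)) / HOUR_MS;
const average = (values) => values.reduce((sum, v) => sum + v, 0) / values.length;

function doraMetrics(records, windowDays) {
  const failures = records.filter((r) => r.failed);
  return {
    // Deployment frequency: how often you deploy to production
    deploymentsPerDay: records.length / windowDays,
    // Lead time: how long a change takes to go from committed to deployed
    avgLeadTimeHours: average(records.map((r) => hoursBetween(r.committedAt, r.deployedAt))),
    // Change failure rate: fraction of deployments that needed remediation
    changeFailureRate: failures.length / records.length,
    // Recovery time: how long it takes to recover from a failed deployment
    avgRecoveryTimeHours: failures.length === 0
      ? 0
      : average(failures.map((r) => hoursBetween(r.deployedAt, r.recoveredAt))),
  };
}

console.log(doraMetrics(deployments, 7));
```

Even a rough calculation like this, run against your own deployment history, gives you a baseline to compare against the elite and low performer numbers.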

It’s almost a meme that developers who leave companies with world-class software delivery processes, such as Google, Facebook, or Amazon, complain bitterly about how much they miss the infrastructure and tooling. That’s because they are used to a world where:

- They can deploy any time they want, and it’s common to deploy thousands of times per day.
- Deployments can happen in minutes, and they are 100% automated.
- Problems can be detected in seconds, often before there is any user-visible impact.
- Outages can be resolved in minutes, sometimes automatically, without having to page anyone.

What do the equivalent numbers look like at your organization?

If you’re not even in the ballpark, don’t fret. The first thing you need to know is that it’s possible to achieve these results. In fact, there are many ways to achieve these sorts of results, and each of those world-class companies does it a bit differently. That said, they also have a lot in common, and the DevOps movement is an attempt to capture some of these best practices.

Where DevOps Came From

In the not-so-distant past, if you wanted to build a software company, you also needed to manage a lot of hardware. You would set up cabinets and racks, load them up with servers, hook up wiring, install cooling, build redundant power systems, and so on. It made sense to have one team, typically called Developers (“Devs”), dedicated to writing the software, and a separate team, typically called Operations (“Ops”), dedicated to managing this hardware.

The typical Dev team would build an application and "toss it over the wall" to the Ops team. It was then up to Ops to figure out the rest of software delivery: that is, to figure out how to deploy, run, and maintain that application. Most of this was done manually. In part, that was unavoidable, because much of the work had to do with physically hooking up hardware (e.g., racking servers, hooking up network cables). But even the work Ops did in software, such as installing the application and its dependencies, was often done by manually executing commands on a server.

This works well for a while, but as the company grows, you eventually run into problems: releases are manual, slow, and error-prone; this leads to frequent outages and downtime; the Ops team, tired from their pagers going off at 3 a.m. after every release, reduce the release cadence even further; but now each release is even bigger, and that only leads to more problems; teams begin blaming one another; silos form; the company grinds to a halt.

Nowadays, a profound shift is taking place. Instead of managing their own datacenters, many companies are moving to the cloud, taking advantage of services such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Instead of investing heavily in hardware, many Ops teams are spending all their time working on software, using tools such as OpenTofu, Ansible, Docker, and Kubernetes. Instead of racking servers and plugging in network cables, many sysadmins are writing code.

As a result, both Dev and Ops teams spend most of their time working on software, and the distinction between the two teams is blurring. It might still make sense to have a separate Dev team responsible for the application code and an Ops team responsible for the operational code, but it’s clear that Dev and Ops need to work more closely together. This is where the DevOps movement comes from.

DevOps isn’t the name of a team or a job title or a particular technology (though you’ll see it used for all three). Instead, it’s a set of processes, ideas, and techniques. Everyone has a slightly different definition of DevOps, but for this blog post series, I’m going to define DevOps as a methodology with the following goal:

The goal of DevOps is to make software delivery vastly more efficient.

The move to the cloud and the DevOps mindset represent a profound shift across all aspects of software delivery, as shown in Table 3:

| | Before | After |
|---|---|---|
| Teams | Devs write code, "toss it over the wall" to Ops | Devs & Ops work together on cross-functional teams |
| Servers | Dedicated physical servers | Elastic virtual servers |
| Connectivity | Static IPs | Dynamic IPs, service discovery |
| Security | Physical, strong perimeter, high trust interior | Virtual, end-to-end, zero trust |
| Infrastructure provisioning | Manual | Infrastructure as Code (IaC) tools |
| Server configuration | Manual | Configuration management tools |
| Testing | Manual | Automated testing |
| Deployments | Manual | Automated |
| Change process | Change request tickets | Self-service |
| Deployment cadence | Weeks or months | Many times per day |
| Change cadence | Weeks or months | Minutes |

You heard in the Preface how LinkedIn’s DevOps transformation saved the company, but they are not the only ones. Nordstrom found that after applying DevOps practices to its organization, it was able to increase the number of features it delivered per month by 100%, reduce defects by 50%, reduce lead times (the time from coming up with an idea to running code in production) by 60%, and reduce the number of production incidents by 60% to 90%. After HP’s LaserJet Firmware division began using DevOps practices, the amount of time its developers spent on developing new features went from 5% to 40%, and overall development costs were reduced by 40%. Etsy used DevOps practices to go from stressful, infrequent deployments that caused numerous outages to deploying 25 to 50 times per day, with far fewer outages.[2]

So how does DevOps produce these sorts of results? As mentioned in the Preface, DevOps isn’t any one thing, but rather, it consists of dozens of different tools and techniques, all of which have to be adapted to your company’s particular needs. So if you want to understand DevOps at a high level, you need to understand how the architecture and processes evolve at a typical company.

The Evolution of DevOps

While writing my first book, Hello, Startup, I interviewed early employees from some of the most successful companies of the last 20 years, including Google, Facebook, LinkedIn, Twitter, GitHub, Stripe, Instagram, Pinterest, and many others. One thing that struck me is that the architecture and software delivery processes at just about every software company evolve along very similar lines. There are of course individual differences here and there, but there are far more similarities than differences, and the broad shape of the evolution tends to repeat again and again.

In this section, I’m going to share this evolutionary process. I’ve broken it down into three high-level stages, each of which consists of three steps. This is, of course, a vast simplification: in reality, this is more of a continuum than discrete steps, and the steps don’t always happen in the exact order listed here. Nevertheless, I hope this is a helpful mental model of how DevOps typically evolves within a company.

Lossy compression

Think of these stages as a compressed guide to the typical way architecture and software delivery processes evolve in a company, but be aware that, in the effort to compress this information, some of the variation inevitably gets lost.

If you’re new to DevOps and software delivery, you may be unfamiliar with some of the terms used here. Don’t panic. The idea here is to start with a top-down overview—a bit like a high level map—to help you understand what the various ingredients are and how they fit together. As you go through this blog post series, you’ll zoom in on each of these ingredients, study it in detail, and get to try most of them out with real examples. You can then zoom back out and revisit this high-level map at any time to see the big picture, and get your bearings again.

Let’s get started with stage 1.

Stage 1

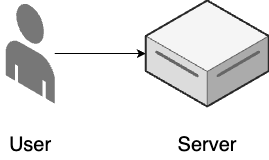

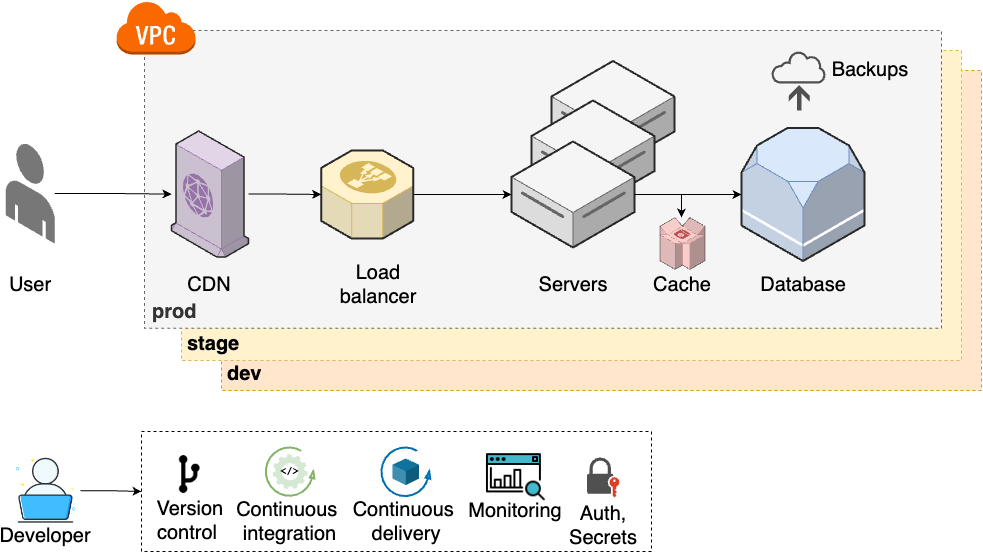

As shown in Figure 1, stage 1 is where most software projects start, including new startups, new initiatives at existing companies, and most side projects:

- Single server: All of your application code runs on a single server (you’ll see an example of this later in this blog post).
- ClickOps: You manage all of your infrastructure and deployments manually.

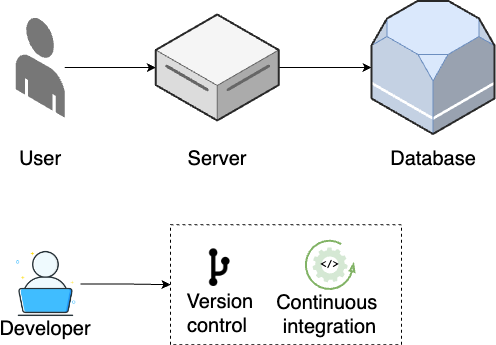

As traffic grows, you move on to step 2, shown in Figure 2:

- Standalone database: As your database increasingly becomes the bottleneck, you break it out onto a separate server (Part 9 [coming soon]).
- Version control: As your team grows, you use a version control system to collaborate on your code and track all changes (Part 4).
- Continuous integration: To reduce bugs and outages, you set up automated tests (Part 4) and continuous integration (Part 5).

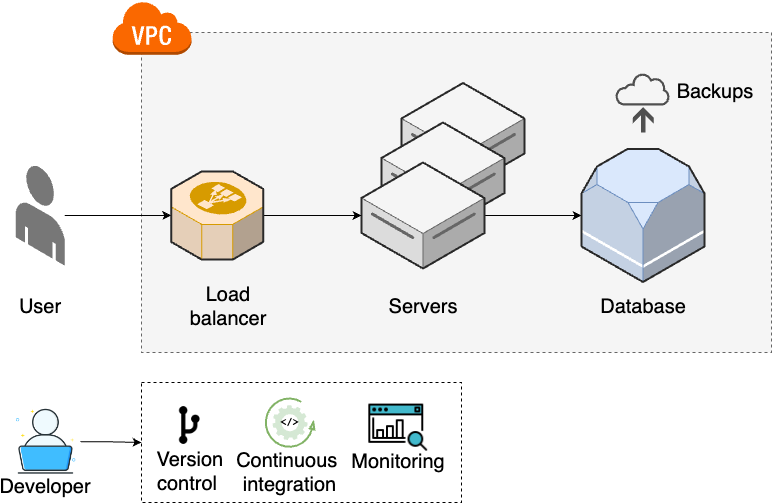

As traffic continues to grow, you move on to step 3, shown in Figure 3:

- Multiple servers: As traffic increases further, a single server is no longer enough, so you run your app across multiple servers (Part 3).
- Load balancing: You distribute traffic across the servers using a load balancer (Part 3).
- Networking: To protect your servers, you put them into private networks (Part 7 [coming soon]).
- Data management: You set up scheduled backups and schema migrations for your data stores (Part 9 [coming soon]).
- Monitoring: To get better visibility into your systems, you set up basic monitoring (Part 10 [coming soon]).
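To give a feel for what the load balancing step involves, here is a minimal sketch of round-robin selection, one of the simplest ways to distribute traffic across multiple servers. The server addresses are hypothetical, and real load balancers add health checks, connection handling, and much more, so treat this as an illustration of the idea rather than something to run in production.

```javascript
// Returns a function that cycles through the given servers in order.
function createRoundRobin(servers) {
  if (servers.length === 0) {
    throw new Error('At least one server is required');
  }
  let next = 0;
  return function pick() {
    const server = servers[next];
    next = (next + 1) % servers.length; // wrap around after the last server
    return server;
  };
}

// Hypothetical private addresses of three app servers
const pickServer = createRoundRobin(['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.3:8080']);

for (let i = 0; i < 4; i++) {
  console.log(`request ${i} -> ${pickServer()}`);
}
```

Each incoming request goes to the next server in the list, so with three servers, the fourth request wraps back around to the first one.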

Most software projects never need to make it past stage 1. If you’re one of them, don’t fret: this is actually a good thing. Stage 1 is simple. It’s fast to learn and easy to set up. It’s fun to work with. If you’re forced into the subsequent stages, it’s because you’re facing new problems that require more complex architectures and processes to solve, and this additional complexity has a considerable cost. If you aren’t facing those problems, then you can, and should, avoid that cost.

That said, if your company or user base keeps growing, you may have to move on to stage 2.

Stage 2

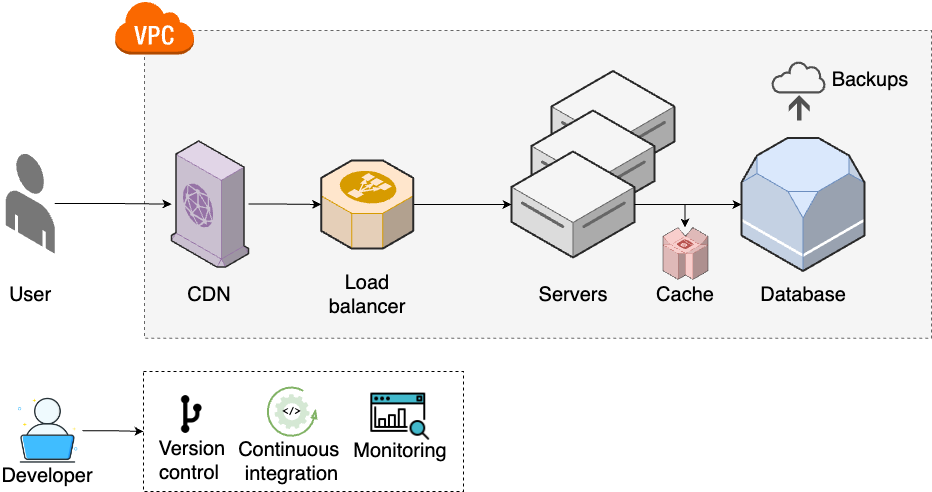

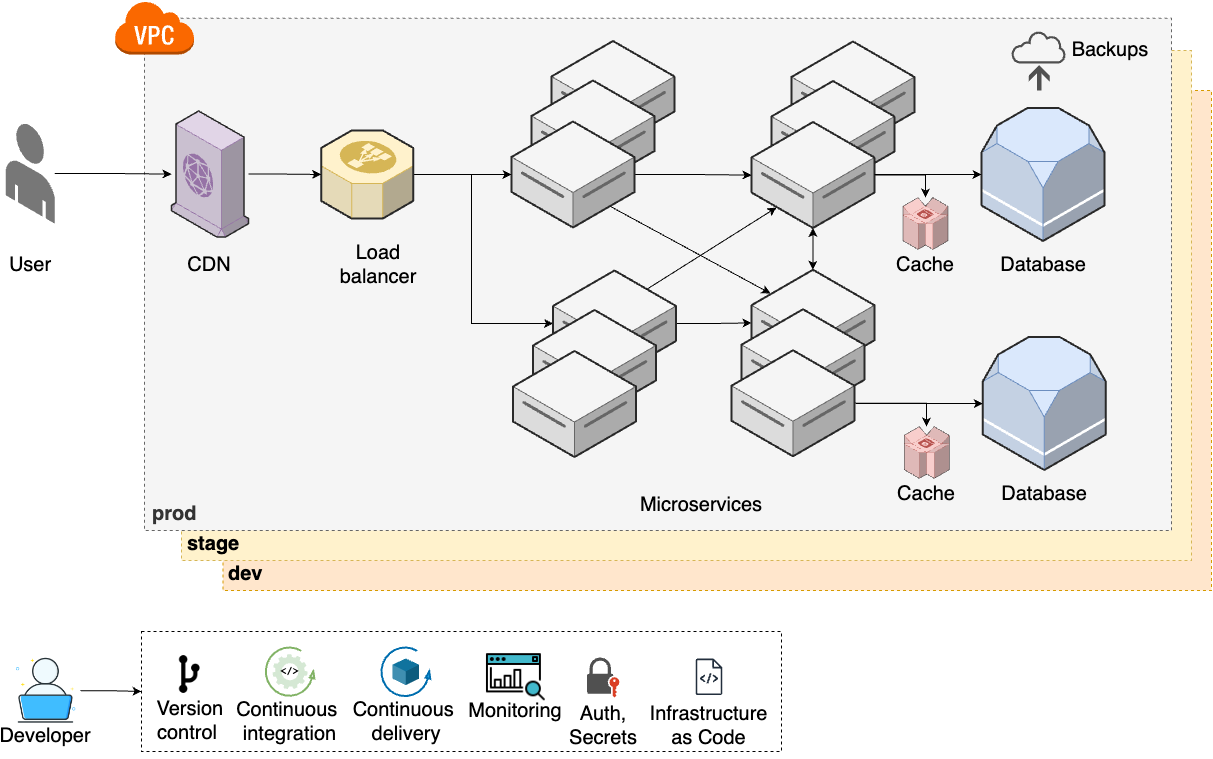

The second stage of evolution typically applies to larger, more established companies and software that has larger user bases and more complexities. It starts with step 4, shown in Figure 4:

- Caching for data stores: Your database continues to be a bottleneck, so you add read replicas and caches (Part 9 [coming soon]).
- Caching for static content: As traffic continues to grow, you add a content distribution network (CDN) to cache content that doesn’t change often (Part 7 [coming soon]).
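The caching idea in step 4 can be sketched in a few lines. The example below assumes a hypothetical in-memory "database" and a simple time-to-live (TTL) policy; the function names are made up for illustration, and real systems typically use a dedicated cache rather than a `Map` inside the app.

```javascript
// Stand-in for a slow database; counts how many reads actually reach it.
const fakeDatabase = new Map([['user:1', { name: 'Alice' }]]);
let databaseReads = 0;

function readFromDatabase(key) {
  databaseReads++;
  return fakeDatabase.get(key);
}

// A read-through cache: serve from memory when the entry is fresh,
// otherwise fall back to the database and remember the result.
// The `now` parameter lets you substitute a fake clock.
function createCache(ttlMs, now = Date.now) {
  const entries = new Map();
  return {
    get(key) {
      const entry = entries.get(key);
      if (entry && entry.expiresAt > now()) {
        return entry.value; // cache hit
      }
      const value = readFromDatabase(key); // cache miss or expired entry
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
  };
}

const cache = createCache(60000);
cache.get('user:1'); // miss: goes to the database
cache.get('user:1'); // hit: served from memory
console.log(`database reads: ${databaseReads}`);
```

The trade-off is staleness: until an entry expires, readers may see old data, which is why caching fits best for content that doesn’t change often.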

At this point, your team size is often the real problem, so you have to move onto step 5, shown in Figure 5:

- Multiple environments: To help teams do better testing, you set up multiple environments (e.g., dev, stage, prod), each of which has a full copy of your infrastructure (Part 6).
- Continuous delivery: To make deployments faster and more reliable, you set up continuous delivery (Part 5).
- Authentication and secrets: To keep all the new environments secure, you lock down authentication, authorization, and secrets management (Part 8 [coming soon]).

As your teams keep growing, to be able to keep moving quickly, you will need to update your architecture and processes to step 6, as shown in Figure 6:

- Microservices: To allow teams to work more independently, you break up your monolith into multiple microservices, usually each with its own data stores and caches (Part 6).
- Infrastructure as code: It’s hard to maintain so many environments manually, so you start to manage your infrastructure as code.

Stage 2 represents a significant step up in terms of complexity: your architecture has more moving parts, your processes are more complicated, and you most likely need a dedicated infrastructure team to manage all of this. For a small percentage of companies, even this isn’t enough, and you are forced to move on to stage 3.

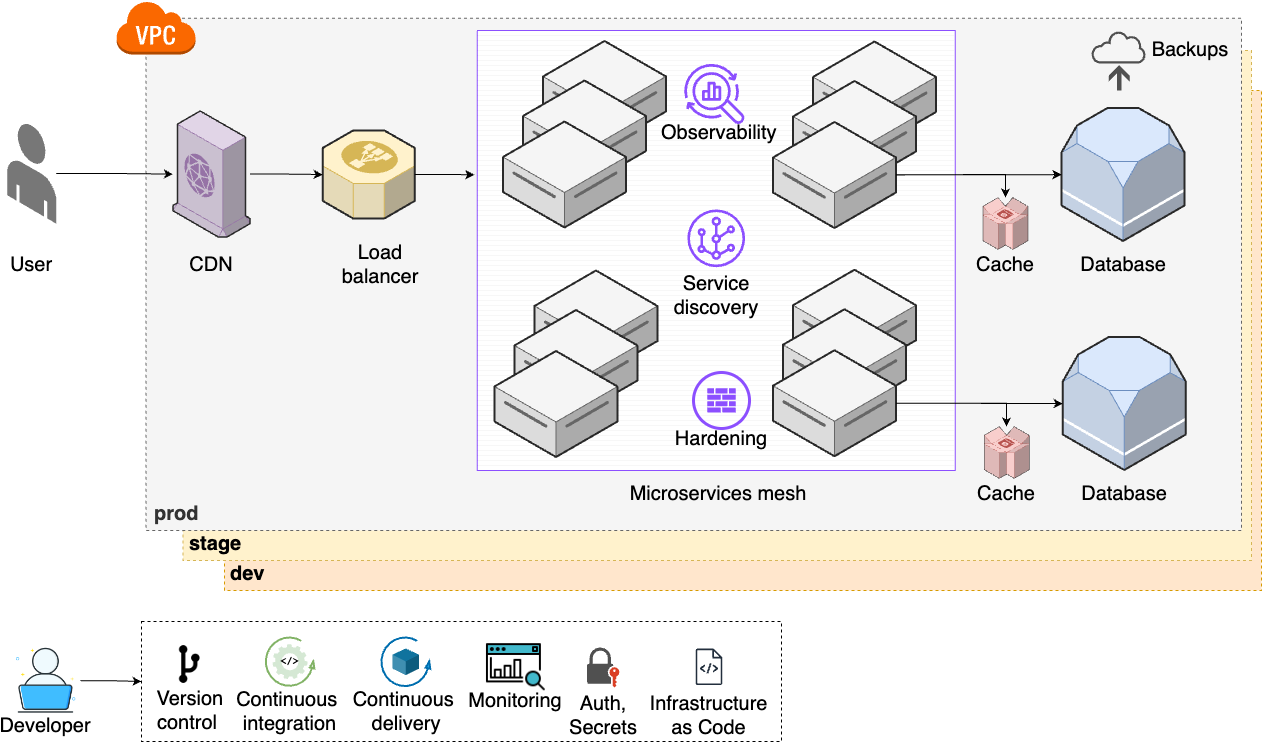

Stage 3

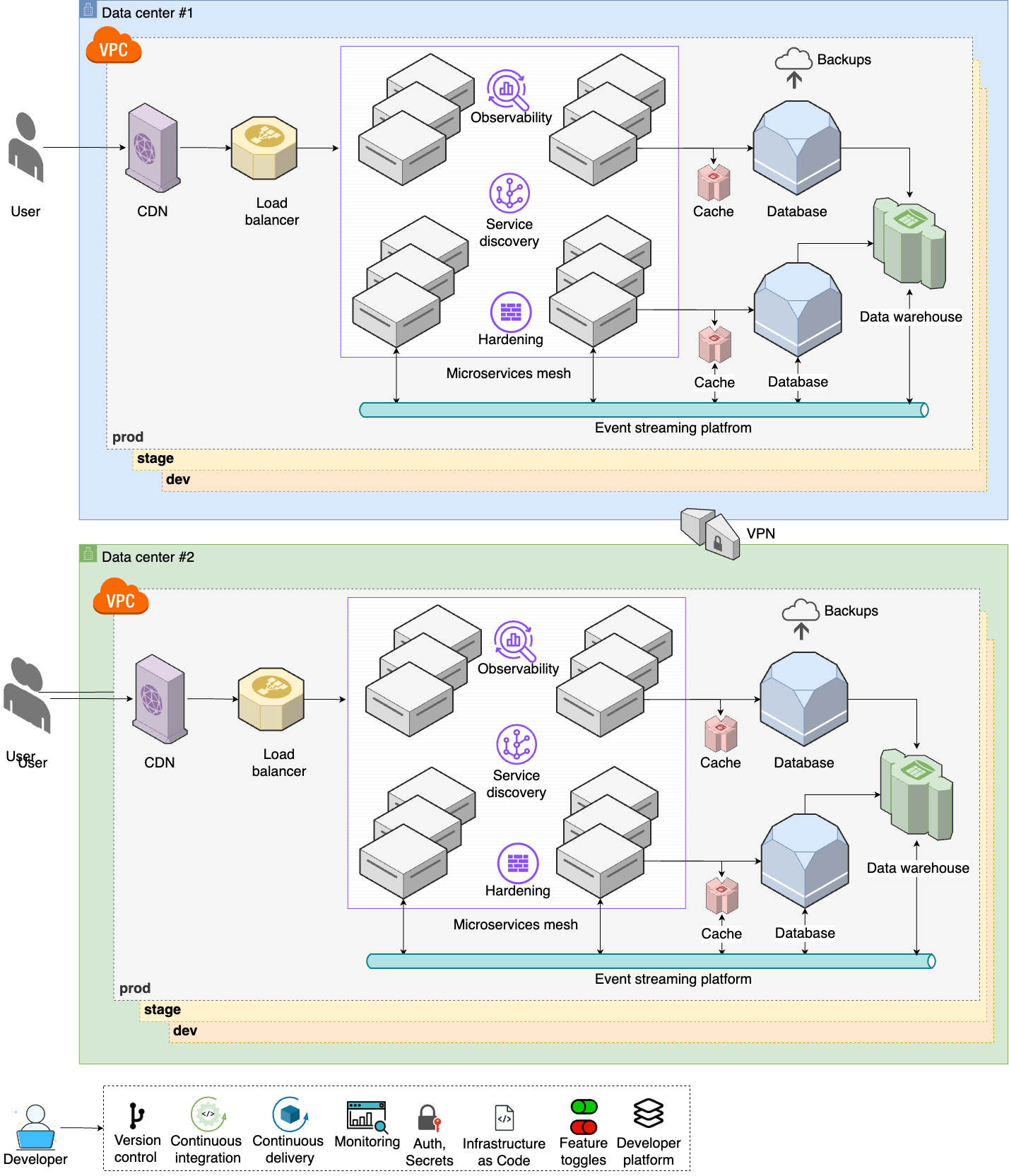

The third stage of evolution only applies to large enterprises with massive user bases. It starts with step 7, shown in Figure 7:

- Observability: To get even more visibility into your systems, you set up tracing and observability tools (Part 10 [coming soon]).
- Service discovery: As the number of microservices increases, you set up a service discovery system to help them dynamically and automatically communicate with each other (Part 7 [coming soon]).
- Hardening: To meet various compliance standards (e.g., NIST, CIS, PCI), you work on server and network hardening (Part 7 [coming soon], Part 8 [coming soon]).
- Microservice mesh: To help manage a large number of microservices, you start using service mesh tools, which give you a unified solution for the items above (observability, service discovery, hardening), as well as load balancing, traffic control, and error handling (Part 7 [coming soon]).
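As a rough illustration of what a service discovery system does, the sketch below keeps an in-memory registry where service instances register themselves and clients look up the instances that have checked in recently. The service name, addresses, and the `createRegistry` API are hypothetical; real service discovery tools are distributed systems with far more to them.

```javascript
// A toy registry: service name -> (address -> time of last registration).
// Instances that have not re-registered within ttlMs are treated as gone.
// The `now` parameter lets you substitute a fake clock.
function createRegistry(ttlMs, now = Date.now) {
  const instances = new Map();
  return {
    // Called by each service instance on startup and then periodically
    register(service, address) {
      if (!instances.has(service)) {
        instances.set(service, new Map());
      }
      instances.get(service).set(address, now());
    },
    // Called by clients to find live instances of a service
    lookup(service) {
      const known = instances.get(service) || new Map();
      return [...known]
        .filter(([, lastSeen]) => now() - lastSeen <= ttlMs)
        .map(([address]) => address);
    },
  };
}

const registry = createRegistry(30000);
registry.register('payments', '10.0.1.5:8080'); // hypothetical instances
registry.register('payments', '10.0.1.6:8080');
console.log(registry.lookup('payments'));
```

Because clients ask the registry instead of hard-coding addresses, instances can come and go (for example, as you scale up or replace servers) without every caller needing a config change.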

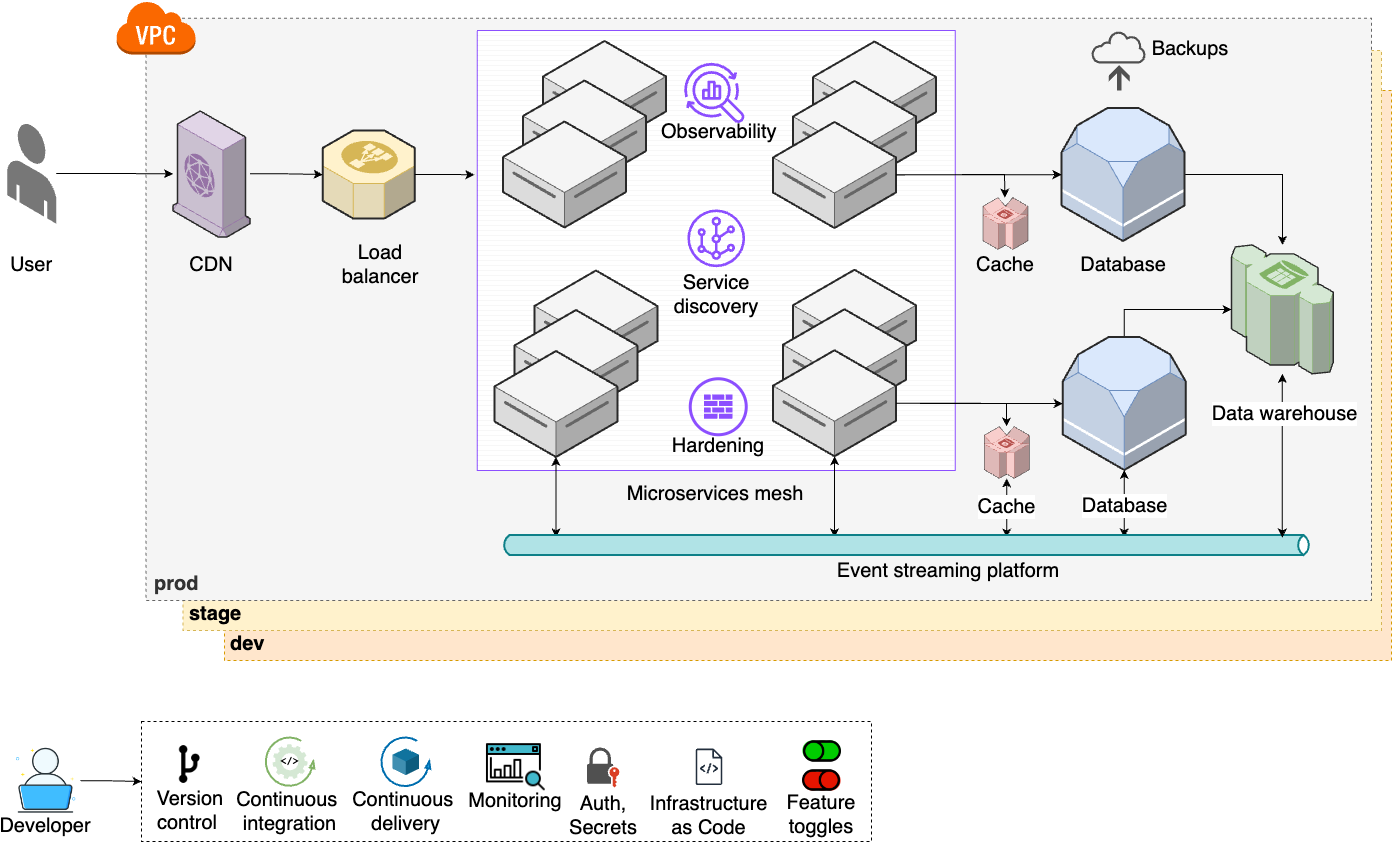

Large companies produce a lot of data, and the need to analyze and leverage this data leads to step 8, shown in Figure 8:

- Analytics tools: To be able to process and analyze your company’s data, you set up data warehouses, data lakes, machine learning platforms, etc. (Part 9 [coming soon]).
- Event bus: With even more microservice communication and more data to move around, you set up an event bus and move to an event-driven architecture (Part 6, Part 9 [coming soon]).
- Feature toggles: To make deployments even faster and more reliable, you set up advanced deployment strategies such as feature toggles and canary deployments (Part 5).
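To show what a feature toggle might look like in code, here is a minimal sketch of a percentage-based rollout. The flag name, the flag object’s shape, and the hashing scheme are assumptions chosen for illustration; feature toggle services each have their own APIs.

```javascript
const { createHash } = require('crypto');

// Map a (flag, user) pair to a stable bucket from 0 to 99, so the same
// user always gets the same answer for the same flag.
function bucketFor(flagName, userId) {
  const hash = createHash('sha256').update(`${flagName}:${userId}`).digest();
  return hash.readUInt32BE(0) % 100;
}

function isEnabled(flag, userId) {
  if (!flag.enabled) {
    return false; // kill switch: turn the feature off for everyone
  }
  return bucketFor(flag.name, userId) < flag.rolloutPercent;
}

// Hypothetical flag: roll the new code path out to roughly 10% of users
const newCheckout = { name: 'new-checkout', enabled: true, rolloutPercent: 10 };

const users = Array.from({ length: 1000 }, (_, i) => `user-${i}`);
const enabledCount = users.filter((user) => isEnabled(newCheckout, user)).length;
console.log(`${enabledCount} of ${users.length} users see the new code path`);
```

The design choice here is to hash rather than pick users at random: a given user stays in or out of the rollout across requests, and raising `rolloutPercent` only adds users rather than reshuffling them.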

Finally, as your user base and employee base keep growing, you move on to step 9, shown in Figure 9:

- Multiple data centers: To handle a global user base, you set up multiple data centers around the world (Part 6).
- Advanced networking: You set up advanced networking to connect all your data centers together (Part 7 [coming soon]).
- Internal developer platform: To help boost developer productivity, while ensuring all the new accounts are secure, you set up an internal developer platform, account baselines, and an account factory (Part 11 [coming soon]).

The companies in stage 3 face the toughest problems and have to deal with the most complexity: global deployments, thousands of developers, millions of customers. Even the architecture you see in stage 3 is still a simplification compared to what the top 0.1% of companies face, but if that’s where you’re at, you’ll need more than this introductory blog post series!

So now that you’ve seen the various stages and steps, one thing should be abundantly clear: you should expect change. Your architecture and processes will change as your company grows. Be aware of it, plan for it, embrace it. In fact, one of the hallmarks of great software is that it is adaptable: a key characteristic of great code, great architecture, and great processes is that they are easy to change. And the very first change may be adopting DevOps practices in the first place, which is the focus of the next section.

Adopting DevOps Practices

As you read through the three stages, the idea is to match your company to one of the stages, and to pursue the architecture and processes in that stage. What you don’t want to do is to immediately jump to the end, and use the architecture and processes of the largest and most elite companies. Let’s be honest here: your company isn’t Google (unless it is, in which case, congrats, you are an exceptionally rare outlier); you don’t have the same scale, you don’t have the same problems to solve, and therefore, the same solutions won’t be a good fit. In fact, adopting the solutions for a different stage of company may be actively harmful. Every time I see a 3-person startup running an architecture with 12 microservices, a service mesh, and an event bus, I just shake my head: you’re paying a massive cost to solve problems you don’t have.

Key takeaway #1

Adopt the architecture and software delivery processes that are appropriate for your stage of company.

Even if you are a massive company, you still shouldn’t try to adopt every DevOps practice all at once. One of the most important lessons I’ve learned in my career is that most large software projects fail. Whereas roughly 3 out of 4 small IT projects (less than $1 million) are completed successfully, only 1 out of 10 large projects (greater than $10 million) are completed on time and on budget, and more than one-third of large projects are never completed at all.[3]

This is why I get worried when I see the CEO or CTO of a large company give marching orders that everything must be migrated to the cloud, the old datacenters must be shut down, and that everyone will "do DevOps" (whatever that means), all within six months. I’m not exaggerating when I say that I’ve seen this pattern several dozen times, and without exception, every single one of these initiatives has failed. Inevitably, two to three years later, every one of these companies is still working on the migration, the old datacenter is still running, and no one can tell whether they are really doing DevOps or not.

If you want to successfully adopt DevOps, or if you want to succeed at any other type of migration project, the only sane way to do it is incrementally. The key to incrementalism is not just splitting up the work into a series of small steps but splitting up the work in such a way that every step brings its own value—even if the later steps never happen.

To understand why this is so important, consider the opposite, false incrementalism.[4] Suppose that you do a huge migration project, broken up into the following steps:

1. Redesign the user interface.
2. Rewrite the backend.
3. Migrate the data.

You complete the first step, but you can’t launch it, because it relies on the new backend in the second step. So, next, you rewrite the backend, but you can’t launch that either, until you migrate the data in the third step. It’s only when you complete all three steps that you get any value from this work. This is false incrementalism: you’ve split the work into smaller steps, but you only get value when all the steps are completed, which is a huge risk. Why? Because conditions change all the time, and there’s always a chance the project gets paused or cancelled or modified part way through, before you’ve completed all the steps, and if that happens, you get the worst possible outcome: you’ve had to invest a bunch of time and money, but you will get nothing in return.

What you want instead is for each part of the project to deliver some value so that even if the entire project doesn’t finish, no matter what step you got to, it was still worth doing. The best way to accomplish this is to focus on solving one, small, concrete problem at a time. For example, instead of trying to do a "big bang" migration to the cloud, try to identify one, small, specific app or team that is struggling, and work to migrate just them. Or instead of trying to do a "big bang" move to "DevOps," try to identify a single, small, concrete problem (e.g., outages during deployment) and put in place a solution for that specific problem (e.g., automate the deployment steps).

If you can get a quick win by fixing one real, concrete problem right away, and making one team successful, you’ll begin to build momentum. This will allow you to go for another quick win, and another one after that. And if you can keep repeating this process—delivering value early and often—you’ll be far more likely to succeed at the larger migration effort. But even if the larger migration doesn’t work out, at least one team is more successful now and one deployment process works better, so it’s still worth the investment.

Key takeaway #2

Adopt DevOps incrementally, as a series of small steps, where each step is valuable by itself.

Now that you have a basic background on DevOps, and you’ve seen how DevOps evolves, let’s head back to the very first step, and learn how to deploy a single app.

An Introduction to Deploying Apps

As you saw in the previous section, the first step is to run your app on a single server. How do you run an app? What’s a server? What type of server should you use? These are the questions we’ll address in this section.

The first place you should be able to deploy your app is locally, on your own computer. This is typically how you’d build the app in the first place, writing and running your code locally until you have something working.

How you run an app locally depends on the technology. Throughout this blog post series, you’re going to be using a simple Node.js sample app, as described in the next section.

Example: Run the Sample App Locally

Example Code

As a reminder, you can find all the code examples in the blog post series’ sample code repo on GitHub.

Create a new folder on your computer, perhaps called something like fundamentals-of-devops, which you can use to store code for various examples you’ll be running throughout the blog post series. You can run the following commands in a terminal to create the folder and go into it:

```
$ mkdir fundamentals-of-devops
$ cd fundamentals-of-devops
```

In that folder, create a new subfolder for the part 1 examples, and a subfolder within that for the sample app:

```
$ mkdir -p ch1/sample-app
$ cd ch1/sample-app
```

The sample app you’ll be using is a minimal "Hello, World" Node.js app, written in JavaScript. You don’t need to understand much JavaScript to make sense of the app. In fact, one of the nice things about getting started with Node.js is that all the code for a simple web app fits in a single file that’s ~10 lines long. Within the sample-app folder, create a file called app.js, as shown in Example 1:

```javascript
const http = require('http');

const server = http.createServer((req, res) => {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('Hello, World!\n'); // (1)
});

const port = 8080; // (2)

server.listen(port, () => {
  console.log(`Listening on port ${port}`);
});
```

This is a "Hello, World" app that does the following:

1. Respond to all requests with a 200 status code and the text "Hello, World!"
2. Listen on port 8080 for requests.

To run it locally, you must first install Node.js. Once that’s done, you can start the app with node app.js:

```
$ node app.js
Listening on port 8080
```

You can then open http://localhost:8080 in your browser, and you should see:

Hello, World!

Congrats, you’re running the app locally!

That’s a great start, but if you want to expose your app to users, you’ll need to run it on a server, as discussed in the next section.

Deploying an App on a Server

By default, when you run an app on your computer, it is only available on localhost. This is a hostname that is configured on every computer to point back to the loopback network interface,[5] which means it bypasses any real network interface. In other words, when you’re running an app on your computer locally, it typically can only be accessed from your own computer, and not from the outside world. This is by design, and for the most part, a good thing, as the way you run apps on a personal computer for development and testing is not the way you should run them when you want to expose them to outsiders.[6]
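If you’re curious which network interfaces your own computer has, the following sketch uses Node.js’s built-in `os.networkInterfaces()` to separate loopback-style addresses (marked `internal`) from the rest. The `listAddresses` helper is a name made up for this example, and the output will differ from machine to machine.

```javascript
const os = require('os');

// Split this machine's addresses into loopback ("internal") addresses
// and addresses on real network interfaces.
function listAddresses() {
  const loopback = [];
  const external = [];
  for (const [name, addresses] of Object.entries(os.networkInterfaces())) {
    for (const { address, internal } of addresses) {
      (internal ? loopback : external).push(`${name}: ${address}`);
    }
  }
  return { loopback, external };
}

const { loopback, external } = listAddresses();
console.log('Loopback addresses (reachable only from this machine):', loopback);
console.log('Other addresses (potentially reachable from the network):', external);
```

Relatedly, Node.js’s `server.listen` accepts an optional host argument after the port. If you want to be explicit that the sample app should only accept connections over the loopback interface, one option is to pass `'127.0.0.1'` as that host, rather than relying on firewall or router settings to keep outsiders away.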

Key takeaway #3

You should never expose apps running on a personal computer to the outside world.

Instead, if you’re going to expose your app to the outside world, you should run it on a server. A server is a computer specifically designed for running apps and exposing those apps to the outside world. There are many differences between a server and a personal computer, including the following:

- Security: When you run your app on a server, you typically use a stripped down operating system (OS) that has nothing else installed on it that is not absolutely necessary for running the app. You also use a variety of server hardening techniques to protect that server, including OS permissions, firewalls, intrusion prevention tools, file integrity monitoring, sandboxing tools, and so on. On the other hand, your personal computer is likely not nearly as hardened, has all sorts of other apps installed (the more apps that you run, the more likely it is that one of them has a vulnerability somewhere that an attacker could exploit if you expose your computer on the public Internet), and also may have your personal data on it (e.g., documents, photos, videos, passwords) which you really wouldn’t want to leak to the world.
- Availability: You may shut off your personal computer at any time, or the battery might die, or the power might go out. Most servers are designed to be on all the time, in data centers with redundant power. Moreover, production apps are typically deployed across multiple servers, and sometimes across multiple data centers, in case one of them goes down.
- Performance: If you’re using your personal computer for personal tasks (e.g., writing code, browsing the Internet, playing games), that will take away system resources (CPU, memory, bandwidth, etc.) from your app, possibly causing performance issues for your users. Servers are typically dedicated solely to running the app installed on them.
- Collaboration: Most apps that you build are worked on by a team of developers and not just one person. Those other developers will want access to that app, either to update it or to debug an issue, but they typically don’t have any way to access your personal computer, nor should they. On the other hand, servers are usually configured in such a way that your entire team has a way to push updates to them, connect to the server to debug issues, and so on.

Despite all the drawbacks of running apps on personal computers, it’s astonishing just how many businesses have critical apps running on a single computer sitting under someone’s desk. This typically happens for one of three reasons:

1. Your company is resource constrained (e.g., a tiny startup), and you can’t afford to buy proper servers.
2. The person running the app doesn’t know any better.
3. The company’s software delivery process is so slow and cumbersome that the only way to get something running quickly is to sneak it onto an office computer.

As you’ll see later in this blog post, these days, there are far better options for dealing with reason (1). And hopefully, after reading this blog post series, reasons (2) and (3) will no longer apply, as you now know better, and will learn how to create a software delivery process that allows your team to quickly & easily run their apps the right way: on a server.

Broadly speaking, there are two ways to get access to servers:

- You can buy and set up your own servers ("on prem").
- You can rent servers from others ("the cloud").

We’ll discuss each of these in the next section.

Deploying On Prem Versus in the Cloud

The traditional way to run software is to buy your own servers and to set them up on-premises (on-prem for short), in a physical location you own. When you are just starting out, the location could be as simple as a closet in your office with a handful of servers crammed into it—barely one step up from using a personal computer. But as a company’s user base grows, so do the computing demands, and you eventually have to move to a dedicated data center, with all the requisite equipment (e.g., cabinets, racks, servers, hard drives, wiring, cooling, power, and so on) and staff (e.g., data center technicians, electricians, engineers, network administrators, security guards, and so on). So for decades, if you wanted to build a software company, you also had to invest quite a bit into hardware.

This all started to change in the early 2000s. At that time, Amazon was already a huge web retailer, and like all other software companies, they had built massive data centers to run their products. The company was growing quickly, but despite hiring many new software engineers, they found they weren’t building their software any faster. When they dug into the problem, they found a common complaint: for each new project, every team in Amazon was spending a huge amount of time not writing the software, but building out hardware (e.g., servers, databases, etc.) to run that software. The types of hardware the teams needed were often similar, so all these teams were spinning their wheels on the same type of "undifferentiated heavy lifting."

To solve this problem, Amazon started to build an internal platform that could abstract away and automate a lot of this undifferentiated heavy lifting, giving developers access to the hardware they needed through a software interface. At some point along the way, they realized that this platform could help not only their own internal dev teams, but dev teams from outside Amazon as well. The result was the launch of Amazon Web Services (AWS) in 2006, the first cloud computing platform (cloud for short), which allowed you to rent servers from Amazon using a software interface.[7]

This is a profound shift. Instead of having to invest lots of time and money up front to set up servers yourself, in a few clicks, you can rent servers from the cloud. You can be up and running in seconds instead of weeks or months, at the cost of a few cents (or even free) instead of thousands of dollars. These days, while AWS is still the dominant player in cloud computing, there are dozens of other companies that offer cloud services as well, and these services have expanded beyond simply renting servers: you can now rent higher level services, such as databases, file stores, machine learning systems, natural language processing, edge computing, and more.

So which should you use? Should you go with on-prem or make the jump to the cloud?

When to Go with the Cloud

If you’re starting something new, in the majority of cases, you should go with the cloud. Here are just a few of the advantages:

- Pay-as-you-go

With on-prem, you have to buy all the hardware (servers, racks, wiring, cooling, etc.) up front, which can be a huge expense. With the cloud, you only pay for what you use. That is typically extremely cheap or even free in the early days, when you have no users (most cloud providers have free tiers or free credits when you’re starting out), and your costs only grow as your business and usage grow.

- Maintenance and expertise

Deploying your own servers requires hiring experts in hardware, data centers, cooling, power, etc. Moreover, data centers need to be maintained constantly, and the hardware you buy needs to be replaced over time, either because it breaks or because it becomes obsolete. The cloud gives you all this expertise and maintenance out-of-the-box, at a scale most individual companies can’t come close to matching.

- Speed

With on-prem, every time you need new hardware, you have to find it, order it, pay for it, wait for it to ship, assemble it, rack it, test it, etc. This typically takes weeks or months, and the more you need to buy, the longer it takes. With the cloud, virtual servers launch in seconds or minutes, whether you need to launch one or thousands.

- Elasticity

With on-prem, you have to buy all the hardware up front, which means you have to plan long in advance. If you suddenly need an extra 5,000 CPUs, you have no easy way to scale up to meet that capacity; and if you do buy that hardware, but you only need those 5,000 CPUs for a short period of time, you still pay the full cost. With the cloud, you can scale up and down elastically, at will. If you suddenly need 5,000 CPUs, you can get that in minutes. And once you’re done with them, you can scale back to 0, and pay nothing more.

- Managed services

With on-prem, you buy servers, and everything else is up to you. With the cloud, you not only get access to servers, but also dozens of other powerful managed services, such as managed databases, load balancers, file stores, data analytics, networking, machine learning, and much more. Building these in-house would take years and cost lots of money, whereas with the cloud, you get access to them with the same pay-as-you-go model, often starting for free or at extremely low cost.

- Security

With on-prem, all security is up to you, and most companies don’t have the time or expertise to do it well. With the cloud, the providers can afford to invest huge amounts of time, money, and expertise in security. AWS, for example, has gone through a huge range of security audits and penetration tests, complies with a variety of standards (PCI, HIPAA, CIS, etc.), has passed security requirements for even the most highly regulated industries (government, military, financial, health care), is recognized as a leader in data center physical security, and so on. There is a persistent belief that on-prem is more secure than the cloud, because only you have access to it, and you have all the incentives to keep it secure. In practice, unless you work at a company that’s at the scale of one of the major cloud providers (Amazon, Microsoft, Google), you simply won’t have the resources to secure your data centers the way those companies can.

- Global reach

With on-prem, you have to have a team physically located at your data center to build and maintain it. And if you need to span the globe and have multiple data centers, then you need to build up and maintain a physical presence in multiple locations around the world. With the cloud, your team can be anywhere in the world (as long as you have an Internet connection) and get instant access to dozens of data centers around the world.

- Scale

The cloud industry is massive and growing at an incredible rate. For example, as of the end of 2023, AWS alone (not including the rest of Amazon’s businesses) is a $100 billion per year run-rate business. Yup, $100 billion per year. That’s billion with a "B." That’s not a typo. And they are still growing fast. The amount of money that major cloud providers can invest in their offerings utterly dwarfs what almost any other company could ever hope to invest into an on-prem deployment. So the cloud is not only already way ahead of where you could get to today with on-prem, but that lead will only widen over time.
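To get a feel for the pay-as-you-go and elasticity trade-offs above, here’s a minimal back-of-the-envelope sketch in JavaScript. Every number in it is a made-up placeholder (the $100 purchase price per CPU and the $0.05 per CPU-hour rental price are assumptions for illustration, not real pricing from any vendor), and the function names are hypothetical, so treat it as a way to structure your own estimate rather than as a real cost model:

```javascript
// Back-of-the-envelope comparison of buying vs. renting compute.
// All prices are hypothetical placeholders, not real vendor pricing.

// On-prem: you buy the hardware up front and pay for all of it,
// no matter how briefly you end up using it.
function onPremBurstCost({ cpus, purchasePricePerCpu }) {
  return cpus * purchasePricePerCpu;
}

// Cloud: you pay only for the hours you use, then scale back to zero.
function cloudBurstCost({ cpus, hours, pricePerCpuHour }) {
  return cpus * hours * pricePerCpuHour;
}

// Hours of continuous use after which renting one CPU has cost as much
// as buying it (ignoring power, cooling, staff, and other on-prem costs).
function breakEvenHours({ purchasePricePerCpu, pricePerCpuHour }) {
  return purchasePricePerCpu / pricePerCpuHour;
}

const prices = { purchasePricePerCpu: 100, pricePerCpuHour: 0.05 };
const burst = { cpus: 5000, hours: 48 };

const onPrem = onPremBurstCost({ ...burst, ...prices });
const cloud = cloudBurstCost({ ...burst, ...prices });

console.log(`Buy 5,000 CPUs up front: $${onPrem.toFixed(0)}`);
console.log(`Rent 5,000 CPUs for 48 hours: $${cloud.toFixed(0)}`);
console.log(`Break-even: ${breakEvenHours(prices).toFixed(0)} hours of continuous use`);
```

With these placeholder numbers, a short burst is far cheaper to rent than to buy, whereas hardware that runs around the clock eventually passes the break-even point. A real comparison would also need to include power, cooling, staff, hardware replacement, and so on.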

The cloud is more or less the de facto option for all new startups that launch today. The vast majority of existing companies are establishing a presence in the cloud as well, often preferring it for all new development.

|

Key takeaway #4

Using the cloud should be your default choice for most new deployments these days. |

So with all these advantages of using the cloud, does it ever make sense to go with on-prem?

When to Go with On-Prem

There are a number of cases where running servers yourself on-prem still makes sense:

- You already have an on-prem presence

If it ain’t broke, don’t fix it. If your company has been around a while and already has its own data centers—you’ve already made all the up-front investments, and things are working well for you—then sticking with on-prem may make sense. Even if your on-prem deployment isn’t working especially well, and you can see the benefits of using the cloud (e.g., increased agility for your company), bear in mind that migrating from on-prem to the cloud can be hugely expensive and time-consuming, and those costs can outweigh any potential benefits.

- You have load patterns that are a better fit for on-prem

Certain load patterns may be a better fit for on-prem than the cloud. For example, some cloud providers charge a lot of money for bandwidth and disk space usage, so if your apps are sending or storing huge amounts of data, you may find it cheaper to run servers on-prem (even when factoring in all the up-front and ongoing costs). Another example is if your company is large enough that you can afford to buy and manage your own servers, and you have apps that handle a lot of traffic, but that traffic is fairly steady and predictable. In that case, you aren’t benefiting as much from the elasticity of the cloud, and may find it cheaper to run things on-prem.[8] One final example is if you need access to very specific hardware (e.g., certain types of CPUs, GPUs, or hard drives): you can’t always get that in the cloud, and may have to buy it yourself. But a word of warning: it’s easy to get the cost comparison wrong. You have to factor in not only what you pay for servers, bandwidth, and disk space, but also costs such as power, cooling, redundancy, data center employees, the need to replace your own hardware over time, and so on.

- You need more control over your data centers

When you use the cloud, you hand over a lot of control to cloud vendors. In some cases, you might not want to give these vendors that much power. For example, if your business competes with the cloud vendor, which isn’t too unlikely given that the larger cloud vendors (e.g., Amazon, Google, and Microsoft) compete in dozens of different industries, you might not want them having control over your infrastructure. You may also be wary of the potential for cloud vendors to suddenly change pricing. For the most part, the fierce competition between the major cloud vendors these days has driven prices down, rather than up, but there have been notable sudden price increases: for example, Google Cloud increased storage pricing by as much as 50% in 2022, and Vercel made a number of pricing changes in 2024, most of which reduced costs for its users, though a small percentage of users saw costs increase by as much as 10x.

A 50% or 10x increase in pricing can be catastrophic for a business. One way to avoid it is to avoid the cloud entirely; another is to be very picky with which cloud vendors you use; and one more option is to negotiate long-term contracts. The one option I would avoid is to try to design your infrastructure to avoid vendor lock-in: that is, you avoid using any services that are proprietary to the vendor, so you can "easily" migrate away from that vendor if necessary. For just about any non-trivial deployment, you are locked in to any toolset you pick, and if you have to migrate, it will never be easy. If you deploy into the cloud and use every cloud-native service, you’ll be locked in to those services, most of which won’t be available if you have to migrate anywhere else; if you deploy into the cloud, avoid the cloud-native services, and build your own bespoke tooling instead, now you’ll be locked in to your own tooling, most of which won’t work anywhere else.

In most cases, trying to avoid vendor lock-in has a high cost and low payoff: your initial deployment will take N months longer, and in the rare case that you have to migrate in the future, you might save as much as N months on that migration. So in the absolute best case, you pay a higher cost now to maybe break even later, which is a bad trade-off. But it’s likely even worse than this, as reality tends to function like the second law of thermodynamics: some energy is always lost. In between the initial deployment and your potential future migration, your software and hardware will evolve and change, and some degree of lock-in to the vendor will almost certainly happen. As a result, you’ll save far less than N months on future migrations. So unless you end up migrating many times, you’re typically far better off picking the best tooling available (e.g., if you’re deploying to the cloud, using cloud-native tooling) and getting live as quickly as possible.

- You have compliance requirements that are a better fit for on-prem

Certain compliance standards, regulations, laws, auditors, and even customers have not yet adapted to the world of the cloud, so depending on the industry you’re in and the types of products you’re building, you may find that on-prem is a better fit.
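Coming back to the vendor lock-in trade-off described earlier in this list, the argument can be sketched as a quick expected-value calculation. This is a simplified model under stated assumptions: the function name and all the input values (the probability that you ever migrate, and the fraction of the savings that survives as lock-in creeps back in) are hypothetical, so plug in your own estimates:

```javascript
// Expected net benefit, in months, of designing to avoid vendor lock-in.
// Negative means the effort costs more than it is expected to save.
// All inputs are hypothetical estimates, not measured data.
function expectedNetMonthsSaved({
  extraMonthsUpFront,        // N: extra time the initial deployment takes
  migrationProbability,      // chance (0 to 1) that you ever migrate
  fractionOfSavingsRetained, // share (0 to 1) of the N months you still save
}) {
  // You always pay the up-front cost...
  const cost = extraMonthsUpFront;
  // ...but you only collect savings if a migration happens, and only the
  // portion that has not eroded while your systems evolved.
  const expectedSavings =
    migrationProbability * fractionOfSavingsRetained * extraMonthsUpFront;
  return expectedSavings - cost;
}

// Absolute best case: you definitely migrate and keep all the savings.
const bestCase = expectedNetMonthsSaved({
  extraMonthsUpFront: 6,
  migrationProbability: 1,
  fractionOfSavingsRetained: 1,
});

// A more typical guess: migration is unlikely and some lock-in crept in.
const typicalCase = expectedNetMonthsSaved({
  extraMonthsUpFront: 6,
  migrationProbability: 0.1,
  fractionOfSavingsRetained: 0.5,
});

console.log(`Best case: ${bestCase.toFixed(1)} months`);
console.log(`Typical case: ${typicalCase.toFixed(1)} months`);
```

Even in the best case, the model only breaks even; with any less-than-certain migration or any erosion of the savings, the result is negative.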

It’s worth mentioning that it doesn’t have to be cloud vs on-prem; it can also be cloud and on-prem, as discussed next.

When to Go with Hybrid

A hybrid deployment is when you use a mixture of cloud and on-prem. The most common use cases for this are:

- Partial cloud migration

Many companies that have on-prem deployments are adopting the cloud, but not always 100%. They might migrate some of their apps to the cloud, and develop most new apps in the cloud, but they keep some apps on-prem, either temporarily (as the migration happens, which can take years) or permanently (some apps are not worth the cost to migrate).

- Right tool for the job

The cloud can be a better fit for certain types of software than others. For example, if you have an app with traffic patterns that can spike wildly up and down (e.g., an ecommerce app with spikes on certain holidays), you might choose to run that app in the cloud to take advantage of the cloud’s elasticity; and if you have another app with steady traffic patterns that uses lots of disk space and bandwidth, you may choose to run that app on-prem to save on costs. In short, pick the right tool for the job!

My goal with this blog post series is to allow as many readers as possible to try out the examples, and to do so as quickly and cheaply as possible, so the cloud—especially cloud services with free tiers—is the right tool for this job. So while most of the underlying concepts will apply to both on-prem and the cloud, most of the examples in this series will use the cloud.

Broadly speaking, there are two types of cloud computing offerings:

- Platform as a Service (PaaS)

- Infrastructure as a Service (IaaS)

We’ll discuss each of these in the next two sections.

Deploying an App Using PaaS

One of the first cloud computing offerings from AWS was Elastic Compute Cloud (EC2), which came out in 2006, and allowed you to rent servers from AWS. This was the first Infrastructure as a Service (IaaS) offering, which gives you access directly to computing resources, such as servers. This dramatically reduced the time it took to do the hardware aspects of operations (e.g., buying and racking servers), but it didn’t help as much with the software aspects of operations, such as installing the app and its dependencies, setting up databases, configuring networking, and so on.

About a year later, in 2007, a company called Heroku came out with one of the first Platform as a Service (PaaS) offerings.[9] The key difference with PaaS is that the focus is on higher level primitives: not just the underlying infrastructure (i.e., servers), but also application packaging, deployment pipelines, database management, and so on.

The difference between IaaS and PaaS isn’t so black and white; it’s really more of a continuum. Some PaaS offerings are higher level than Heroku; some are lower level; many IaaS providers have PaaS offerings too (e.g., AWS offers Elastic Beanstalk, GCP offers App Engine); and so on. The difference really comes down to this: PaaS gives you a full, opinionated software delivery process; IaaS gives you low-level primitives to create your own software delivery process.

The best way to get a feel for the difference is to try it out. You’ll try out a PaaS in this section and an IaaS in the next section.

There are many PaaS offerings out there, including Heroku, Fly.io, Vercel, Firebase, Render, Railway, Platform.sh, and Netlify. For the examples in this blog post series, I wanted a PaaS that met the following requirements:

- There is a free tier or free credits so that readers can try it out at no cost.

- You can deploy application code, without having to set up a build system, framework, Docker image, etc. (these are topics you’ll learn about later in the series).

- You can deploy directly from a folder, without having to mess with version control (also a topic you’ll see later in the series).

Fly.io is the best fit for these criteria: it offers free usage allowances on all its plans, and new customers get $5 in free credits to cover any initial usage costs beyond the free allowances, so running the examples in this blog post series shouldn’t cost you anything; it supports Buildpacks for automatically packaging code for deployment (no need to worry about how this works now, we’ll come back to packaging in Part 2); and it provides a command-line tool called flyctl that lets you deploy code straight from your computer.

Example: deploying an app using Fly.io

Here’s what you need to do to use Fly.io to deploy the sample app:

Step 1: Install flyctl. This will give you the fly CLI tool, which you can use to manage and deploy your apps.

Step 2: Sign up for a Fly.io account. Run the fly auth signup command:

$ fly auth signup

This will pop open your web browser to a sign-up form. Note that you will need to enter a credit card, but as mentioned before, the examples in this blog post series shouldn’t cost you anything.

If you already have a Fly.io account, log in by running:

$ fly auth login

Step 3: Configure the build. Create a file called fly.toml in the same sample-app folder as your app.js file with the contents shown in Example 2:

[build]

builder = "paketobuildpacks/builder:base" # (1)

buildpacks = ["gcr.io/paketo-buildpacks/nodejs"] # (2)

[http_service]

internal_port = 8080 # (3)

force_https = true

auto_stop_machines = true

auto_start_machines = true

min_machines_running = 0

Normally, with real-world applications, you wouldn’t need this config file, as Fly.io can recognize many popular app frameworks automatically, but to keep this first blog post as simple as possible, I’ve intentionally omitted a lot of code from the sample app that you’d have in a real app, so you need to help Fly.io with a few hints:

| 1 | Use Buildpacks to package the app. |

| 2 | Use the Node.js Buildpack specifically. |

| 3 | Send requests to the app on port 8080. |

Step 4: Launch the app. Run fly launch:

$ fly launch --generate-name --copy-config --yes

This will kick off a process that builds and deploys your app. It should take about five minutes the very first time. When it’s done, you should see log output that contains the URL for your deployed app:

Visit your newly deployed app at https://<NAME>.fly.dev/

Where <NAME> is a randomly generated name for your app deployment. Open that URL up in your web browser, and you should see:

Hello, World!

And there you go! In a handful of commands, you now have your app running on a server.
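If you’d rather check the deployment from a script than from a browser, here’s a minimal smoke-test sketch. It assumes Node.js 18 or newer (for the built-in fetch function) and that the app responds with the same "Hello, World!" text as the sample app; the file name smoke-test.js and the function names are hypothetical:

```javascript
// Minimal smoke test for a deployed "Hello, World!" app.
// Usage (hypothetical file name): node smoke-test.js https://<NAME>.fly.dev/
// Assumes Node.js 18+ for the built-in fetch().

// Pure check, kept separate from the network call so it is easy to test.
function looksHealthy(status, body) {
  return status === 200 && body.trim() === 'Hello, World!';
}

// Fetch the URL and apply the check to the response.
async function smokeTest(targetUrl) {
  const response = await fetch(targetUrl);
  const body = await response.text();
  return looksHealthy(response.status, body);
}

// Only hit the network when a URL is passed on the command line.
const targetUrl = process.argv[2];
if (targetUrl) {
  smokeTest(targetUrl)
    .then((ok) => {
      console.log(ok ? 'OK: app is up' : 'FAIL: unexpected response');
      process.exit(ok ? 0 : 1);
    })
    .catch((err) => {
      console.error(`FAIL: ${err.message}`);
      process.exit(1);
    });
}
```

Keeping the check in its own function means you can reuse it later, for example against the IP address of a server you manage yourself.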

|

Get your hands dirty

Here are a few exercises you can try at home to get a better feel for what you get out-of-the-box with a PaaS such as Fly.io:

|

When you’re done experimenting with Fly.io, you should undeploy your app using the following command:

$ fly apps destroy <NAME>

Where <NAME> is the auto-generated name for your app (which shows up in all the log output and the URL of your app).

Running destroy ensures that your account doesn’t start accumulating any unwanted charges.

How PaaS stacks up

Using a PaaS typically means you get not just a server, but a lot of powerful functionality out-of-the-box, such as the following:

- Multiple servers

Fly.io can easily scale your app across multiple servers, which can help with scalability and high availability. You’ll learn more about this topic in Part 3.

- Domain names

Fly.io automatically creates a custom subdomain for your app (e.g., <NAME>.fly.dev); it also supports custom domain names (e.g., your-domain.com). You’ll learn more about this topic in Part 7 [coming soon].

- TLS certificates and termination

Note how the Fly.io URL is https, not http. This means Fly.io also takes care of TLS certificates and TLS termination, a topic you’ll learn more about in Part 8 [coming soon].

- Monitoring

Fly.io automatically aggregates logs and metrics in a single place, which is helpful in troubleshooting and understanding the status of your app. You’ll learn more about this topic in Part 10 [coming soon].

- Automated deployment

Fly.io can automatically roll out updates to your app with zero downtime. You’ll learn more about this topic in Part 5.

This is the power of PaaS: in a matter of minutes, a good PaaS can take care of so many software delivery concerns for you. It’s like magic. And that’s the greatest strength of PaaS: it just works!

Except when it doesn’t. When that happens, this very same magic becomes the greatest weakness of PaaS. By design, with a PaaS, just about everything is happening behind the scenes, so if something doesn’t work, it can be very hard to debug it or fix it. Moreover, to make the magic possible, most PaaS offerings have a number of limitations: e.g., limitations on what you can deploy, what types of apps you can run, what sort of access you can have to the underlying hardware, what sort of hardware is available, and so on. If the PaaS doesn’t support it—if the CLI or UI the PaaS provides doesn’t expose the ability to do something you need—you typically can’t do it at all.

As a result, while many projects start on PaaS, if they grow big enough and require more control, they end up migrating to IaaS, which is the topic of the next section.

Deploying an App Using IaaS

Broadly speaking, the IaaS space falls into three buckets:

- Virtual private server (VPS)

There are a number of companies that primarily focus on giving you access to a virtual private server (VPS) as cheaply as possible. These companies might offer a few other features (e.g., networking, storage) as well, but the main reason you’d go with one of these providers is that you want a replacement for racking your own servers: someone else does it for you and gives you access. Some of the big players in this space include Hetzner, DigitalOcean, Vultr, Linode (now known as Akamai Connected Cloud after the acquisition), BuyVM, First Root, and OneProvider.

- Content delivery networks (CDNs)

There are also a number of companies that primarily focus on content delivery networks (CDNs), which are servers that are distributed all over the world, typically for the purpose of serving and caching content (especially static assets, such as images, JavaScript, and CSS) close to your users (you’ll learn more about CDNs in Part 9 [coming soon]). Again, these companies might offer a few other features (e.g., protection against attacks), but the main reason you’d go with one of these providers is that your user base is geographically distributed, and you need a fast and reliable way to serve them content with low latency. Some of the big players in this space include Cloudflare, Akamai, Fastly, and Imperva.

- Cloud providers

There are also a small number of very large companies trying to provide all-purpose cloud solutions that offer everything: VPS, CDN, containers, serverless, data storage, file storage, machine learning, natural language processing, edge computing, and more. The big players in this space include Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), Alibaba Cloud, IBM Cloud, and Oracle Cloud Infrastructure (OCI).

In general, the VPS and CDN providers are specialists in their respective areas, so they will typically beat a general cloud provider in terms of features and pricing in those areas: for example, a VPS from Hetzner is usually much faster and cheaper than one from AWS. So if you only need those specific items, you’re better off going with a specialist. However, if you’re building the infrastructure for an entire company, especially one that is in the later stages of its DevOps evolution, your architecture usually needs many types of infrastructure, and the general-purpose cloud providers will typically be a better fit, as they can give you a one-stop-shop to meet all your needs.

For the examples in this blog post series, I wanted an IaaS provider that met the following requirements:

- There is a free tier or free credits so that readers can try it out at no cost.

- It should be one of the more popular cloud providers, so you’re learning something you’re more likely to use at work.

- It should provide a wide range of cloud services that support the many DevOps and software delivery examples in the rest of this blog post series.

AWS is the best fit for these criteria: it offers a generous free tier, so running the examples in this blog post series shouldn’t cost you anything; it’s the most popular cloud provider, with a 31% share of the market; and it provides a huge range of reliable and scalable cloud-hosting services, including servers, serverless, containers, databases, machine learning, and much more.

Example: deploying an app using AWS

Here’s what you need to do to use AWS to deploy the sample app:

Step 1: Sign up for AWS. If you don’t already have an AWS account, head over to https://aws.amazon.com and sign up. When you first register for AWS, you initially sign in as the root user. This user account has access permissions to do absolutely anything in the account, so from a security perspective, it’s not a good idea to use the root user on a day-to-day basis. In fact, the only thing you should use the root user for is to create other user accounts with more-limited permissions, and then switch to one of those accounts immediately, as per the next step. Make sure to store the root user credentials in a secure password manager, such as 1Password (you’ll learn more about secrets management in Part 8 [coming soon]).

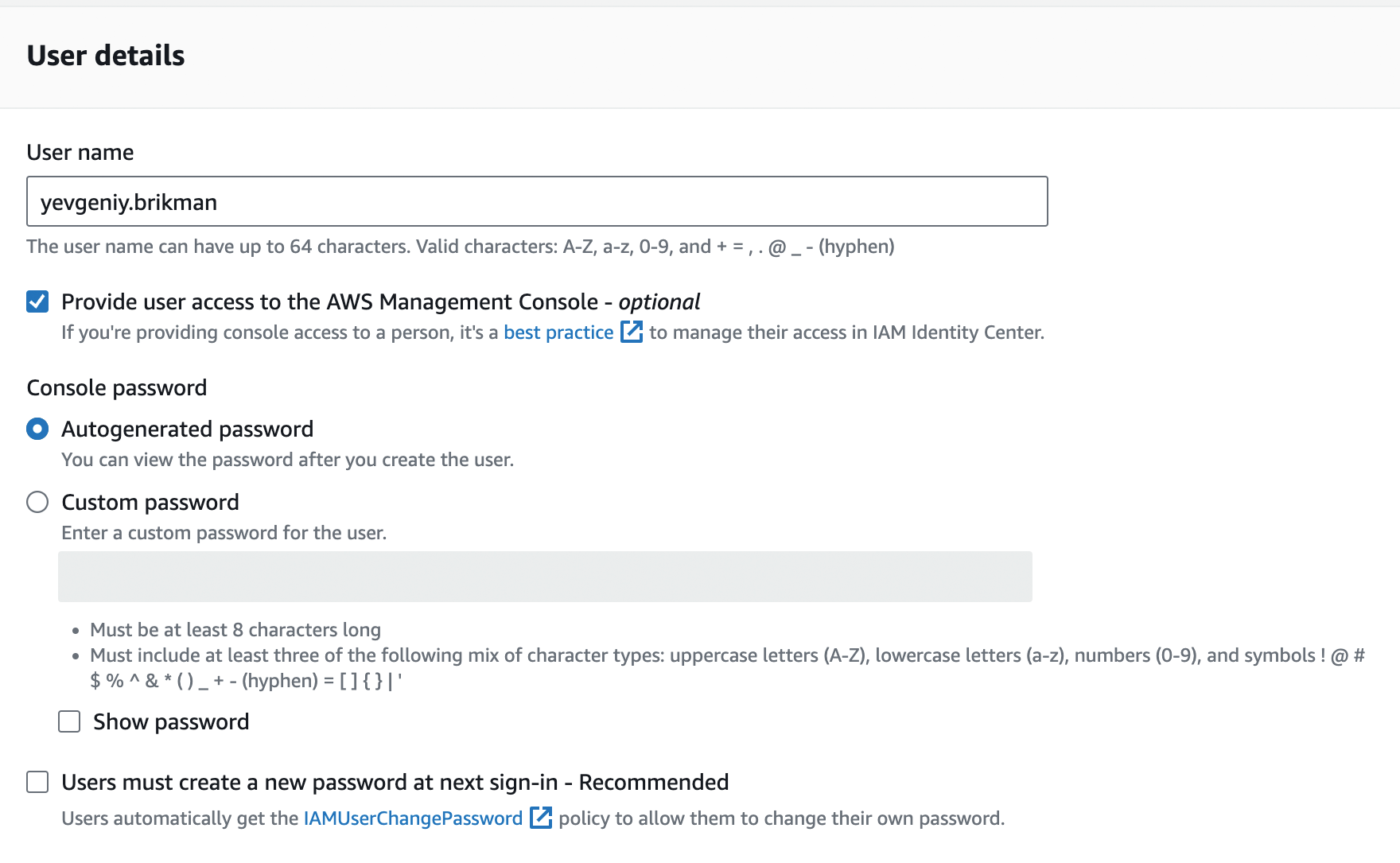

Step 2: Create an IAM user. To create a more-limited user account, you will need to use the Identity and Access Management (IAM) service. IAM is where you manage user accounts as well as the permissions for each user. We’ll go into more detail on this topic in Part 8 [coming soon], but for now, we’ll do the simplest thing we can to get started. To create a new IAM user, go to the IAM Console (note: you can use the search bar at the top of the AWS Console to find services such as IAM), click Users, and then click the Create User button. Enter a name for the user, and make sure "Provide user access to the AWS Management Console" is selected, as shown in Figure 10 (note that AWS occasionally makes changes to its web console, so what you see may look slightly different from the screenshots in this blog post series).

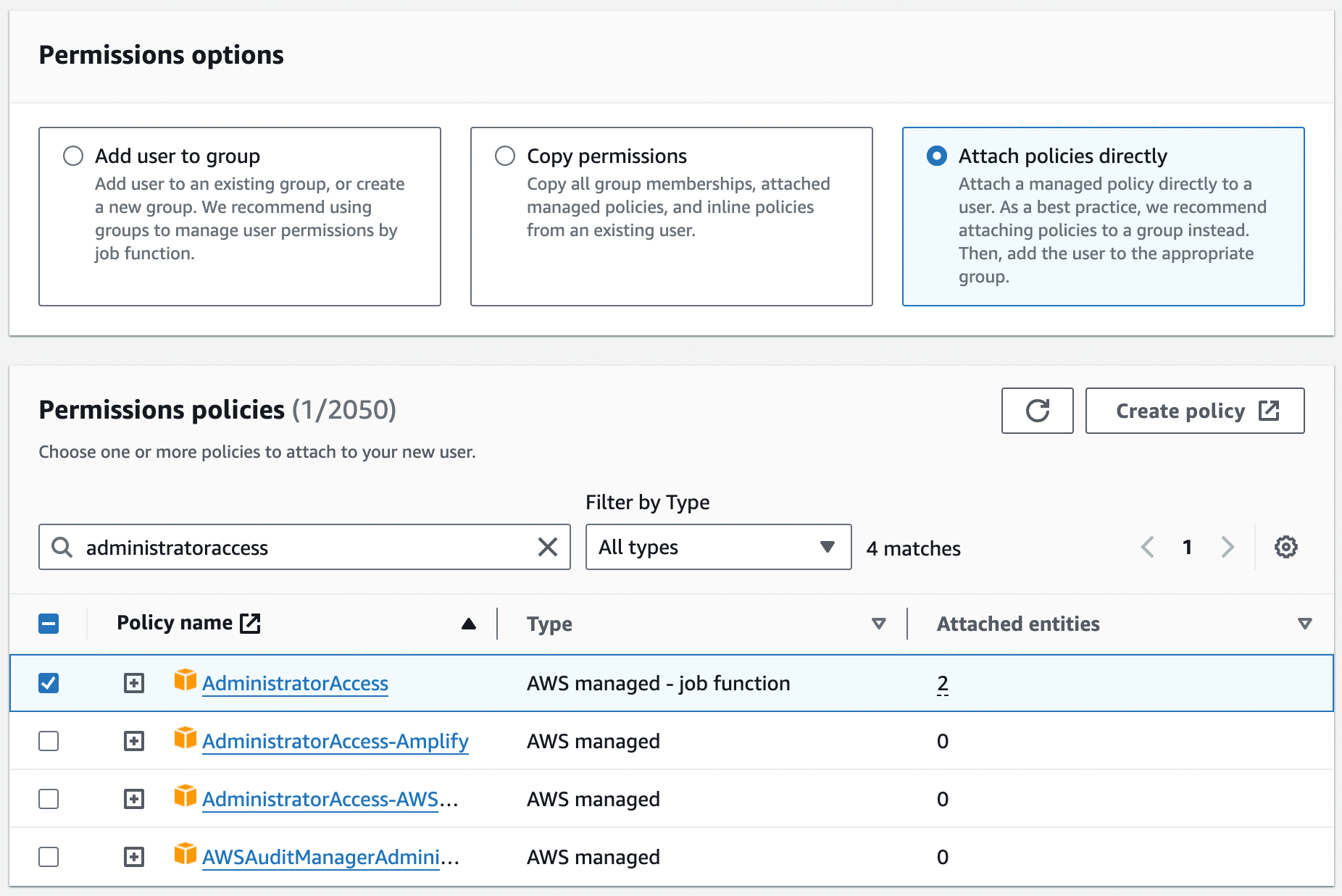

Click the Next button. AWS will ask you to add permissions to the user. By default, new IAM users have no permissions whatsoever and cannot do anything in an AWS account. To give your IAM user the ability to do something, you need to associate one or more IAM Policies with that user’s account. An IAM Policy is a JSON document that defines what a user is or isn’t allowed to do. You can create your own IAM Policies or use some of the predefined IAM Policies built into your AWS account, which are known as Managed Policies.
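To make "an IAM Policy is a JSON document" more concrete, here’s a short sketch that builds two policy documents as JavaScript objects and prints them as JSON. The first mirrors what the AdministratorAccess Managed Policy grants (every action on every resource). The second is purely illustrative: the variable name and the choice of read-only EC2 actions are assumptions for this example, not something the rest of this series depends on:

```javascript
// An IAM Policy is a JSON document made up of one or more statements.

// Mirrors the AdministratorAccess Managed Policy: allow every action
// on every resource in the account.
const administratorAccess = {
  Version: '2012-10-17',
  Statement: [
    { Effect: 'Allow', Action: '*', Resource: '*' },
  ],
};

// A narrower, illustrative policy (hypothetical, not used in this series):
// allow only read-only "Describe" calls in the EC2 service.
const ec2ReadOnlyExample = {
  Version: '2012-10-17',
  Statement: [
    { Effect: 'Allow', Action: 'ec2:Describe*', Resource: '*' },
  ],
};

// Print the policies in the JSON form you would see in the IAM Console.
console.log(JSON.stringify(administratorAccess, null, 2));
console.log(JSON.stringify(ec2ReadOnlyExample, null, 2));
```

The contrast shows why AdministratorAccess is convenient for learning but broad: a single statement with wildcards for both Action and Resource permits everything.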

To run the examples in this blog post series, the easiest way to get started is to select "Attach policies directly" and add the AdministratorAccess Managed Policy to your IAM user (search for it, and click the checkbox next to it), as shown in Figure 11.[10]

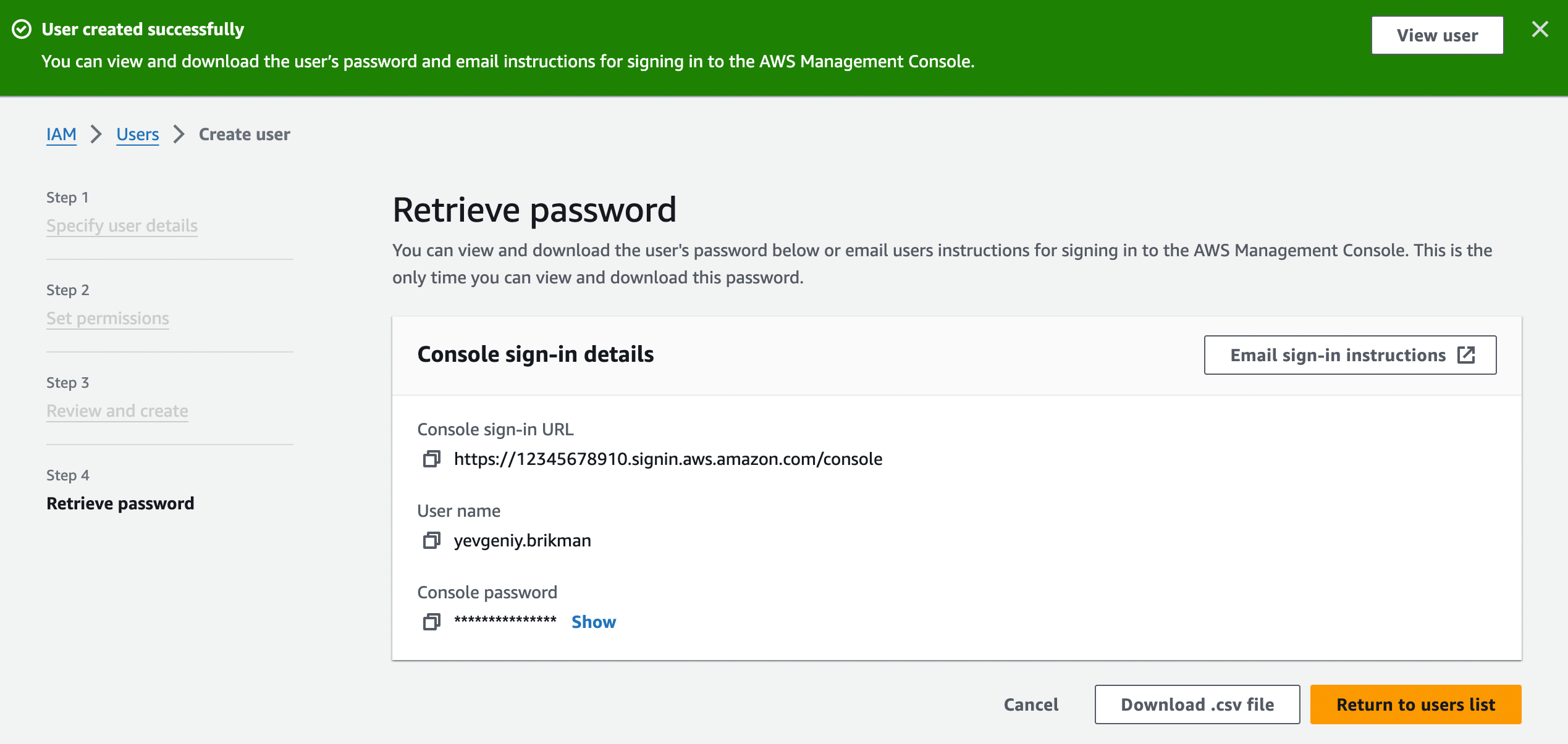

Figure 11. Adding the AdministratorAccess Managed IAM Policy to your new IAM user.

Click Next again and then the "Create user" button. AWS will show you the security credentials for that user, which consist of (a) a sign-in URL, (b) the user name, and (c) the console password, as shown in Figure 12. Save all three of these immediately in a secure password manager (e.g., 1Password), especially the console password, as it will never be shown again.

Step 3: Log in as the IAM user. Now that you’ve created an IAM user, log out of your AWS account, go to the sign-in URL you got in the previous step, and enter the user name and console password from that step to sign in as the IAM user.

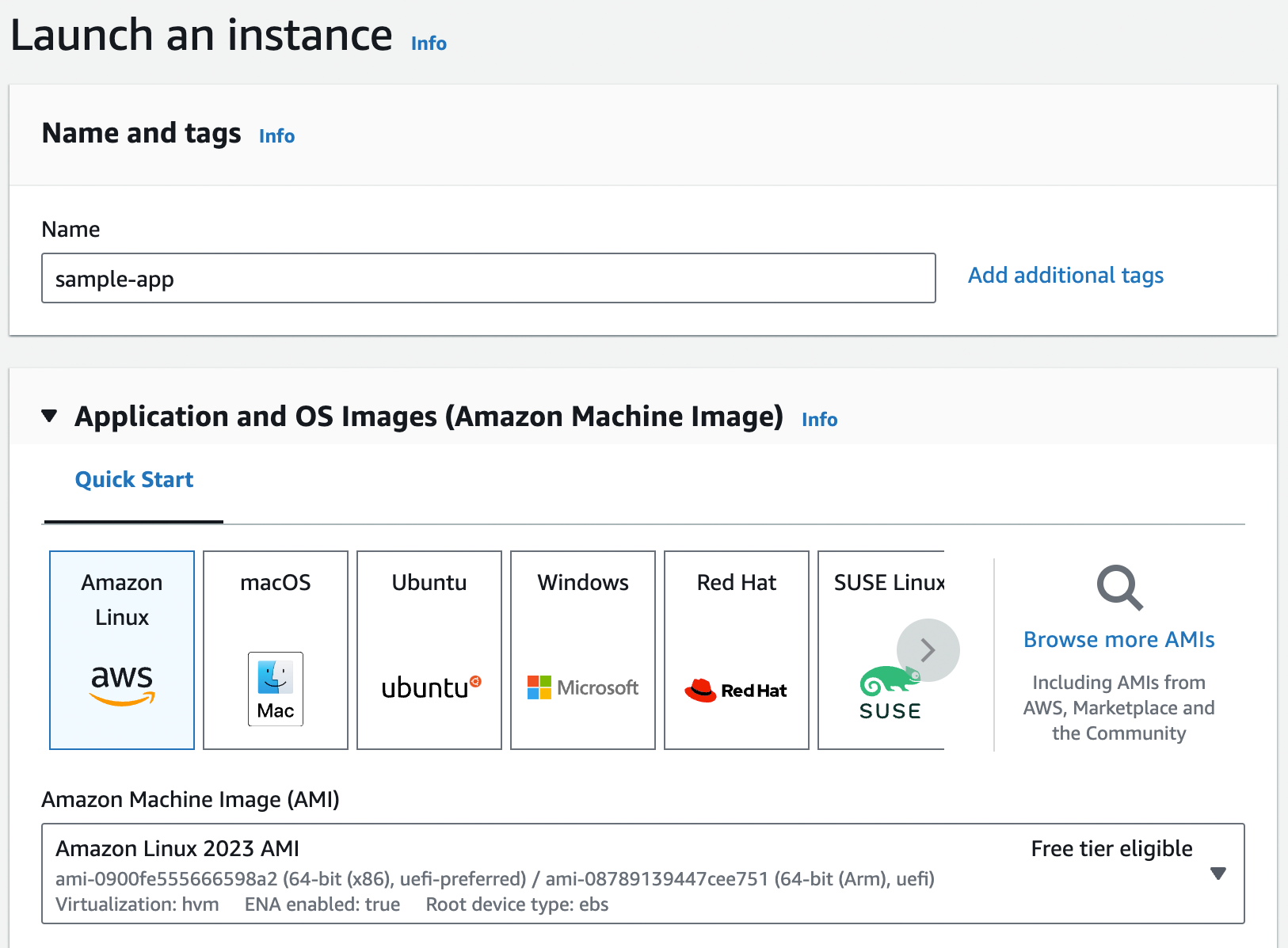

Step 4: Deploy an EC2 instance. Now you’re finally ready to deploy a server. You can do this through the AWS Elastic Compute Cloud (EC2) service, which lets you launch servers called EC2 instances. Head over to the EC2 Console and click the "Launch instance" button (as of April 2024, it’s an orange button in the middle of the page). This will take you to a page where you configure your EC2 instance, as shown in Figure 13.

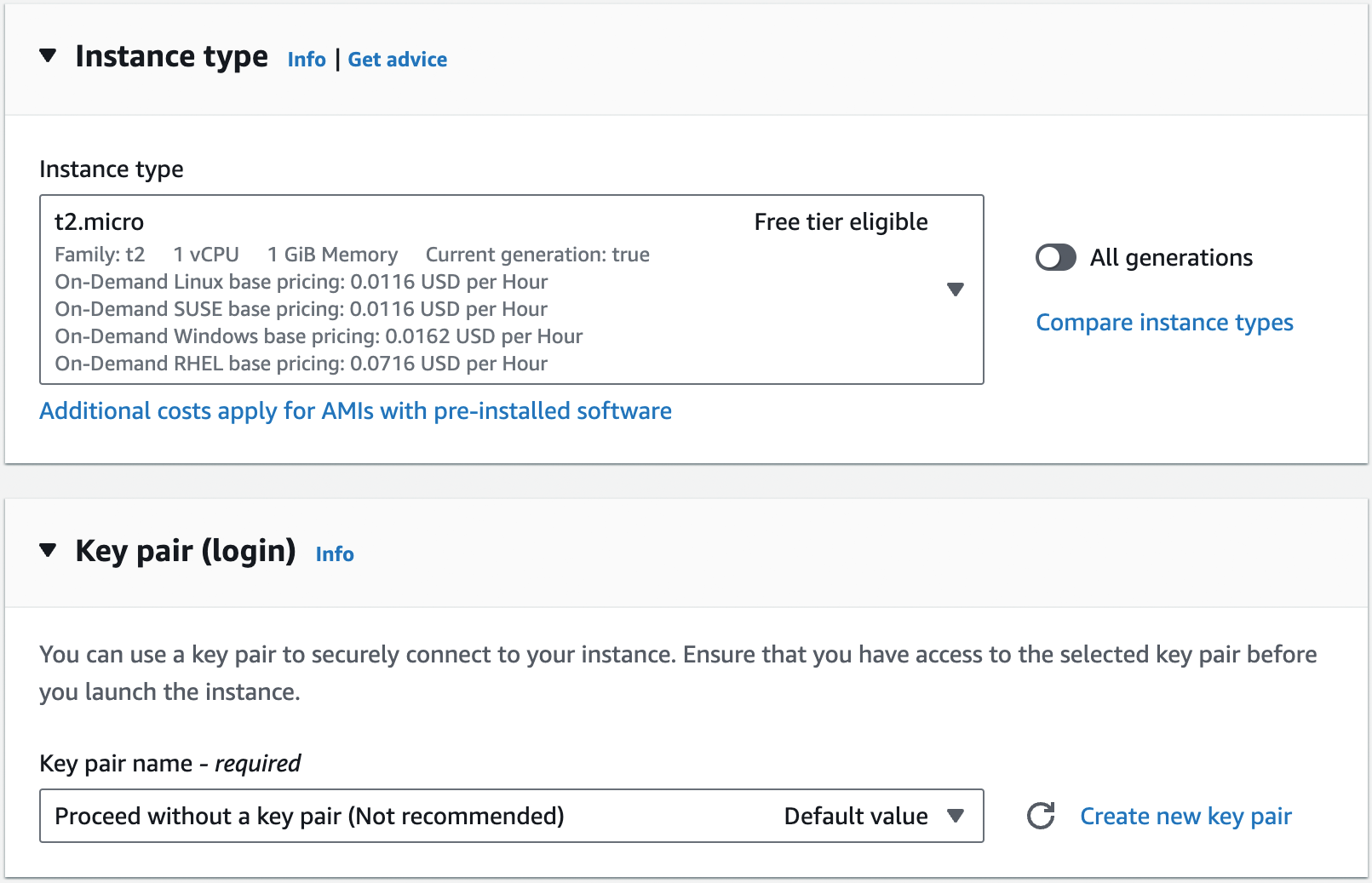

Fill in a name for the instance. Below that, you need to pick the operating system image to use (the Amazon Machine Image or AMI), which is a topic we’ll come back to in Part 2. For now, you can stick with the default, which should be Amazon Linux. Below that, you’ll need to configure the instance type and key pair, as shown in Figure 14.

The instance type specifies what type of server to use: that is, what sort of CPU, memory, hard drive, etc. it’ll

have. For this quick test, you can use the default, which should be t2.micro or t3.micro, small instances (1 CPU,

1GB of memory) that are part of the AWS free tier. The key pair can be used to connect to the EC2 instance via SSH, a

topic you’ll learn more about in Part 7 [coming soon]. You’re not going to be using SSH for this example, so

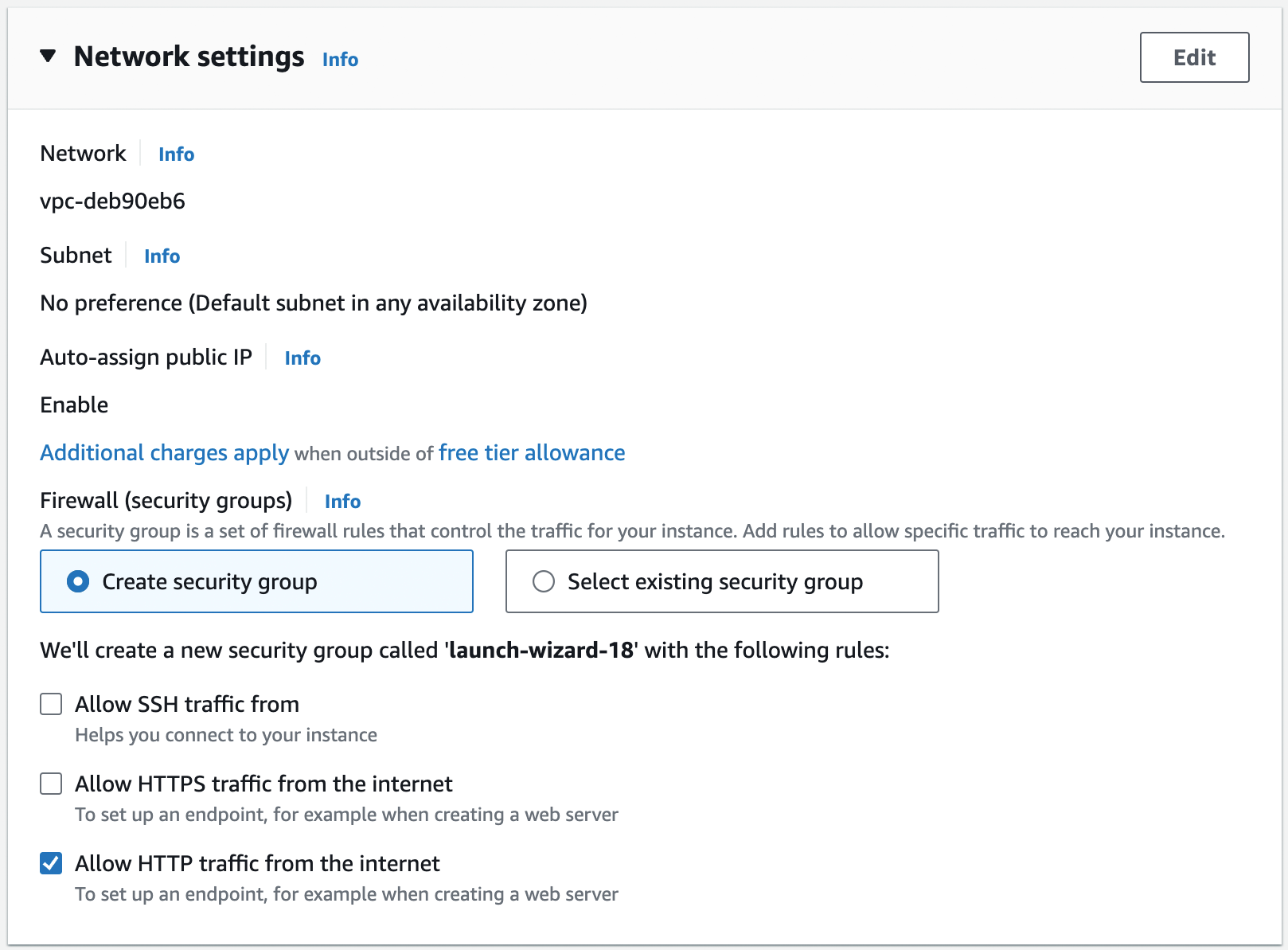

select "Proceed without a key pair." Scroll down to the network settings, as shown in Figure 15.

You’ll learn about networking in Part 7 [coming soon]. For now, you can leave most of these settings at their defaults: the Network should pick your Default VPC (in Figure 15, my Default VPC has the ID vpc-deb90eb6, but your ID will be different), the Subnet should be "No preference," and Auto-assign public IP should be "Enable." The only thing you should change is the Firewall (security groups) setting: select the "Create security group" radio button, disable the "Allow SSH traffic from" setting, and enable the "Allow HTTP traffic from the internet" setting, as shown in Figure 15. By default, EC2 instances have firewalls, called security groups, that don’t allow any inbound network traffic (a newly created security group does allow outbound traffic). Allowing HTTP traffic tells the security group to allow inbound TCP traffic on port 80 so that the sample app can receive requests and respond with "Hello, World!"
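To build some intuition for how an inbound rule works, here’s a toy model in JavaScript. It is a simplified illustration, not the AWS API: the rule shape and the function name are hypothetical, and for brevity the sketch only understands rules that apply to traffic from anywhere (0.0.0.0/0):

```javascript
// Toy model of security group inbound rules: a request is denied unless
// some rule allows it. This is an illustration, not the AWS API.

// Roughly what "Allow HTTP traffic from the internet" sets up:
// TCP on port 80 from any IP address.
const inboundRules = [
  { protocol: 'tcp', fromPort: 80, toPort: 80, cidr: '0.0.0.0/0' },
];

// Returns true if any rule matches the protocol and port of the request.
// Only the "anywhere" CIDR block (0.0.0.0/0) is supported in this sketch.
function isInboundAllowed(rules, { protocol, port }) {
  return rules.some(
    (rule) =>
      rule.cidr === '0.0.0.0/0' &&
      rule.protocol === protocol &&
      port >= rule.fromPort &&
      port <= rule.toPort
  );
}

console.log(isInboundAllowed(inboundRules, { protocol: 'tcp', port: 80 }));   // true
console.log(isInboundAllowed(inboundRules, { protocol: 'tcp', port: 8080 })); // false
console.log(isInboundAllowed(inboundRules, { protocol: 'tcp', port: 22 }));   // false
```

Under this model, an app listening on port 8080 would be unreachable from the outside, and SSH on port 22 is blocked as well, since no rule allows either port.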

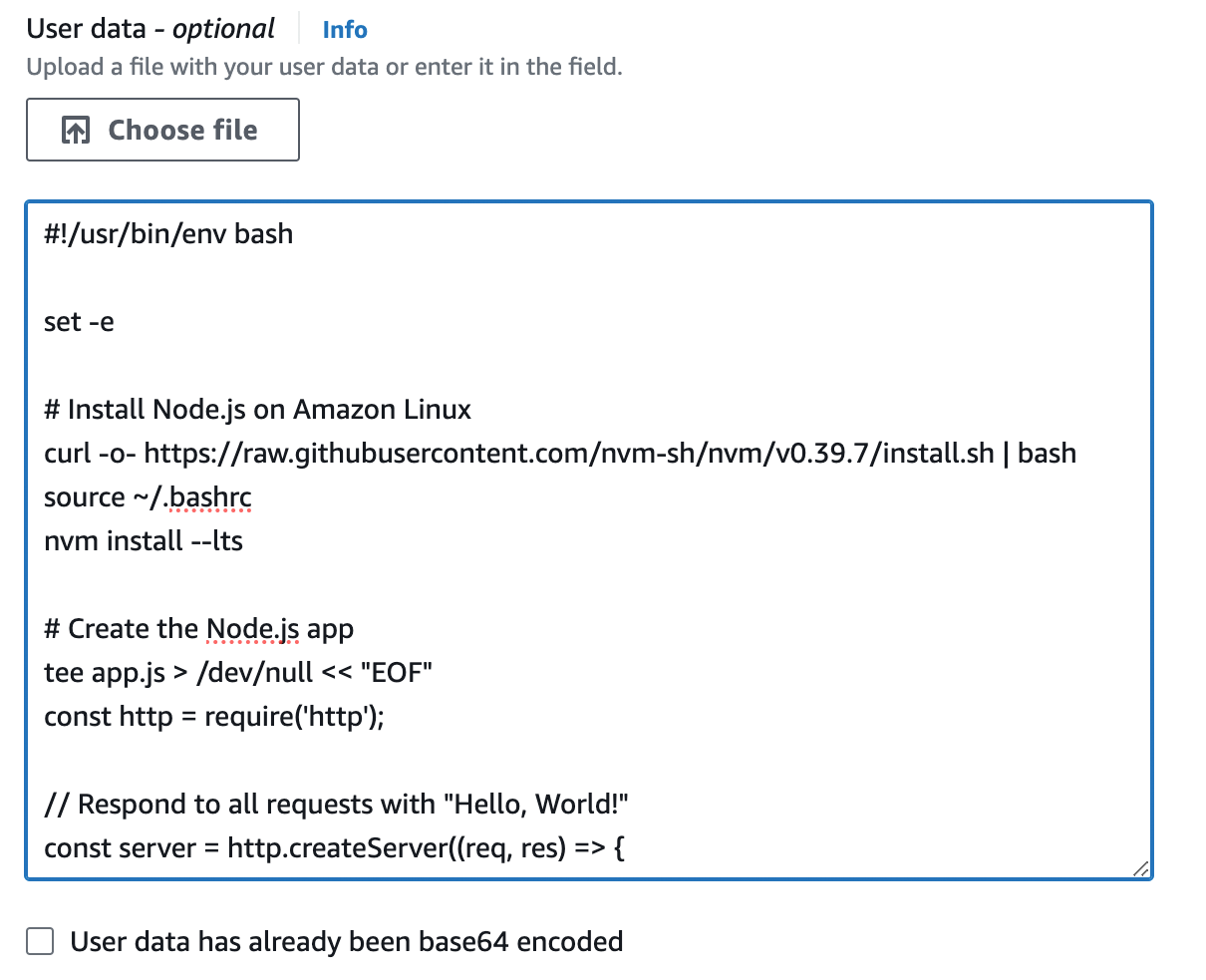

Next, open up the "Advanced details" section, and scroll down to "User data," as shown in Figure 16.

User data is a script that will be executed by the EC2 instance the very first time it boots up. Copy and paste the following script into user data:

#!/usr/bin/env bash

set -e

# (1)

curl -fsSL https://rpm.nodesource.com/setup_21.x | bash -

yum install -y nodejs

# (2)

tee app.js > /dev/null << "EOF"

const http = require('http');

const server = http.createServer((req, res) => {

  res.writeHead(200, { 'Content-Type': 'text/plain' });

  res.end('Hello, World!\n');

});

// (3)

const port = 80;

server.listen(port, () => {

  console.log(`Listening on port ${port}`);

});

EOF

# (4)

nohup node app.js &

You should also save a local copy of this script in ch1/ec2-user-data-script/user-data.sh, so you can reference it later. This Bash script will do the following when the EC2 instance boots:

| 1 | Install Node.js. |

| 2 | Write the sample app code to a file called app.js. This is the same Node.js code you saw earlier in the blog post, with one difference, as described next. |

| 3 | The only difference from the sample app code you saw earlier is that this code listens on port 80 instead of 8080, as that’s the port you opened up in the security group. |

| 4 | Run the app using node app.js, just like you did on your own computer. The only difference is the use of nohup and ampersand (&), which allows the app to run permanently in the background, while the Bash script itself can exit. |
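Since the only code difference between the two deployments is the port number, one option is to read the port from configuration rather than hard-coding it. The following is a minimal sketch of that idea, not how the sample app in this series is written: the PORT environment variable and the resolvePort function are assumptions for illustration:

```javascript
// Pick the port from an environment variable, falling back to 8080.
// PORT is a common convention but an assumption here; the sample app in
// this series hard-codes the port instead.
function resolvePort(env, fallback = 8080) {
  const parsed = Number.parseInt(env.PORT, 10);
  const valid = Number.isInteger(parsed) && parsed > 0 && parsed < 65536;
  return valid ? parsed : fallback;
}

console.log(resolvePort({}));              // 8080
console.log(resolvePort({ PORT: '80' }));  // 80
console.log(resolvePort({ PORT: 'abc' })); // 8080

// In the app itself, you would then call something like:
//   server.listen(resolvePort(process.env), ...);
```

With this approach, the same app.js could listen on 8080 locally and on 80 on the EC2 instance, with the difference expressed in how the app is started rather than in the code.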

|

Watch out for snakes: these examples have several problems

The approach shown here with user data has a number of drawbacks, as explained in Table 4:

You’ll see how to address all of these limitations later in the blog post series. Since this is just a first example for learning, it’s OK to use this simple, insecure approach for now, but make sure not to use this approach in production! |

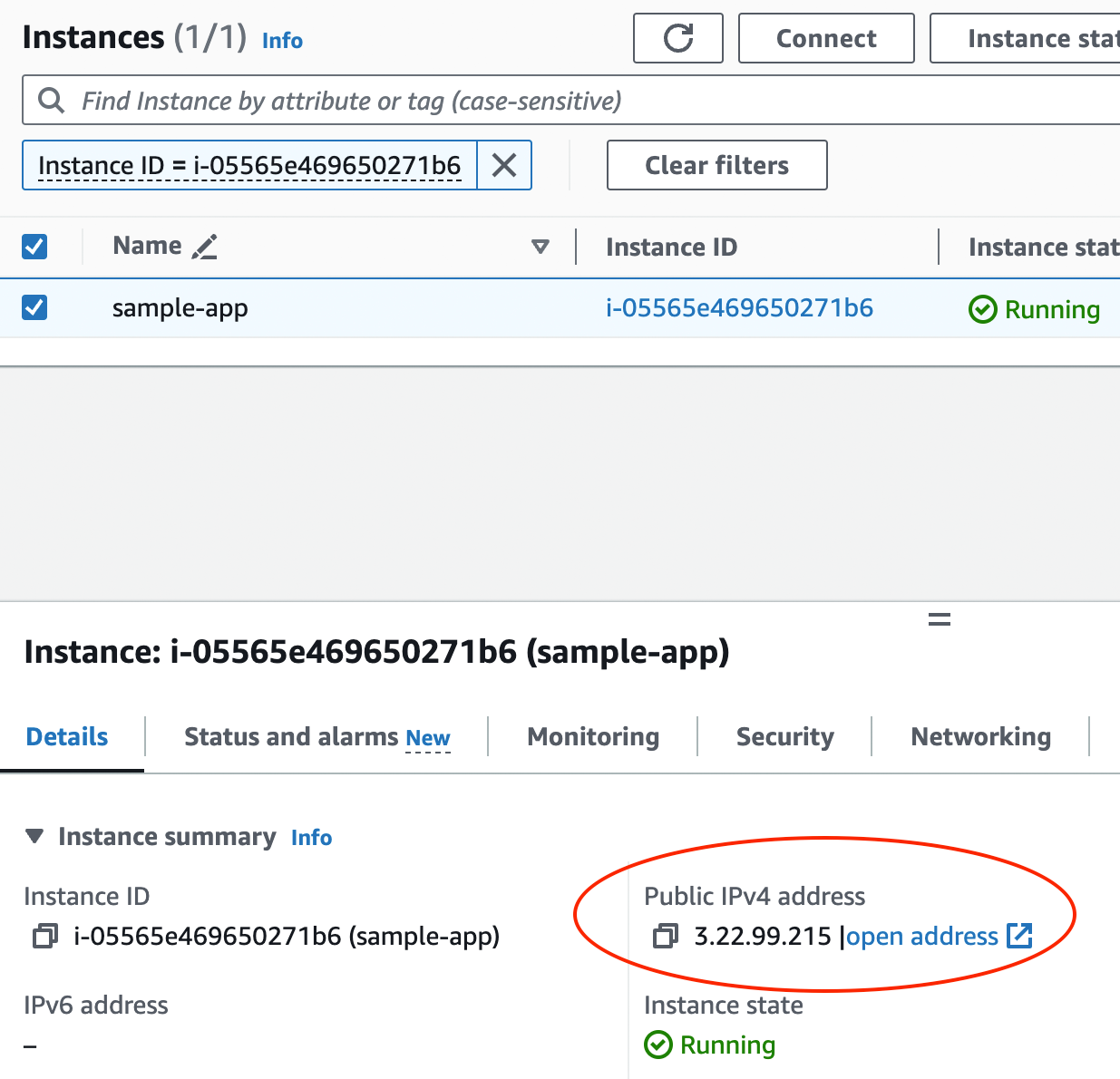

Leave all the other settings at their defaults and click "Launch instance." Once the EC2 instance has been launched, you should see the ID of the instance on the page (something like i-05565e469650271b6). Click on the ID to go to the EC2 instances page, where you should see your EC2 instance booting up. Once it has finished booting (you’ll see the instance state go from "Pending" to "Running"), which typically takes 1-2 minutes, click on the row with your instance, and in a drawer that pops up at the bottom of the page, you should see more details about your EC2 instance, including its public IP address, as shown in Figure 17.

Copy and paste that IP address, open http://<IP> in your browser (note: you have to actually type the http:// portion in your browser, or the browser may try to use https:// by default, which will not work; you’ll learn more about HTTPS in Part 8 [coming soon]), and you should see:

Hello, World!

Congrats, you now have your app running on a server in AWS!
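If you’d like to check the app from a script instead of the browser, here is a minimal sketch that uses only the built-in Node.js http module. The function names (isExpectedResponse, checkSampleApp) and the 5-second timeout are assumptions for illustration, and you need to replace <IP> with your instance’s public IP address.

```javascript
const http = require('http');

// Pure check: does this response look like the sample app's output?
function isExpectedResponse(status, body) {
  return status === 200 && body.trim() === 'Hello, World!';
}

// Fetch the URL over plain HTTP (the sample app does not support HTTPS)
// and resolve to true if the response matches the expected output.
function checkSampleApp(url, timeoutMs = 5000) {
  return new Promise((resolve, reject) => {
    const req = http.get(url, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => resolve(isExpectedResponse(res.statusCode, body)));
    });
    req.on('error', reject);
    // Give up if the server does not respond in time.
    req.setTimeout(timeoutMs, () => req.destroy(new Error('Request timed out')));
  });
}

// Example usage (replace <IP> with your instance's public IP address):
// checkSampleApp('http://<IP>/')
//   .then((ok) => console.log(ok ? 'App is up' : 'Unexpected response'))
//   .catch((err) => console.error('Request failed:', err.message));
```

If the request fails right after launch, the instance may still be running the user data script, so it can be worth waiting a minute and trying again.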

|

Get your hands dirty

Here are a few exercises you can try at home to get a better feel for an IaaS such as AWS:

|

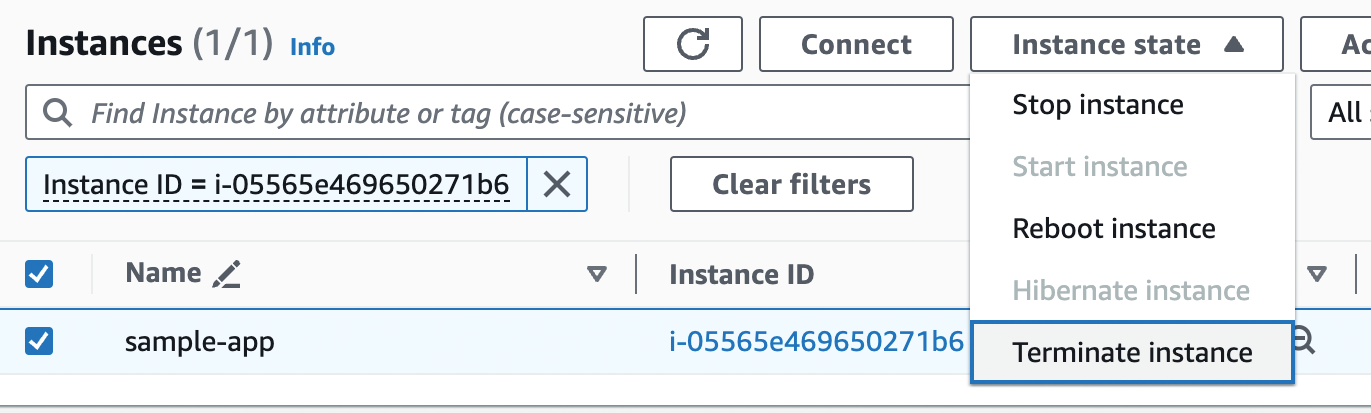

When you’re done experimenting with AWS, you should undeploy your app by selecting your EC2 instance, clicking "Instance state," and choosing "Terminate instance" in the drop-down, as shown in Figure 18. This ensures that your account doesn’t start accumulating any unwanted charges.

How IaaS stacks up

Since you’re using IaaS, what you see really is what you get: it’s just a single server. Unlike PaaS, you don’t get multiple servers, TLS certificates and termination, detailed monitoring, automated deployment, and so on out of the box. What you do get is access to all the low-level primitives, so you can build all those parts of the software delivery process yourself, as described in the rest of this blog post series. And that’s both the greatest strength and weakness of IaaS: you have much more control and visibility, so you have fewer limits, can customize things more, and meet a much wider set of requirements; but for those very same reasons, it’s much more work than using a PaaS.

Comparing PaaS and IaaS

Now that you’ve seen both IaaS and PaaS in action, the question is, when should you use one or the other? This is the topic of the next two sections.

When to Go with PaaS

Your customers don’t care what kind of CI / CD pipeline you have, or if you are running a fancy Kubernetes cluster, or if you’re on the newest NoSQL database. All that matters is that you can create a product that meets your customers' needs. Every single thing you do that isn’t absolutely required to create that product is wasteful. This may seem like a strange thing to say in a blog post series about DevOps and software delivery, but if you can create a great product without having to invest much in DevOps and software delivery, that’s a good thing.

|

Key takeaway #5

You should spend as little time on software delivery as you possibly can, while still meeting your company’s requirements. |

If you can find someone else who can take care of software delivery for you, and still meet your requirements, you should take advantage of that as much as possible. And that’s precisely what a good PaaS offers: out-of-the-box software delivery. If you can find a PaaS that meets your requirements, then you should use it, stick with it for as long as you can, and avoid having to recreate all those software delivery pieces until you absolutely have to.

Here are a few of the most common cases where a PaaS is usually the best choice:

- Side projects

If you want to put up a website for fun or for a hobby, the last thing you want to do is spend a bunch of time fighting with builds or pipelines or TLS certs. That’s precisely the sort of thing that can kill your passion for your side project. Therefore, use a PaaS, let them do all the heavy lifting, and focus all your time on the side project itself.

- Startups and small companies

If you’re building a new company, you should almost always start with a PaaS. In the vast majority of cases, startups live or die based on their product—whether they managed to build something the market wants—and not their software delivery process. Therefore, you typically want to invest as much time as possible into the product, and as little time as possible into the software delivery process. As you saw earlier in this blog post, you can get live on a PaaS in minutes. Moreover, as a startup, your requirements in terms of scalability, availability, security, and compliance are typically fairly minimal, so you can often go for years before you start to run into the limitations of a PaaS. It’s only when you find product/market fit, and start hitting the problem of having to scale your company—which is a good problem to have—that you may need to start thinking of moving off PaaS. But by then, you hopefully have grown large enough to have the time and resources available to invest in building a software delivery process on top of IaaS.

- New and experimental projects

Another common use case for PaaS is at established companies that want to try something new or experimental. Often, the software delivery processes within larger companies are slow and inefficient, making it hard to launch something and iterate on it quickly. Using a PaaS for these sorts of new projects can make experimentation far easier. Moreover, the new projects often don’t have the same requirements in terms of scalability, availability, security, and compliance as the company’s older and more mature products, so you are less likely to hit the limitations of a PaaS.

As a general rule, you want to use a PaaS whenever you can, and only move on to IaaS when a PaaS can no longer meet your requirements, as discussed in the next section.

When to Go with IaaS

Here are a few of the most common cases where IaaS is usually the best choice:

- Load

If you are dealing with a huge amount of traffic, the pricing of PaaS offerings may become prohibitively expensive. Moreover, even if you could afford the bill, most PaaS offerings only support relatively simple architectures, whereas handling high load & scale may require you to migrate to IaaS to run more complicated systems.

- Company size

PaaS offerings can also struggle to deal with larger companies. As you shift from a handful of developers to dozens of teams with hundreds or even thousands of developers, not only can PaaS pricing become untenable, but you may also hit limits in terms of the types of governance and access controls offered by the PaaS: e.g., allowing some teams to make some types of changes, but not others, may be difficult in a PaaS.

- Availability

Your business may need to provide uptime guarantees (e.g., service level agreements, or SLAs, a topic you’ll learn more about in Part 10 [coming soon]) that are higher than what your PaaS can provide. Moreover, when there is an outage or a bug, PaaS offerings are often limited in the type of visibility and connectivity options they provide: e.g., many PaaS offerings don’t let you SSH to the server (e.g., this has been a limitation in Heroku for over a decade), which can make debugging a lot harder. As your company and architecture grow larger and more complicated, being able to introspect your systems becomes more and more important, and this may be a reason to go with IaaS over PaaS.

- Security & compliance

One of the most common reasons to move off PaaS is to meet security and compliance requirements: e.g., SOC 2, ISO 27001, PCI, HIPAA, GDPR, etc. Although there are a handful of PaaS providers (e.g., Aptible) that can help you meet some types of compliance requirements, most of them do not. Moreover, the general lack of visibility into and control of the underlying systems can make it hard to meet certain security and compliance requirements.

You go with IaaS whenever you need more control, more performance, and/or more security. If your company gets big enough, one or more of these needs will likely push you from PaaS to IaaS—it’s the price of success.
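The four triggers above boil down to a simple rule: stay on PaaS unless at least one requirement exceeds what it can offer. Here is a rough sketch of that rule in code. The field names and the choosePlatform function are hypothetical, and real decisions involve far more nuance than a handful of boolean flags.

```javascript
// Hypothetical flags, one per trigger described in this section. Each is
// true when your requirements in that area exceed what your PaaS offers.
const IAAS_TRIGGERS = ['load', 'companySize', 'availability', 'securityAndCompliance'];

// Returns the suggested platform plus the triggers that drove the decision.
function choosePlatform(exceedsPaas) {
  const reasons = IAAS_TRIGGERS.filter((key) => exceedsPaas[key] === true);
  return { platform: reasons.length > 0 ? 'IaaS' : 'PaaS', reasons };
}

// Example usage: a small startup with no special requirements stays on
// PaaS, while a compliance requirement alone is enough to suggest IaaS.
// choosePlatform({});
// choosePlatform({ securityAndCompliance: true });
```

Note that PaaS is the default here: the function only suggests IaaS when you can name a concrete reason for it.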

|

Key takeaway #6

Go with PaaS whenever you can; go with IaaS when you have to. |

Conclusion

You now know the basics of deploying your app. Here are the 6 key takeaways from this blog post:

- Adopt the architecture and software delivery processes that are appropriate for your stage of company

Always pick the right tools for the job.

- Adopt DevOps incrementally, as a series of small steps, where each step is valuable by itself

Avoid big bang migrations and false incrementalism, where you only get value after all steps have been completed.

- You should never expose apps running on a personal computer to the outside world

Instead, deploy those apps on a server.

- Using the cloud should be your default choice for most new deployments these days

Run your server(s) in the cloud whenever you can. Use on-prem only if you already have an on-prem presence or you have load patterns or compliance requirements that work best on-prem.

- You should spend as little time on software delivery as you possibly can, while still meeting your company’s requirements

If you can offload your software delivery to someone else, while still responsibly meeting your company’s requirements, you should.

- Go with PaaS whenever you can; go with IaaS when you have to

A PaaS cloud provider lets you offload most of your software delivery needs, so if you can find a PaaS that meets your company’s requirements, you should use it. Only go with an IaaS if your company’s requirements exceed what a PaaS can offer.

One way to summarize these ideas is the concept of minimum effective dose: this is a term from pharmacology, where you try to use the smallest dose of medicine that will give you the biological response you’re looking for. That’s because just about every drug, supplement, and intervention becomes toxic at a high enough dose, so you want to use just enough to get the benefits, and no more. The same is true with DevOps: every architecture, process, and tool has a cost, so you want to use the most simple and minimal solution that gives you the benefits you’re looking for, and no more. Don’t use a fancy architecture or software delivery process if a simpler one will do; instead, always aim for the minimum effective dose of DevOps.

Knowing how to deploy your apps is an important step, but it’s just the first step in understanding DevOps and software delivery. There are quite a few steps left to go. One problem you may have noticed, for example, is that you had to deploy everything in this blog post by clicking around a web UI or manually running a series of CLI commands. Doing things manually is tedious, slow, and error-prone. Imagine if instead of one app, you had to deploy five or fifty; and imagine you had to do that many times per day. Not fun.

The solution is to automate everything by managing all of your infrastructure as code, which is the topic of Part 2, How to Manage Your Infrastructure as Code.
