Update, June 25, 2024: This blog post series is now also available as a book called Fundamentals of DevOps and Software Delivery: A hands-on guide to deploying and managing production software, published by O’Reilly Media!

This is Part 4 of the Fundamentals of DevOps and Software Delivery series. In Part 2 and Part 3, you wrote code using a variety of languages and tools, including JavaScript (Node.js), Bash, YAML (Ansible), HCL (OpenTofu, Packer), and so on. What did you do with all of that code? Is it just sitting on your computer? If so, that’s fine for learning and experimenting, where you’re the only one touching that code, but with most real-world code, software development is a team sport, and not a solo effort. Which means you need to figure out how to support many developers collaborating safely and efficiently on the same codebase.

In particular, you need to figure out how to solve the following problems:

- Code access

-

All the developers on your team need a way to access the same code so they can all collaborate on it.

- Integration

-

As multiple developers make changes to the same code base, you need some way to integrate their changes, handling any conflicts that arise, and ensuring that no one’s work is accidentally lost or overwritten.

- Correctness

-

It’s hard enough to make your own code work, but when multiple people are modifying that code at the same time, you need to find a way to prevent constant bugs and breakages from slipping in.

- Release

-

Getting a codebase working is great, but as you may remember from the Preface, code isn’t done until it’s generating value for your users and your company, which means you need a way to release the changes in your codebase to production on a regular basis.

These problems are all key parts of your software development life cycle (SDLC). In the past, many companies would come up with their own ad-hoc, manual SDLC processes: I’ve seen places where the developers would email code changes back and forth, spend weeks integrating changes together manually, test everything manually (if they did any testing at all), and release everything manually (e.g., by using FTP to upload code to a server). But just because you can do something doesn’t mean you should.

These days, we have far better tools and techniques for solving these problems. In this post, you’ll learn a few of these tools and techniques; in the next post, you’ll learn how to put these tools and techniques together into an effective SDLC—the kind of SDLC that makes it possible to achieve world-class results like the companies mentioned in Section 1.1, who are able to deploy thousands of times per day.

Here’s what we’ll cover in this post:

-

Version control

-

Build system

-

Automated testing

Let’s get started by understanding what version control is, why it’s important, and the best practices for using it.

Version Control

A version control system (VCS) is a tool that allows you to store source code, share it with your team, integrate your work together, and track changes over time. I cannot imagine developing software without using a VCS. It’s a central part of every modern SDLC. And there’s nothing modern about it: nearly 25 years ago, using version control was item #1 on the Joel Test (a quick test you can use to rate the quality of a software team), and the first version control system was developed roughly 50 years ago.

Despite all that, I still come across a surprising number of developers who don’t know how or why to use version control. If you’re one of these developers, it’s nothing to be ashamed of, and you’ll find that if you take a small amount of time to learn it now, it’s one of those skills that will benefit you for years to come.

Here’s what the next few sections will cover:

- Version control primer

-

A quick intro to version control concepts.

- Example: a crash course on Git

-

Get hands-on practice using version control by learning to work with Git.

- Example: store your code in GitHub

-

Get even more hands-on practice using version control by learning to push your Git repo to GitHub.

- Version control best practices

-

Learn how to write good commit messages, keep your codebase consistent, review code to catch bugs and spread knowledge, and ensure your version control system is protected against common security threats.

If you’re already familiar with version control and Git, feel free to skip the next two sections. However, I recommend you still go through the third section with the GitHub example, as many of the subsequent examples in the blog post series build on top of GitHub (e.g., using GitHub actions for CI / CD in Part 5).

Version Control Primer

Have you ever written an essay in Microsoft Word? Maybe you start with a file called essay.doc, but then you realize you need to do some pretty major changes, so you create essay-v2.doc; then you decide to remove some big pieces, but you don’t want to lose them, so you put those in essay-backup.doc, and move the remaining work to essay-v3.doc; maybe you work on the essay with your friend Anna, so you email her a copy, and she starts making edits; at some point, she emails you back the doc with her updates, which you then manually combine with the work you’ve been doing, and save that under the new name essay-v4-anna-edit.doc; you keep emailing back and forth, and you keep renaming the file, until minutes before the deadline, you finally submit a file called something like essay-final-no-really-definitely-final-revision3-v58.doc.

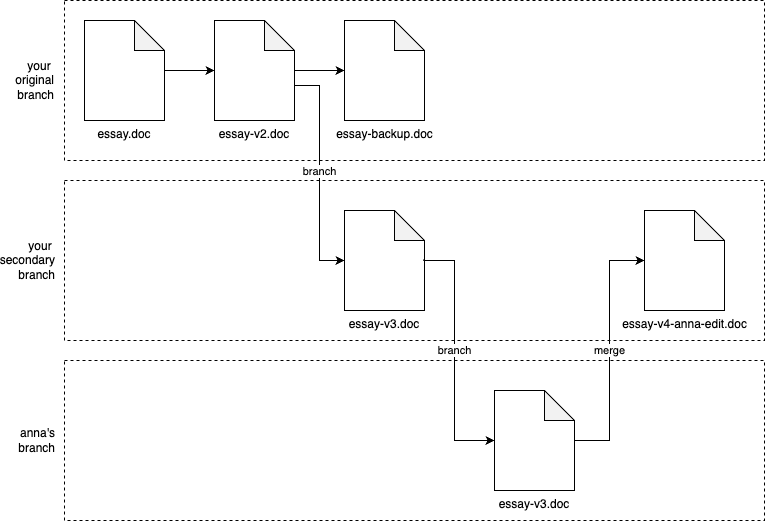

Believe it or not, what you’re doing is essentially version control. You could represent it with the diagram shown in Figure 30:

You start with essay.doc, and after some major edits, you commit your changes to a new revision called essay-v2.doc. Then, you realize that you need to break off in a new direction, so you could say that you’ve created a new branch from you original work, and in that new branch, you commit another new revision called essay-v3.doc. When you email Anna essay-v3.doc, and she starts her work, you could say that she’s working in yet another branch. When she emails you back, you manually merge the work in your branch and her branch together to create essay-v4-anna-edit.doc.

What you’ve just seen is the essence of version control: commits, branches, and merges. Admittedly, manually emailing and renaming Word docs isn’t a very good version control system, but it is version control!

A better solution would be to use a first-class VCS, which can perform these operations more effectively. The basic idea with a VCS is as follows:

- Repositories

-

You store files (code, documents, images, etc.) in a repository (repo for short).

- Branches

-

You start with everything in a single branch, often called something like

main. At any time, you can create a new branch from any existing branch, and work in your own branch independently. - Commits

-

Within any branch, you can edit files, and when you’re ready to store your progress in a new revision, you create a commit with your updates. The commit typically records not only the changes to the files, but also who made the changes, and a commit message that describes the changes.

- Merges

-

At any time, you can merge branches together. For example, it’s common to create a branch from

main, work in that branch for a while, and then merge your changes back intomain. - Conflicts

-

VCS tools can merge some types of changes completely automatically, but if there is a conflict (e.g., two people changed the same line of code in different ways), the VCS will ask you to resolve the conflict manually.

- History

-

The VCS tracks every commit in every branch in a commit log, which lets you see the full history of how the code changed, including all previous revisions of every file, what changed between each revision, and who made each change.

Learning about all these terms and concepts is useful, but really, the best way to understand version control is to try it out, as in the next section.

Example: A Crash Course on Git

There have been many version control systems developed over the years, including CVS, Subversion, Perforce, and Mercurial, but these days, the most popular, by far, is Git. According to the 2022 StackOverflow Developer Survey, 93% of developers use Git (96% if you look solely at professional developers). So if you’re going to learn one VCS, it should be Git.

Git basics

If you don’t have it already installed, follow these docs to install Git on your computer. Next, let Git know your name and email:

$ git config --global user.name "<YOUR NAME>"

$ git config --global user.email "<YOUR EMAIL>"Create a new, empty folder on your computer just for experimenting with Git. For example, you could create a folder called git-practice within the system temp folder:

$ mkdir /tmp/git-practice

$ cd git-practiceWithin the git-practice folder, write some text to a file called example.txt:

$ echo 'Hello, World!' > example.txtIt would be nice to have some version control for this file. With Git, that’s easy. You can turn any folder into a Git

repo by running git init:

$ git init

Initialized empty Git repository in /tmp/git-practice/.git/The contents of your folder should now look something like this:

$ tree -aL 1

.

├── .git

└── example.txtYou should see your original example.txt file, plus a new .git folder. This .git folder is where Git will record all the information about your branches, commits, revisions, and so on.

At any time, you can run the git status command to see the status of your repo:

$ git status

On branch main

No commits yet

Untracked files:

(use "git add <file>..." to include in what will be committed)

example.txtThe status command is something you’ll run often, as it gives you several useful pieces of information: what branch

you’re on (main is the default branch when you create a new Git repo); any commits you’ve made; and any changes

that haven’t been committed yet. To commit your changes, you first need to add the file(s) you want to commit to the

staging area using git add:

$ git add example.txtTry running git status one more time:

$ git status

On branch main

No commits yet

Changes to be committed:

(use "git rm --cached <file>..." to unstage)

new file: example.txtNow you can see that example.txt is in the staging area, ready to be committed. To commit the changes, use the

git commit command, passing in a description of the commit via the -m flag:

$ git commit -m "Initial commit"Git is now storing example.txt in its commit log. You can see this by running git log:

$ git log

commit 7a536b3367981b5c86f22a27c94557412f3d915a (HEAD -> main)

Author: Yevgeniy Brikman

Date: Sat Apr 20 16:01:28 2024 -0400

Initial commitFor each commit in the log, you’ll see the commit ID, author, date, and commit message. Take special note of the commit ID: each commit has a different ID that you can use to uniquely identify that commit, and many Git commands take a commit ID as an argument. Under the hood, a commit ID is calculated by taking the SHA-1 hash of the contents of the commit, all the commit metadata (author, date, and so on), and the ID of the previous commit.[24] Commit IDs are 40 characters long, but in most commands, you can use just the first 7 characters, as that will be unique enough to identify commits in all but the largest repos.

Let’s make another commit. First, make a change to example.txt:

$ echo 'New line of text' >> example.txtThis adds a second line of text to example.txt. Run git status once again:

$ git status

On branch main

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git restore <file>..." to discard changes in working directory)

modified: example.txtNow Git is telling you that it sees changes locally, but these changes have not been added to the staging area. To see

what these changes are, run git diff:

$ git diff

diff --git a/example.txt b/example.txt

index 8ab686e..3cee8ec 100644

--- a/example.txt

+++ b/example.txt

@@ -1 +1,2 @@

Hello, World!

+New line of textYou should use git diff frequently to check what changes you’ve made before committing them. If the changes look good,

use git add to stage the changes and git commit to commit them:

$ git add example.txt

$ git commit -m "Add another line to example.txt"Now, try git log once more, this time adding the --oneline flag to get more concise output:

$ git log --oneline

02897ae (HEAD -> main) Add another line to example.txt

0da69c2 Initial commitNow you can see both of your commits, including their commit messages. This commit log is very powerful:

- Debugging

-

If something breaks in your code, the first question you typically ask is, "what changed?" The commit log gives you an easy way to answer that question.

- Reverting

-

You can use

git revert <ID>to automatically create a new commit that reverts all the changes in the commit with ID<ID>, thereby undoing the changes in that commit, while still preserving your Git history. Or you can usegit reset --hard <ID>to get rid of all commits after<ID>entirely, including removing them from history (use with care!). - Comparison

-

You can use

git diffto compare not only local changes, but also to compare any two commits. This lets you see how the code evolved, one commit at a time. - Author

-

You can use

git blameto annotate each line of a file with information about the last commit that modified that file, including the date, the commit message, and the author. Although you could use this to blame someone for causing a bug, as the name implies, the more common use case is to help you understand where any give piece of code came from, and why that change was made.

So far, all of your commits have been on the default branch (main), but in real-world usage, you typically do work

across multiple branches, which is the focus of the next section.

Git branching and merging

Let’s practice creating and merging Git branches. To create a new branch and switch to it, use the git checkout

command with the -b flag:

$ git checkout -b testing

Switched to a new branch 'testing'To see if it worked, you can use git status, as always:

$ git status

On branch testing

nothing to commit, working tree cleanYou can also use the git branch command at any time to see what branches are available and which one you’re on:

$ git branch

main

* testingAny changes you commit now will go into the testing branch. To try this out, modify example.txt once again:

$ echo 'Third line of text' >> example.txtNext, stage and commit your changes:

$ git add example.txt

$ git commit -m "Added a 3rd line to example.txt"If you use git log, you’ll see your three commits:

$ git log --oneline

5b1a597 (HEAD -> testing) Added a 3rd line to example.txt

02897ae (main) Add another line to example.txt

0da69c2 Initial commitBut that third commit is only in the testing branch. You can see this by using git checkout to switch back to the

main branch:

$ git checkout main

Switched to branch 'main'Check the contents of example.txt: it’ll have only two lines. And if you run git log, it’ll have only two commits.

So each branch gives you a copy of all the files in the repo, and you can modify them in that branch, in isolation, as

much as you want, without affecting any other branches.

Of course, working forever in isolation doesn’t usually make sense. You eventually will want to merge your work back

together. One way to do that with Git is to run git merge to merge the contents of the testing branch into the

main branch:

$ git merge testing

Updating c4ff96d..c85c2bf

Fast-forward

example.txt | 1 +

1 file changed, 1 insertion(+)You can see that Git was able to merge all the changes automatically, as there were no conflicts between the main and

testing branches. If you now check the contents of example.txt, it should have three lines in it, and if you run

git log, you’ll see three commits.

|

Get your hands dirty

Here are a few exercises you can try at home to get a better feel for using Git:

|

Now that you know how to run Git locally, on your own computer, you’ve already learned something useful: if nothing else, you now have a way to store revisions of your work that’s much more effective than essay-v2.doc, essay-v3.doc, etc. But to see the full power of Git, you’ll want to use it with other developers, which is the focus of the next section.

Example: Store your Code in GitHub

Git is a distributed VCS, which means that every team member can have a full copy of the repository, and do commits, merges, and branches, completely locally. However, the most common way to use Git is to pick one copy of the repository as the central repository that will act as your source of truth. This central repo is the one everyone will initially get their code from, and as you make changes, you always push them back to this central repo.

There are many ways to run such a central repo: the most common approach is to use a hosting service. These not only host Git repos for you, but they also provide a number of other useful features, such as web UIs, user management, development workflows, issue tracking, security tools, and so on. The most popular hosting services for Git are GitHub, GitLab, and BitBucket. Of these, GitHub is the most popular by far.

In fact, you could argue that GitHub is what made Git popular. GitHub provided a great experience for hosting repos and collaborating with team members, and it has become the de facto home for most open source projects. So if you wanted to use or participate in open source, you often had to learn to use Git, and before you knew it, Git and GitHub were the dominant players in the market. Therefore, it’s a good idea to learn to use not only Git, but GitHub as well.

Let’s push the example code you’ve worked on while reading this blog post series to GitHub. Go into the folder where you have your code:

$ cd fundamentals-of-devopsIf you’ve done all the examples up to this point, the contents of this folder should look something like this:

$ tree -L 2

.

├── ch1

│ ├── ec2-user-data-script

│ └── sample-app

├── ch2

│ ├── ansible

│ ├── bash

│ ├── packer

│ └── tofu

└── ch3

├── ansible

├── docker

├── kubernetes

├── packer

└── tofuTurn this into a Git repo by running git init:

$ git init

Initialized empty Git repository in /fundamentals-of-devops/.git/If you run git status, you’ll see that there are no commits and no files staged for commit:

$ git status

On branch main

No commits yet

Untracked files:

(use "git add <file>..." to include in what will be committed)

ch1/

ch2/

ch3/Before staging these files, you should create a new file in the root of the repo called .gitignore, with the contents shown in Example 63:

(1)

*.tfstate

*.tfstate.backup

*.tfstate.lock.info

(2)

.terraform

(3)

*.key

(4)

*.zip

(5)

node_modules

coverageThe .gitignore file specifies files you do not want Git to track:

| 1 | OpenTofu state should not be checked into version control. You’ll learn why not, and the proper way to store state files in Part 5. |

| 2 | The .terraform folder is used by OpenTofu as a scratch directory and should not be checked in. |

| 3 | The Ansible examples in earlier blog posts store SSH private keys locally in .key files. These are secrets, so they should be stored in an appropriate secret store (as you’ll learn about in Part 8 [coming soon]), and not in version control. |

| 4 | The lambda module from Part 3 creates a Zip file automatically. This is a

build artifact and should not be checked in. |

| 5 | The node_modules and coverage folders are also scratch directories that should not be checked in. You’ll learn more about these folders later in this post. |

I usually commit the .gitignore file first to ensure I don’t accidentally commit other files that don’t belong in version control:

$ git add .gitignore

$ git commit -m "Add .gitignore"With that done, you can use git add . to stage all the files and folders in your fundamentals-of-devops folder, and

then commit them:

$ git add .

$ git commit -m "Example code for first few chapters"The code is now in a Git repo on your local computer. Let’s now push it to a Git repo on GitHub.

If you don’t have a GitHub account already, sign up for one now (it’s free), and follow the authenticating on the command line docs to learn to authenticate to GitHub from your terminal.

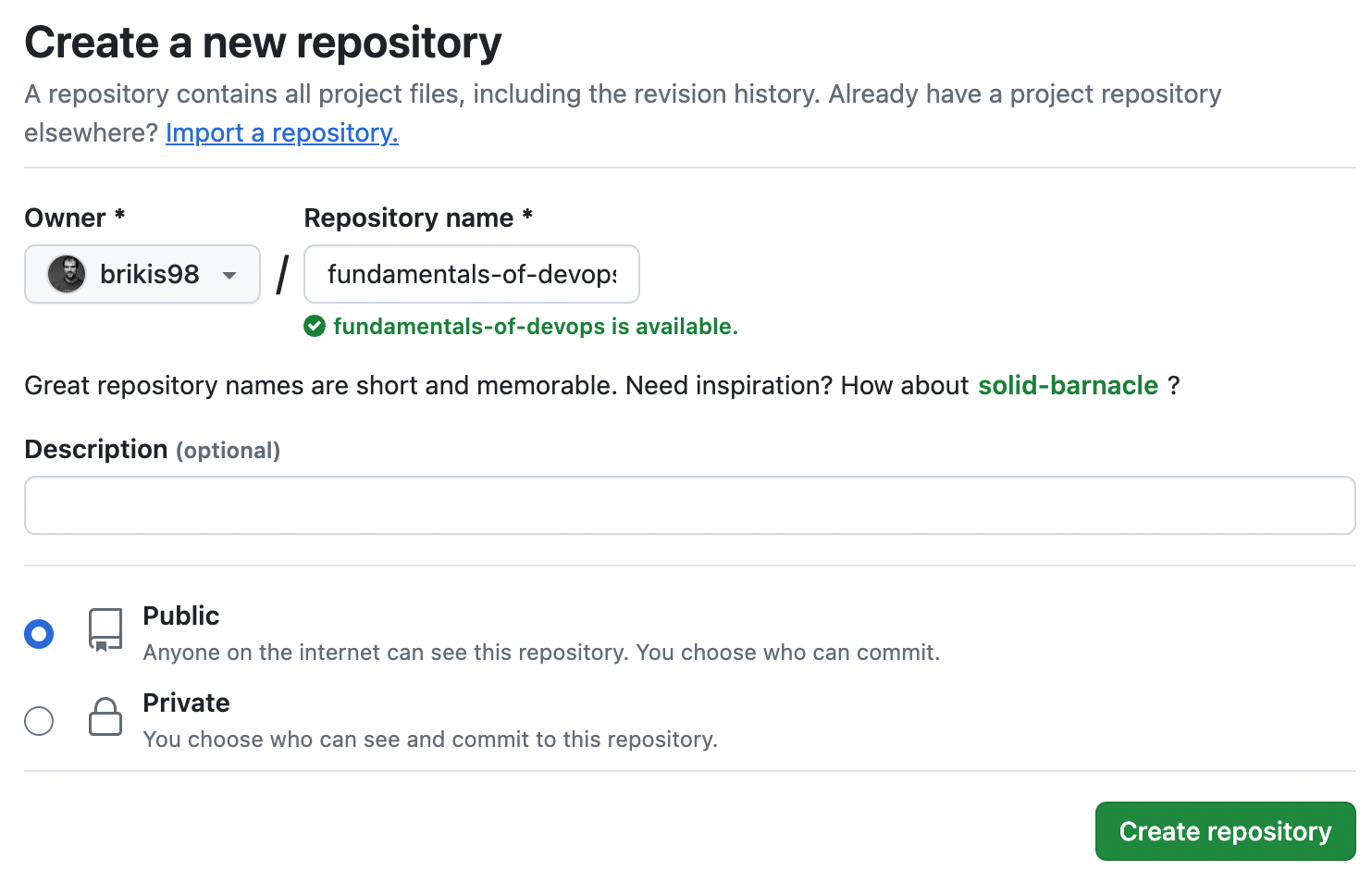

Next, create a new repository in GitHub, give it a name, make the repo public, and click "Create repository," as shown in Figure 31:

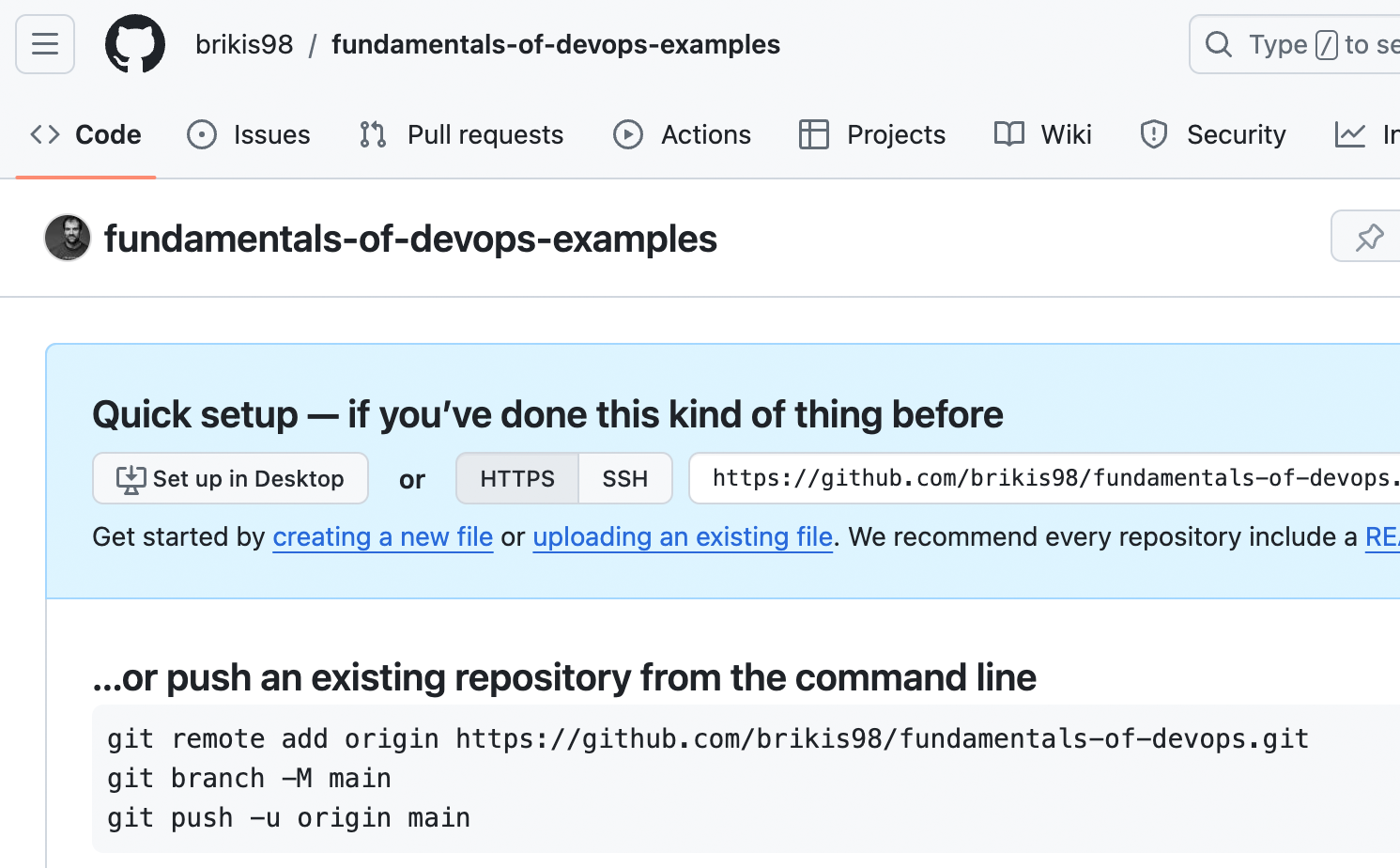

This will create a new, empty repo for you that looks something like Figure 32:

Copy and paste the git remote add command from that page to add a new remote, which is a Git repository hosted

remotely (i.e., somewhere on the Internet):

$ git remote add origin https://github.com/<USERNAME>/<REPO>.gitThe preceding command adds your GitHub repo as a remote named origin: you can name remotes whatever you want, but

origin is the convention for your team’s central repo, so you’ll see that used all over the place.

Now you can use git push <REMOTE> <BRANCH> to push the code from branch <BRANCH> of your local repo to the remote

named <REMOTE>. So to push your main branch to the GitHub repo you just created, you’d run the following:

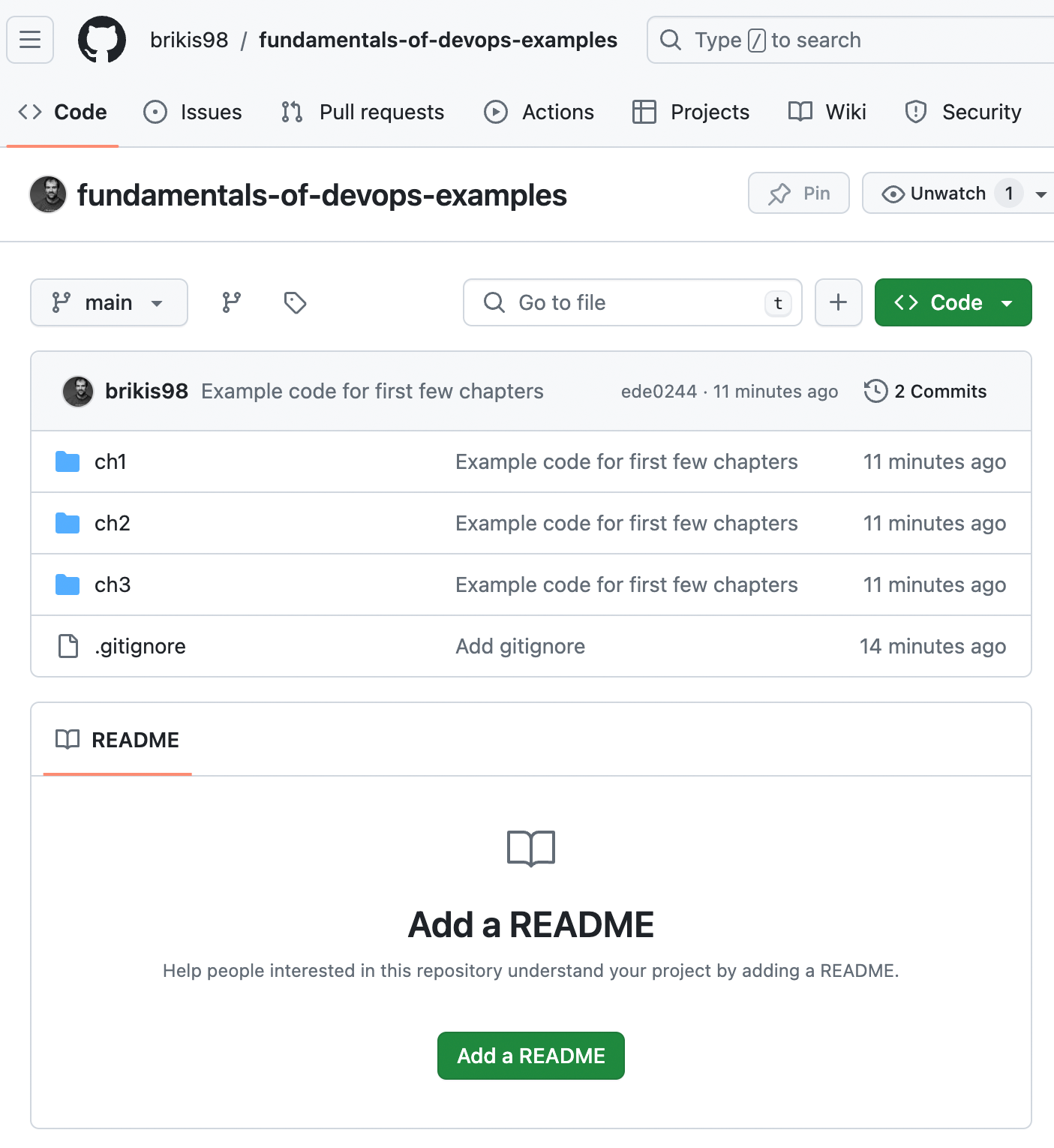

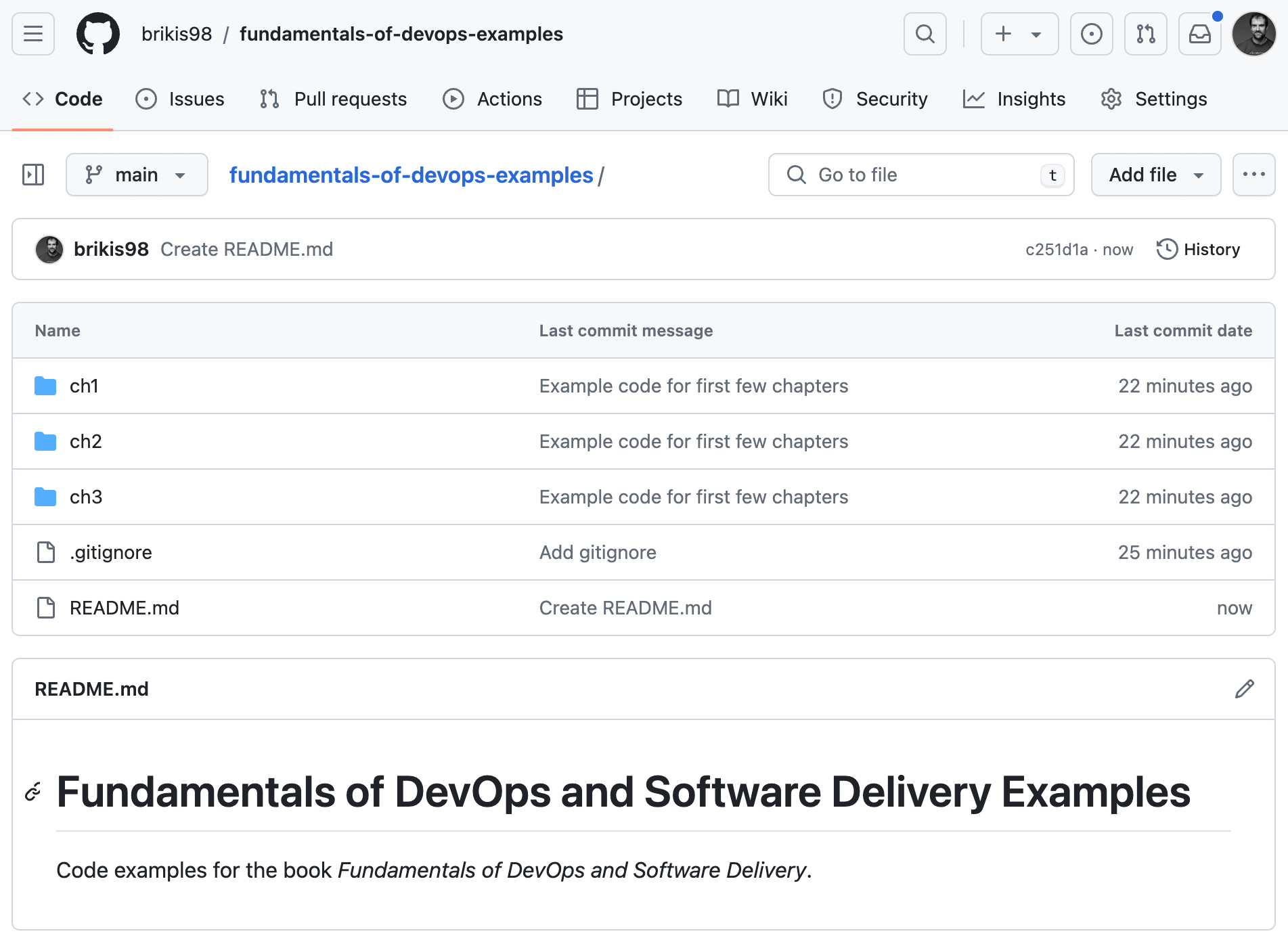

$ git push origin mainIf you refresh your repo in GitHub, you should now see your code there, as shown in Figure 33:

Congrats, you just learned how to push your changes to a remote endpoint, which gets you halfway there with being able to collaborate with other developers. Now it’s time to learn the other half, which is how to pull changes from a remote endpoint.

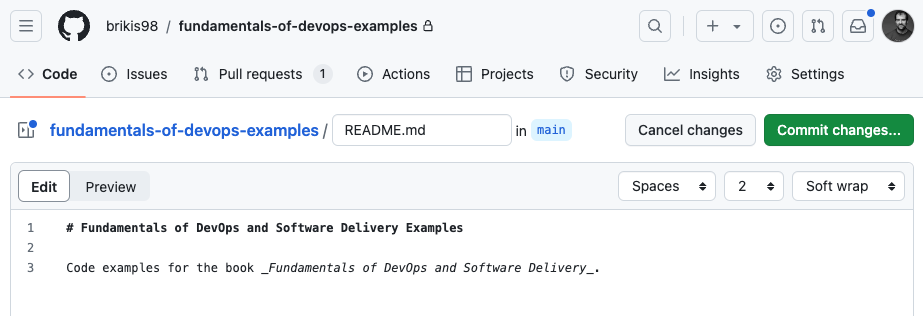

Notice how GitHub prompts you to "Add a README" to your new repo. Adding documentation for your code is always a good idea, so let’s do it. Click the green Add a README button, and you’ll get a code editor in your browser where you can write the README in Markdown, as shown in Figure 34:

Fill in a reasonable description for the repo and then click the "Commit changes…"

button. GitHub will prompt you for a commit message, so fill one in just like the -m flag on the command-line, and

click the "Commit changes" button. GitHub will commit the changes and then take you back to your repo, where you’ll be

able to see your README, as shown in Figure 35:

Notice that the repo in GitHub now has a README.md, but the copy on your own computer doesn’t. To get the latest code

onto your computer, run the git pull <REMOTE> <BRANCH> command, where <REMOTE> is the name of the remote to pull

from, and <BRANCH> is the branch to pull:

$ git pull origin mainThe log output should show you that Git was able to pull the changes in and a summary of what changed, which should include the new README.md file. Congrats, you now know how to pull changes from a remote endpoint!

Note that if you didn’t have a copy of the repo locally on your computer at all, you couldn’t just run git pull.

Instead, you first need to use git clone to checkout the initial copy of the repo (note: you don’t actually need to

run this command, as you have a copy of this repo already, but just be aware of this for working with other repos

in the future):

$ git clone https://github.com/<USERNAME>/<REPO>When you run git clone, Git will check out a copy of the repo <REPO> to a folder called <REPO> in your current

working directory. It’ll also automatically add the repo’s URL as a remote named origin.

So now you’ve seen the basic Git workflows:

-

git clone: Check out a fresh copy of a repo. -

git push origin <BRANCH>: Push changes from your local repo back to the remote repo, so all your other team members can see your work. -

git pull origin <BRANCH>: Pull changes from the remote repo to your local repo, so you can see the work of all your other team members.

This is the basic workflow, but what you’ll find is that many teams use a slightly different workflow to push changes: pull requests. This is the topic of the next section.

Example: Open a Pull Request in GitHub

A pull request (PR) is a request to merge one branch into another: in effect, you’re requesting that someone else

runs git pull on your repo/branch. GitHub popularized the PR workflow as the de facto way to make changes to open

source repos, and these days, many companies use PRs to make changes to private repos as well. The pull request

process is as follows:

-

You check out a copy of repo

R, create a branchB, and commit your changes to this branch. Note that if you have write access to repoR, you can create branchBdirectly in repoR. However, if you don’t have write access, which is usually the case if repoRis an open source repo in someone else’s account, then you first create a fork of repoR, which is a copy of the repo in your own account, and then you create branchBin your fork. -

When you’re done with your work in branch

B, you open a pull request against repoR, requesting that the maintainer of that repo merges your changes from branchBinto some branch in repoR(typicallymain). -

The owner of repo

Rthen uses GitHub’s PR UI to review your changes, provide comments and feedback, and ultimately, decide to either merge the changes in, or close the PR unmerged.

Let’s give it a shot. Create a new branch called update-readme in your repo:

$ git checkout -b update-readmeMake a change to the README.md file: for example, add a URL to the end of the file. Run git diff to see what your

changes look like:

$ git diff

diff --git a/README.md b/README.md

index 843bd01..ad75c5e 100644

--- a/README.md

+++ b/README.md

@@ -1,3 +1,5 @@

# Fundamentals of DevOps and Software Delivery Examples

Code examples for the book _Fundamentals of DevOps and Software Delivery_.

+

+https://www.fundamentals-of-devops.com/If the changes look good, add and commit them:

$ git add README.md

$ git commit -m "Add URL to README"Next, push your update-readme branch to the remote repo:

$ git push origin update-readmeYou should see log output that looks something like this:

remote: remote: Create a pull request for 'update-readme' on GitHub by visiting: remote: https://github.com/<USERNAME>/<REPO>/pull/new/update-readme remote:

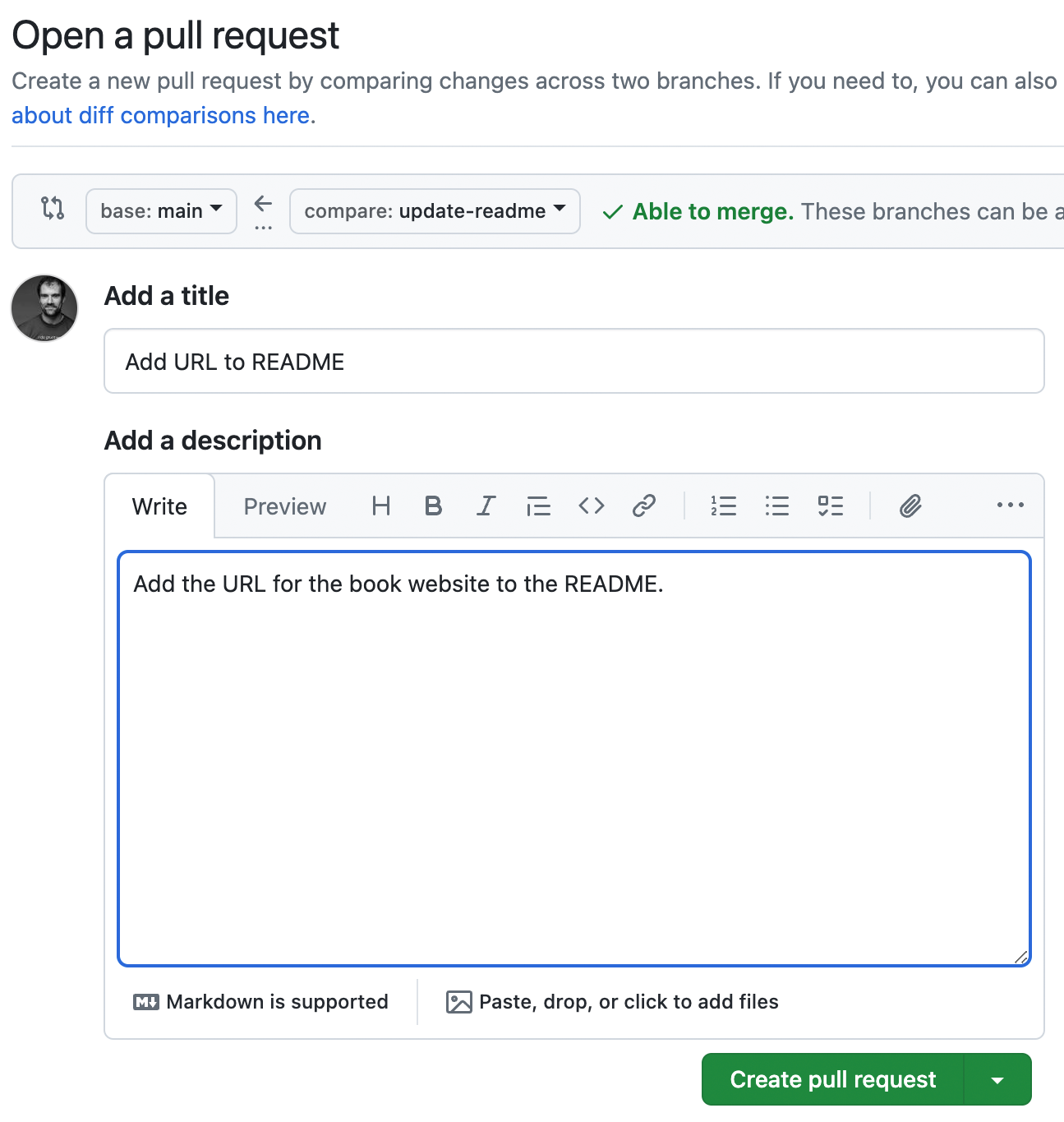

In the log output, GitHub conveniently shows you a URL for creating a pull request (you can also create PRs by going to the Pull Requests tab of your repo in a web browser and clicking the New Pull Request button). Open that URL in your web browser, and you should see a page that looks like Figure 36:

Fill in a title and description for the PR, then scroll down to see the changes between your branch and main,

which should be the same ones you saw when running git diff. If those changes look OK, click the "Create pull request"

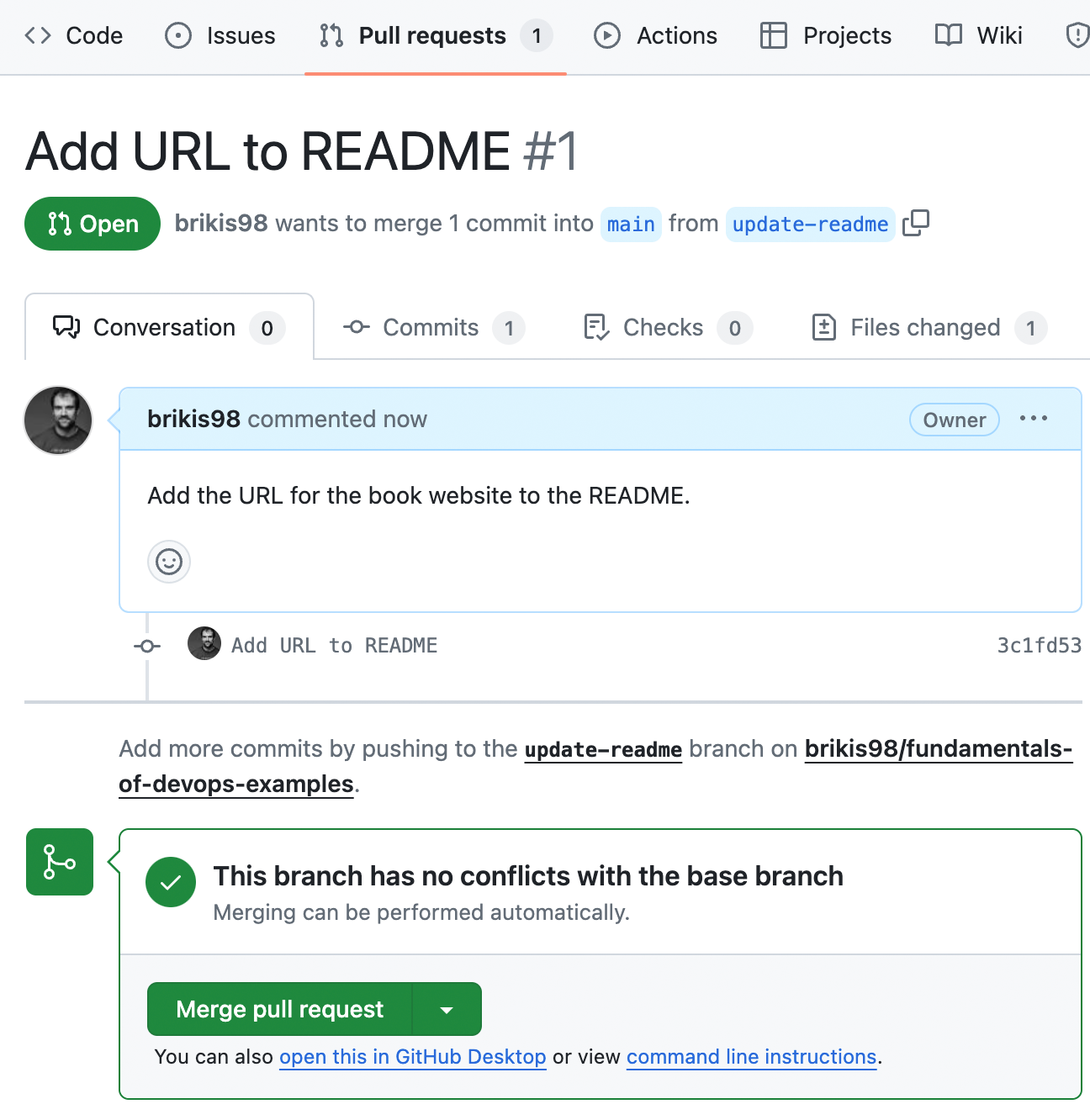

button, and you’ll end up on the GitHub PR UI, as shown in Figure 37:

You and all your team members can use this PR page to see the code changes (try clicking the "Files changed" tab

to get a view similar to git diff), discuss the changes, request reviewers, request changes, and so on. All of this

discussion gets stored in GitHub, so if later on, you’re trying to debug an issue or figure out why some code is the way

it is, these PR pages serve as an invaluable source of history and context. As you’ll see in Part 5,

this is also the place where a lot of the CI / CD integration will happen.

If the PR looks good, click "Merge pull request," and then "Confirm merge," to merge the changes in.

Version Control Best Practices

Now that you understand the basics of version control, let’s discuss the best practices:

-

Always use version control

-

Write good commit messages

-

Commit early and often

-

Use a code review process

-

Protect your code

Always use version control

The single most important best practice with version control is: use it.

|

Key takeaway #1

Always manage your code with a version control system. |

It’s easy, it’s cheap or free, and the benefits for software engineering are massive. If you’re writing code, always store that code in version control. No excuses.

Write good commit messages

Commit messages are important. When you’re trying to figure out what caused a bug or an outage, or staring at a

confusing piece of code, git log and git blame can be your best friends—but only if the commit messages are well

written. Good commit messages consist of two ingredients:

- Summary

-

The first line of the message should be a short (less than 50 characters), clear summary of the change.

- Context

-

If it’s a trivial change, a summary line can be enough, but for anything larger, put a new line after the summary line, and then provide more information to help future coders (including a future you!) understand the context. In particular, focus on what changed and why it changed, which is context that you can’t always get just by reading the code, rather than how, which should be clear from the code itself.

Here’s an example of such a commit message:

Fix bug with search auto complete A more detailed explaination of the fix, if necessary. Provide additional context that may not be obvious from just reading the code. - Use bullet points - If appropriate Fixes #123. Jira #456.

Just adding these two simple ingredients, summary and context, will make your commit messages already considerably better. If you’re able to follow these two rules consistently, you can level up your commit messages even further by following the instructions in How to Write a Good Commit Message. And for especially large projects and teams, you can also consider adopting Conventional Commits as a way to enforce even more structure in your commit messages.

Commit early and often

One of the keys to being a more effective programmer is learning how to take a large problem and break it down into small, manageable parts. As it turns out, one of the keys to using version control more effectively is learning to break down large changes into small, frequent commits.

In fact, there are two ideals to aim for:

- Atomic commits

-

Each commit should do exactly one, small, relatively self-contained thing. You should be able to describe that thing in one short sentence—which, conveniently, you can use as the commit message summary. If you can’t fit everything into one sentence, then the commit is likely doing too many things at once, and should be broken down into multiple commits. For example, instead of a single, massive commit that implements an entire large feature, aim for a series of smaller commits, where each one implements some logical slice of that feature: e.g., the backend logic in one commit, the UI logic in another commit, the search logic in another commit, and so on.

- Atomic PRs

-

Each pull request does exactly one small, relatively self-contained thing. A single PR can contain multiple commits, so it’ll naturally be larger in scope than any one commit, but it should still represent a single set of cohesive changes: that is, changes that naturally and logically go together. If you find that your PR contains a list of somewhat unrelated changes, that’s usually a sign you should break it up into multiple PRs. A classic example of this is a PR that contains a new feature, but along the way, the author of the PR also fixed a couple bugs they came across, and refactored a bunch of code. Leaving the code cleaner than how you found it (the Boy Scout Rule) is a good idea, but for the sanity of the developers who will be reviewing your PR and the commit log, put each of these changes into its own PR: that is, the refactor should go in one PR, the bug fixes each in their own PRs, and finally, the new feature in its own PR.

Atomic commits and atomic PRs are ideals, and sometimes you’ll fall short of them, but it’s always a good idea to strive towards these ideals, as they give you a number of benefits:

- More useful Git history

-

When you’re scanning your Git history, commits and PRs that do one thing are easier to understand than those that do many things.

- Less risk

-

A commit or PR that makes one atomic change is typically less risky, as it’s often self-contained enough that you could revert it, whereas commits and PRs that contain dozens of changes can be harder to revert, and therefore, riskier to merge in the first place.

- Cleaner mental model

-

To work in atomic units, you typically have to break the work down ahead of time. This often produces a cleaner mental model of the problem, and ultimately, better results.

- Easier code reviews

-

Reviewing small, self-contained changes is easy. Reviewing massive PRs with thousands of lines of unrelated changes is hard.

- Lower risk of data loss

-

Doing more frequent commits can be a lifesaver. For example, if you commit (and push) your changes hourly, then if your laptop dies, it’s an annoying, but minor loss, whereas if you commit only once every few days, it’s a huge setback.

- Less risky refactors

-

Committing more frequently gives you the ability to quickly and safely explore new directions. For example, you might try a major refactor, and after many hours of work, realize it’s not working; if you made small commits all along the way, you can revert to any previous point instantly, whereas if you didn’t, you may have to undo your refactor manually, which is can be more time-consuming and error-prone.

- More frequent integration

-

As you’ll see in Part 5, one of the keys to working effectively as a team is to integrate your changes together regularly, and this tends to be easier if everyone is doing small, frequent commits.

|

Get your hands dirty

It’s normal not to get your commits and commit messages quite right initially: often, you don’t know how to break something up into smaller, atomic parts until after you’ve done the whole thing. That’s OK! Learn to use the following Git tools to update your commit history and clean things up before sharing them with your team:

|

Use a code review process

Every page in this book has been checked over by an editor. Why? Because even if you’re the smartest, most capable, most experienced writer, you can’t proofread your own work. You’re too close to the concepts, and you’ve rolled the words around your head for so long you can’t put yourself in the shoes of someone who is hearing them for the first time. Writing code is no different. In fact, if it’s impossible to write prose without independent scrutiny, surely it’s also impossible to write code in isolation; code has to be correct to the minutest detail, plus it includes prose for humans as well!

Making Software: What Really Works, and Why We Believe It by Andy Oram and Greg Wilson (O’Reilly Media)

Having your code reviewed by someone else is a highly effective way to catch bugs, reducing defect rates by as much as 50-80%.[25] Code reviews are also an efficient mechanism to spread knowledge, culture, training, and a sense of ownership throughout the team.

There are different ways of doing code reviews:

- Enforce a pull request workflow

-

One option is to enforce that all changes are done through pull requests (you’ll see a way to do that in the next section), so that the maintainers of each repo can asynchronously review each change before it gets merged.

- Use pair programming

-

Another option is to use pair programming, a development technique where two programmers work together at one computer, with one person as the driver, responsible for writing the code, and the other as the observer, responsible for reviewing the code and thinking about the program at a higher level (the programmers regularly switch roles). The result is a constant code review process. It takes some time to get used to it, but with another developer nearby, you are always focused on how to make it clear what your code is doing, and you have a second set of eyes to catch bugs. Some companies use pair programming for all their coding, while others use it on an as-needed basis (e.g., for really complex tasks or ramping up a new hire).

- Use formal inspections

-

A third option is to schedule a live meeting for a code review where you present the code to multiple developers, and go through it together, line-by-line. You can’t do this degree of scrutiny for every line of code, but for mission critical parts of your systems, this can be a very effective way to catch bugs and get everyone on the same page.

Whatever process you pick for code reviews, you should define your code review guidelines up front, so you have a process that is consistent and repeatable across the entire team: that is, everyone knows what sorts of things to look for (e.g., automated tests), what sorts of things not to look for (e.g., code formatting, which should be automated), and how to communicate feedback effectively. For an example, have a look at Google’s Code Review Guidelines.

Protect your code

For many companies these days, the code you write is your most important asset—your secret sauce. Moreover, it’s also a highly sensitive asset: if someone can slip some malicious code into your codebase, it can be devastating, as that will bypass most other security protections you have in place.

Therefore, you should consider enabling the following security measures to protect your code:

- Signed commits

-

By default, Git allows you to set your name and email address to any value you want, as shown in Example 64:

Example 64. Git allows you to set your username and email to any values you want$ git config user.name "Any name you want" $ git config user.email "Any email you want"Git doesn’t enforce any checking around these values, so you could make commits pretending to be someone else! Fortunately, most VCS hosts (GitHub, GitLab, etc.) allow you to enforce signed commits on your repos, where they reject any commit that doesn’t have a valid cryptographic signature. Typically, the way that it works is you create a GPG key, which consists of a public and private key pair. You store the private key on your own computer, where only you can access it, and you configure Git to use the private key to sign your commits. Next, you upload the public key to your GitHub account, enable GitHub’s commit signature verification, and then GitHub will use this public key to check that any commit that claims to be from you actually has a valid signature, rejecting any commits that don’t.

- Branch protection

-

Most VCS hosts (GitHub, GitLab, etc.) allow you to enable branch protection, where you can enforce certain requirements before code can be pushed to certain branches (e.g.,

main). For example, you could use GitHub’s protected branches to require that all code changes to themainbranch are (a) submitted via pull requests, (b) those pull requests are reviewed by at least N other developers, and (c) certain checks, such as security scans, pass before those pull requests can be merged. This way, even if an attacker compromises one of your developer accounts, they still won’t be able to get code merged intomainwithout other developers seeing it and security scanners checking it.

Now that you know how to use a version control system to help everyone on your team work on the same code, the next step is ensure that you’re all working on that code in the same way: that is, building the code the same way, running the code the same way, using the same dependencies, and so on. This is where build system can come in handy, which is the focus of the next section.

Build System

Most software projects use a build system to automate important operations, such as compiling the code, downloading dependencies, packaging the app for deployment, running automated tests, and so on. The build system serves two audiences: the developers on your team, who run the build steps as part of local development, and various scripts, which run the build steps as part of automating your software delivery process.

|

Key takeaway #2

Use a build system to capture, as code, important operations and knowledge for your project, in a way that can be used both by developers and automated tools. |

The reality is that for most software projects, you can’t not have a build system: either you use an off-the-shelf build system, or you end up creating your own out of ad hoc scripts, duct tape, and glue. I would recommend the former.

There are many off-the-shelf build tools out there. Some were originally designed for use with a specific programming language or framework: for example, Rake for Ruby, Gradle and Maven for Java, SBT for Scala, and NPM for JavaScript (Node.js). There are also some build tools that are largely language agnostic, such as Bazel, and the grandaddy of all build systems, Make.

Usually, the language-specific tools will give you the best experience with that language; I’d only go with the language-agnostic ones like Bazel in specific circumstances, such as massive teams that use dozens of different languages in a single repo (and these days, I wouldn’t recommend Make at all). For the purposes of the blog post series, since the sample app you’ve used in previous parts is JavaScript (Node.js), let’s give NPM a shot, as per the next section.

Example: Configure your Build Using NPM

|

Example Code

As a reminder, you can find all the code examples in the blog post series’s sample code repo in GitHub. |

Let’s set up NPM as the build system for the Node.js sample app you’ve been using throughout the blog post series. Head into the folder you created for running examples in this series, and create a new subfolder for this blog post and the sample app:

$ cd fundamentals-of-devops

$ mkdir -p ch4/sample-app

$ cd ch4/sample-appCopy the app.js file you first saw in Section 1.2.1 into the sample-app folder:

$ cp ../../ch1/sample-app/app.js .Next, make sure you have Node.js installed, which should also install NPM for you. To use

NPM as a build system, you must first configure the build in a package.json file. You can create this file by hand,

or you can let NPM scaffold out an initial version of this file for you by running npm init:

$ npm initNPM will prompt you for information such as the package name, version, description, and so on. You can enter whatever data you want here, or hit ENTER to accept the defaults. When you’re done, you should have a package.json file that looks similar to Example 65:

{

"name": "sample-app",

"version": "1.0.0",

"description": "Sample app for 'Fundamentals of DevOps and Software Delivery'",

"scripts": {

"test": "echo \"Error: no test specified\" && exit 1"

}

}Most of this initial data is metadata about the project. The part to focus on is the scripts object. NPM has a number

of built-in commands, such as npm install, npm start, npm test,

and so on. All of these have default behaviors, but in most cases, you can define what these commands should do by

adding them to the scripts block. For example, npm init gives you an initial test command in the scripts block

that just exits with an error; you’ll change this value later in the blog post, when we discuss

automated testing.

For now, update the scripts block with a start command, which will define how to start your app, as shown in

Example 66:

"scripts": {

"start": "node app.js",

},Now you can use the npm start command to run your app:

$ npm start

> sample-app@1.0.0 start

> node app.js

Listening on port 8080Being able to use npm start over node app.js may not seem like much of a win, but there’s a big difference:

npm start is a well-known convention. Most Node.js and NPM users know to use npm start on a project. Most tools

that work with Node.js know about it as well: e.g., if you had this package.json with a start command back in

Part 1, Fly.io would’ve automatically used it to start the app, without you having to tell it what

command to run.

You might know to run node app.js to start this app, but the other members of your team might not—especially as the

project grows larger, and instead of a single app.js file, there are thousands of source files, and it’s not

obvious which one to run. You could document the command in a README, but there’s a good risk it’ll go out of date.

By capturing this in your build system, which you run regularly, you are capturing this information in a way that is

more likely to be kept up-to-date. And as a bonus, you’re capturing in a way that both other developers (humans) and

other tools (automation) can leverage.

Of course, start isn’t the only command you would add. The idea would be to add all the common operations on your

project to the build. For example, in Section 3.4.1, you created a Dockerfile to package the app as

a Docker image, and in order to build that Docker image for multiple CPU architectures (e.g., ARM64, AMD64), you had to

use a relatively complicated docker buildx command. This is a great thing to capture in your build.

First, copy the Dockerfile shown in Example 67 into the sample-app folder:

FROM node:21.7

WORKDIR /home/node/app

(1)

COPY package.json .

COPY app.js .

EXPOSE 8080

USER node

(2)

CMD ["npm", "start"]This is identical to the Dockerfile you saw in Section 3.4.1, except for two changes:

| 1 | Copy the package.json file into the Docker image, in addition to app.js. |

| 2 | Use npm start to start the app, rather than hard-code node app.js. This way, if you ever change how you run the

app—which you will later in this blog post—the only thing you’ll need to update is package.json. |

Second, create a script called build-docker-image.sh with the contents shown in Example 68:

#!/usr/bin/env bash

set -e

(1)

name=$(npm pkg get name | tr -d '"')

version=$(npm pkg get version | tr -d '"')

(2)

docker buildx build \

--platform=linux/amd64,linux/arm64 \

--load \

-t "$name:$version" \

.This script does the following:

| 1 | Use npm pkg get to read the values of name and version from package.json. This ensures

package.json is the single location where you manage the name and version of your app. |

| 2 | Run the same docker buildx command as before, setting the Docker image name and version to

the values from (1). |

|

You must enable containerd for images

In order to use the |

Next, make the script executable:

$ chmod u+x build-docker-image.shFinally, add a dockerize command to the scripts block in package.json that executes build-docker-image.sh, as

shown in Example 69:

dockerize command to the scripts block (ch4/sample-app/package.json) "scripts": {

"start": "node app.js",

"dockerize": "./build-docker-image.sh",

},Now, instead of trying to figure out a long, esoteric docker buildx command, members of your team can execute

npm run dockerize (note that you need npm run dockerize and not just npm dockerize as dockerize is custom

command, and not one of the ones built-into NPM):

$ npm run dockerize

> sample-app@v4 dockerize

> docker buildx build --platform=linux/amd64,linux/arm64 -t sample-app:$(npm pkg get version | tr -d '"') .

[+] Building 0.5s (14/14) FINISHED

=> [internal] load build definition from Dockerfile

(... truncated ...)

=> [linux/amd64 4/4] COPY app.js .

=> [linux/arm64 4/4] COPY app.js .Now that you know how to use a build system to automate parts of your workflow, let’s talk about another key responsibility of most built systems: managing dependencies.

Dependency Management

Most software projects these days rely on a large number of dependencies: that is, other software packages and libraries that your code uses. There are many kinds of dependencies:

- Code in the same repo

-

You may choose to break up the code in a single repo into multiple modules or packages (depending on the programming language you’re using), and to have these modules depend on each other. This lets you develop different parts of your codebase in isolation from the others, possibly with completely separate teams working on each part.

- Code in different repos

-

Your company may store code across multiple repos. This gives you even more isolation between the different parts of your software and makes it even easier for separate teams to take ownership for each part. Typically, when repo A depends on code in repo B, you depend on a specific version of that code. This version may correspond to a specific Git tag, or it could depend on a versioned artifact published from that repo: e.g., a Jar file in the Java world or a Ruby Gem in the Ruby world.

- Open source code

-

Perhaps the most common type of dependency these days is a dependency on open source code. The 2024 Open Source Security and Risk Analysis Report by Synopsis found that 96% of codebases rely on open source and that 70% of all the code in those codebases originates from open source! The open source code almost always lives in separate repos, so again, you’ll typically depend on a specific version of that code.

Whatever type of dependency you have, there’s a common theme here: you use a dependency so that you can rely on someone else to solve certain problems for you, instead of having to solve everything yourself from scratch (and maintain it all). If you want to maximize that leverage, make sure to never copy & paste dependencies into your codebase. If you copy & paste a dependency, you run into a variety of problems:

- Transitive dependencies

-

Copy/pasting a single dependency is easy, but if that dependency has its own dependencies, and those dependencies have their own dependencies, and so on (collectively known as transitive dependencies), then copy/pasting becomes rather hard.

- Licensing

-

Copy/pasting may violate the license terms of that dependency, especially if you end up modifying that code because it now sits in your own repo. Be especially aware of dependencies that uses GPL-style licenses (known as copyleft or viral licenses), for if you modify the code in those dependencies, that may trigger a clause in the license that requires you to release your own code under the same license (i.e., you’ll be forced to open source your company’s proprietary code!).

- Staying up to date

-

If you copy/paste the code, to get any future updates, you’ll have to copy/paste new code, and new transitive dependencies, and make sure you don’t lose any changes your team members made along the way.

- Private APIs

-

You may end up using private APIs (since you can access those files locally) instead of the public ones that were actually designed to be used, which can lead to unexpected behavior, and make staying up to date even harder.

- Bloating your repo

-

Every dependency you copy/paste into your version control system makes it larger and slower.

The proper way to use dependencies is with a dependency management tool. Most build systems have dependency management tools built in, and the way they typically work is you define your dependencies as code, in the build configuration, including the version of the dependency you’re using, and the dependency management tool is then responsible for downloading that dependency, plus any transitive dependencies, and making it available to your code.

|

Key takeaway #3

Use a dependency management tool to pull in dependencies—not copy & paste. |

Let’s try out an example with the Node.js sample app and NPM.

Example: Add Dependencies in NPM

So far, the Node.js sample app you’ve been using has not had any dependencies other than the http standard library

built into Node.js itself. Although you can build web apps this way, the more common approach is to use some sort of

web framework. For example, Express is a popular web framework for Node.js. To use it,

you can run:

$ npm install express --saveIf you look into package.json, you will now have a new dependencies section, as shown in

Example 70:

"dependencies": {

"express": "^4.19.2"

}You should also see two other changes in the folder with the package.json file:

- node_modules

-

This is a scratch directory where NPM downloads dependencies. This folder should be in your .gitignore file so you do not check it into version control. Instead, whenever anyone checks out the repo the first time, they can run

npm install, and NPM will install all the dependencies they need automatically. - package-lock.json

-

This is a dependency lock file: it captures the exact dependencies that were installed. This is useful because in package.json, you can specify a version range instead of a specific version to install. For example, you may have noticed that

npm installset the version of Express to^4.19.2. Note the caret (^) at the front: this is called a caret version range, and it allows NPM to install any version of Express at or above 4.19.2 (so 4.20.0 would be OK, but not 5.0.0). So every time you runnpm install, you may get a new version of Express. This can be a good thing, as you may be picking up bug fixes or security patches. But it can also be frustrating in an automated environment, as your builds may not be reproducible. With a lock file, you can use thenpm cicommand instead ofnpm installto tell NPM to install the exact versions in the lock file, so the build output is exactly the same every time. You’ll see an example of this shortly.

Now that Express is installed, you can rewrite the code in app.js to use the Express framework, as shown in Example 71:

const express = require('express');

const app = express();

const port = 8080;

app.get('/', (req, res) => {

res.send('Hello, World!');

});

app.listen(port, () => {

console.log(`Example app listening on port ${port}`);

});This app listens on port 8080 and responds with "Hello, World!" just as before, but because you’re using a framework, it’ll be a lot easier to evolve this code into a real app by leveraging all the features built into Express: e.g., routing, templating, error-handling, middleware, security, and so on.

There’s one more thing you need to do now that the app has dependencies: you need to update the Dockerfile to install dependencies, as shown in Example 72:

npm install (ch4/sample-app/Dockerfile)FROM node:21.7

WORKDIR /home/node/app

(1)

COPY package.json .

COPY package-lock.json .

(2)

RUN npm ci --only=production

COPY app.js .

EXPOSE 8080

USER node

CMD ["npm", "start"]You need to make two changes to the Dockerfile:

| 1 | Copy not only package.json, but also package-lock.json into the Docker image. |

| 2 | Run npm ci, which, as mentioned earlier, is very similar to npm install, except it’s designed to do a

clean install, where it installs the exact dependencies in the lock file, without any changes. This is meant to

be used in automated environments, where you want repeatable builds. Note also the use of the --only=production

flag to tell NPM to only install the production dependencies. You can have a devDependencies block on

package.json where you define dependencies only used in the dev environment (e.g., tooling for automated testing,

as you’ll see in the next section), and there’s no need to bloat the Docker image with these dependencies. |

You can now try running npm start to check that the app runs with its use of the Express framework as a dependency,

or npm run dockerize to check that the Docker image still builds correctly.

|

Get your hands dirty

To avoid introducing too many new tools, I used NPM as a build system in this blog post, as it comes natively with Node.js. However, for production use cases, you may want to try out one of these more modern and robust build systems: |

Now that you’ve seen how a version control system allows your team members to work on the same code, and a build system allows your team members to work the same way, let’s turn our attention to one of the most important tools for allowing your team members to get work done quickly: automated tests.

Automated Testing

Programming can be scary. One of the underappreciated costs of technical debt is the psychological impact it has on developers. There are thousands of programmers out there who are scared of doing their jobs. Perhaps you’re one of them.

You get a bug report at three in the morning and after digging around, you isolate it to a tangled mess of spaghetti code. There is no documentation. The developer who originally wrote the code no longer works at the company. You don’t understand what the code is doing. You don’t know all the places it gets used. What you have here is legacy code.

Legacy code. The phrase strikes disgust in the hearts of programmers. It conjures images of slogging through a murky swamp of tangled undergrowth with leaches beneath and stinging flies above. It conjures odors of murk, slime, stagnancy, and offal. Although our first joy of programming may have been intense, the misery of dealing with legacy code is often sufficient to extinguish that flame.

Working Effectively with Legacy Code (Pearson)

Legacy code is scary. You’re afraid because you still have scars from the time you tried to fix one bug only to reveal three more; the "trivial" change that took two months; the tiny performance tweak that brought the whole system down and pissed off all of your coworkers. So now you’re afraid of your own codebase. That’s a tough place to be.

Fortunately, there is a solution: automated testing, where you write test code to validate that your production code works the way you expect it to. The main reason to write automated tests is not that tests prove the correctness of your code (they don’t) or that you’ll catch all bugs with tests (you won’t), but that a good suite of automated tests gives you the confidence to make changes quickly.

The key word is confidence: tests provide a psychological benefit as much as a technical one. If you have a good test suite, you don’t have to keep the state of the whole program in your head. You don’t have to worry about breaking other people’s code. You don’t have to repeat the same boring, error-prone manual testing over and over again. You just run a single test command and get rapid feedback on whether things are working.

|

Key takeaway #4

Use automated tests to give your team the confidence to make changes quickly. |

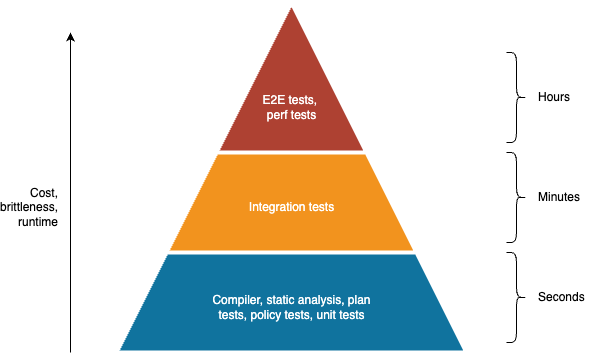

There are many types of automated tests:

- Compiler

-

If you’re using a statically-typed language (e.g., Java, Scala, Haskell, Go, TypeScript), you can compile the code to automatically identify (a) syntactic issues and (b) type errors. If you’re using a dynamically-typed language (e.g., Ruby, Python, JavaScript), you can pass the code through the interpreter to identify syntactic issues.

- Static analysis / linting

-

These are tools that read and check your code "statically"—that is, without executing it—to automatically identify potential issues. Examples: ShellCheck for Bash, ESLint for JavaScript, SpotBugs for Java, RuboCop for Ruby.

- Policy tests

-

In the last few years, policy as code tools have become more popular as a way to define and enforce company policies and legal regulations in code. Examples: Open Policy Agent, Sentinel, Intercept. Many of these tools are based on static analysis, except they give you flexible languages to define what sorts of rules you want to check. Some rely on plan testing, as described next.

- Plan tests

-

Whereas static analysis is a way to test your code without executing it at all, plan testing is a way to partially execute your code. This typically only applies to tools that can generate an execution plan without actually executing the code. For example, OpenTofu has a

plancommand that shows you what changes the code would make to your infrastructure without actually making those changes: so in effect, you are running all the read operations of your code, but none of the write operations. You can write automated tests against this sort of plan output using tools such as Open Policy Agent and Terratest. - Unit tests

-

This is the first of the test types that fully execute your code to test it. The idea with unit tests is to execute only a single "unit" of your code: what a unit is depends on the programming language, but it’s typically a small part of the code, such as one function or one class. You typically mock any dependencies outside of that unit (e.g., databases, other services, the file system), so that the test solely executes the unit in question. Some programming languages have unit testing tools built in, such as testing for Go and unittest for Python, whereas other languages rely on 3rd party tools for unit testing, such as JUnit for Java and Jest for JavaScript.

- Integration tests

-

Just because you’ve tested a unit in isolation and it works, doesn’t mean that multiple units will work when you put them together. That’s where integration testing comes in. Here, you test multiple units of your code (e.g., multiple functions or classes), often with a mix of real dependencies (e.g., a database) and mocked dependencies (e.g., a mock remote service).

- End-to-end (E2E) tests

-

End-to-end tests verify that your entire product works as a whole: that is, your run your app, all the other services you rely on, all your databases and caches, and so on, and test them all together. These often overlap with the idea of acceptance tests, which verify your product works from the perspective of the user or customer ("does the product solve the problem the user cares about").

- Performance tests

-

Most unit, integration, and E2E tests verify the correctness of a system under ideal conditions: one user, low system load, and no failures. Performance tests verify the stability and responsiveness of a system in the face of heavy load and failures.

Automated tests are how you fight your fear. They are how you fight legacy code. In fact, in the same book I quoted earlier, Michael Feathers writes, "to me, legacy code is simply code without tests." I don’t know about you, but I don’t want to add more legacy code to this world, so that means it’s time to write some tests!

Example: Add Automated Tests for the Node.js App

Consider the Node.js sample app you’ve been using in this blog post, as shown in Example 73:

const express = require('express');

const app = express();

const port = 8080;

app.get('/', (req, res) => {

res.send('Hello, World!');

});

app.listen(port, () => {

console.log(`Example app listening on port ${port}`);

});Stop for a second and ask yourself, how do you know this code actually works? So far, the way you’ve answered that

question is through manual testing, where you manually ran the app with npm start and checked URLs in your browser.

This works fine for a tiny, simple app, but once the app grows larger (hundreds of URLs to check) and your team grows

larger (hundreds of developers making changes), manual testing will become too time-consuming and error-prone.

The idea with automated testing is to write code that performs the testing steps for you, taking advantage of the fact that computers can perform these checks far faster and more reliably than a person. To get a feel for automated testing, let’s start with a simple example: a unit test.

Add unit tests for the Node.js App

To create a unit test, you need a unit of code. Let’s create a basic one just for learning in a new file called reverse.js with the contents shown in Example 74:

function reverseWords(str) {

return str

.split(' ')

.reverse()

.join(' ');

}

function reverseCharacters(str) {

return str

.split('')

.reverse()

.join('');

}

module.exports = {reverseCharacters, reverseWords};This code contains two functions:

-

reverseWordsreverses the words in a string. For example, if you pass in "hello world", you should get back "world hello". -

reverseCharactersreverses the characters in a string. For example, if you pass in "abcd", you should get back "dcba".

Let me ask the same question again: how do you know this code actually works? I suppose you could stare at the code for a while, simulate what it does in your head, and declare it as either working or not. But if you’ve ever written code, you’ve written buggy code, so you know that relying on the simulator in your head isn’t enough. You’ve got to test the code.

One option is to test the code manually. For example, you could fire up a REPL (read-eval-print-loop), which is an

interactive shell where you can manually execute code. For Node.js, you can get a REPL by running the node executable

with no arguments:

$ node

Welcome to Node.js v21.7.1.

Type ".help" for more information.

>You can now type code into this REPL, hit ENTER, and Node.js will execute the and show you the result:

> 2 + 2 4

You could use this to test the reverseWords function as follows:

> const reverse = require('./reverse')

undefined

> reverse.reverseWords('hello world')

'world hello'

(When you’re done experimenting with the REPL, use Ctrl+D to exit).

Hey, that seems to work! But this is hardly a thorough test, and doing this manually every time is time-consuming and error-prone. So let’s capture the steps you did in a REPL in an automated test.

First, you need to install some testing libraries: you’ll use Jest as a testing framework and, a

little later on, SuperTest as a library for testing HTTP apps. Use

npm install to add these dependencies, but this time, use the --save-dev flag to save them as dev dependencies;

this way, they’ll be available during development (where you run tests), but won’t be packaged into your app for

production deployments (where you don’t run tests):

$ npm install --save-dev jest supertestThis will update package.json with a new devDependencies section, as shown in Example 75:

{

"dependencies": {

"express": "^4.19.2"

},

"devDependencies": {

"jest": "^29.7.0",

"supertest": "^7.0.0"

}

}Next, update the test command in package.json to run Jest, as shown in Example 76:

"scripts": {

"test": "jest --verbose"

}Now you can start writing tests. Create a file called reverse.test.js with the contents shown in Example 77:

reverseWords function (ch4/sample-app/reverse.test.js)const reverse = require('./reverse');

describe('test reverseWords', () => { (1)

test('hello world => world hello', () => { (2)

const result = reverse.reverseWords('hello world'); (3)

expect(result).toBe('world hello'); (4)

});

});Here’s how this code works:

| 1 | You use the describe function to group several tests together. The first argument to describe is the

description of this group of tests and the second argument is a function that will run the tests for this group. |

| 2 | You use the test function to define individual tests. The first argument is the description of the test and the

second argument is the function that will run the test. |

| 3 | Call the reverseWords function and store the result in the variable result, just as you did in the REPL. |

| 4 | Use the expect matcher to check that the result matches "world hello". If it doesn’t match, fail the test. |

Use npm test to run this test:

$ npm test

> sample-app@v4 test

> jest --verbose

PASS ./reverse.test.js

test reverseWords

✓ hello world => world hello (1 ms)

Test Suites: 1 passed, 1 total

Tests: 1 passed, 1 total

Snapshots: 0 total

Time: 0.192 s, estimated 1 s

Ran all test suites.The test passed! And it took all of 0.192 seconds, which is a whole lot faster than manually typing in a REPL.

Add a second test for reverseWords as shown in Example 78:

reverseWords function (ch4/sample-app/reverse.test.js) test('trailing whitespace => whitespace trailing', () => {

const result = reverse.reverseWords('trailing whitespace ');

expect(result).toBe('whitespace trailing');

});Re-run npm test:

$ npm test

FAIL ./reverse.test.js

test reverseWords

✓ hello world => world hello (1 ms)

✕ trailing whitespace => whitespace trailing (1 ms)

● test reverseWords › trailing whitespace => whitespace trailing

expect(received).toBe(expected) // Object.is equality

Expected: "whitespace trailing"

Received: " whitespace trailing"

17 | test('trailing whitespace => whitespace trailing', () => {

18 | const result = reverse.reverseWords('trailing whitespace ');

> 19 | expect(result).toBe('whitespace trailing');

| ^

20 | });

at Object.toBe (reverse.test.js:19:20)Oops. Looks like the code has a bug after all: it doesn’t handle trailing whitespace correctly. A powerful benefit of automated testing is that you can create tests for all the various corner cases and find out if your code really does what you expect. The fix for this issue shown in Example 79:

reverseWords (ch4/sample-app/reverse.js)function reverseWords(str) {

return str

.trim() (1)

.split(' ')

.reverse()

.join(' ');

}| 1 | The fix is to use the trim function to strip leading and trailing whitespace. |

How can you be sure that this fix works? Re-run the tests!

$ npm test

PASS ./reverse.test.js

test reverseWords

✓ hello world => world hello (1 ms)

✓ trailing whitespace => whitespace trailingThis is a good example of the typical way you write code when you have a good suite of automated test to lean on: you make a change, you re-run the tests, you make another change, you rerun the tests again, and so on, adding new tests as necessary. With each iteration, your test suite gradually improves, you build more and more confidence in your code, and you can go faster and faster.

|

Key takeaway #5

Automated testing makes you more productive while coding by providing a rapid feedback loop: make a change, run the tests, make another change, re-run the tests, and so on. |

Rapid feedback loops are a big part of the DevOps methodology, and a big part of being more productive as a programmer. And this not only helps you fix the bug with whitespace faster, but now you also have a regression test in place that will prevent that bug from coming back. This is a massive boost to productivity that often gets overlooked.

|

Key takeaway #6

Automated testing makes you more productive in the future, too: you save a huge amount of time not having to fix bugs because the tests prevented those bugs from slipping through in the first place. |

Using code coverage tools to improve unit tests

A useful feature available with some automated testing tools is the ability to measure code coverage: that is, what

percent of your code got executed by your tests. If your tests are only executing 10% of your code, then how much

confidence can you have that your code really works, and that there aren’t bugs hiding in the other 90%? To have Jest

measure code coverage, add the --coverage flag to the test command in package.json, as shown in

Example 80:

"test": "jest --verbose --coverage"Run the tests one more time:

$ npm test

PASS ./reverse.test.js

test reverseWords

✓ hello world => world hello (1 ms)

✓ trailing whitespace => whitespace trailing

------------|---------|----------|---------|---------|-------------------

File | % Stmts | % Branch | % Funcs | % Lines | Uncovered Line #s

------------|---------|----------|---------|---------|-------------------

All files | 66.66 | 100 | 50 | 66.66 |

reverse.js | 66.66 | 100 | 50 | 66.66 | 22

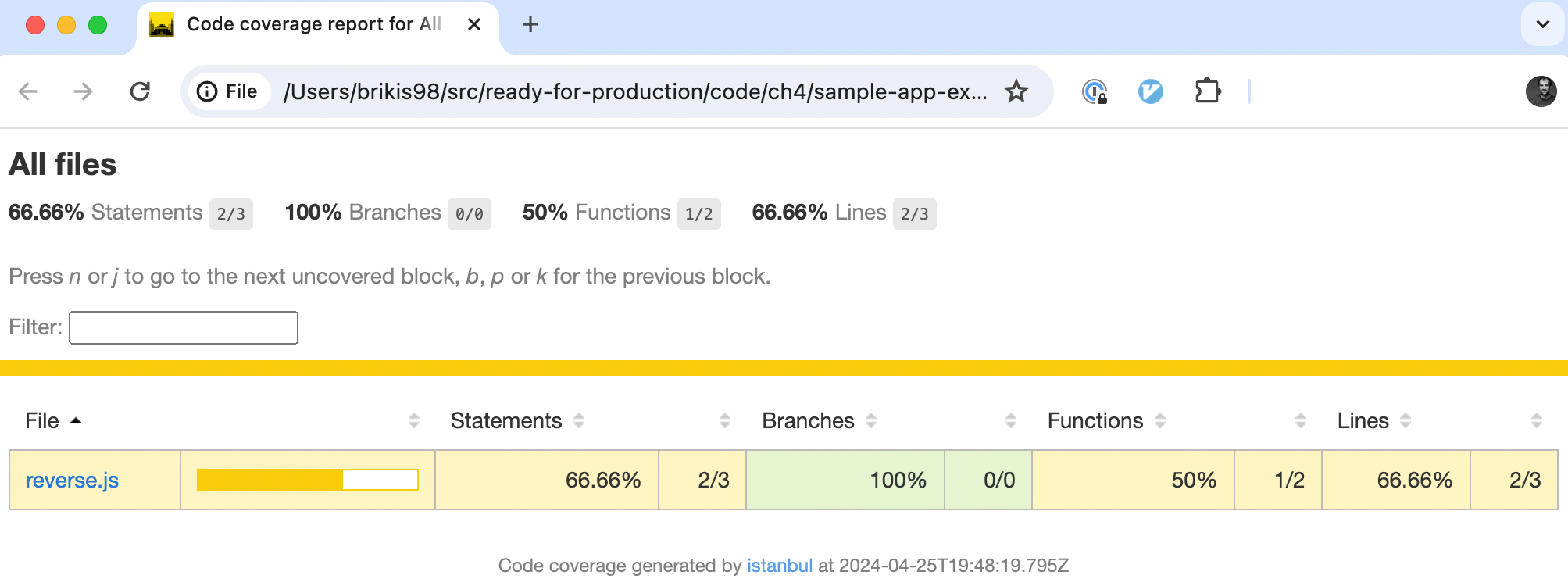

------------|---------|----------|---------|---------|-------------------You now get a table at the end of the output that shows each file and what percentage of the code was executed during the automated tests. You should also see a new coverage folder that contains even more information, including some HTML reports within coverage/lcov-report//fundamentals-of-devops. Try opening this file in a browser:

$ open coverage/lcov-report//fundamentals-of-devopsYou should see a page that looks similar to Figure 38:

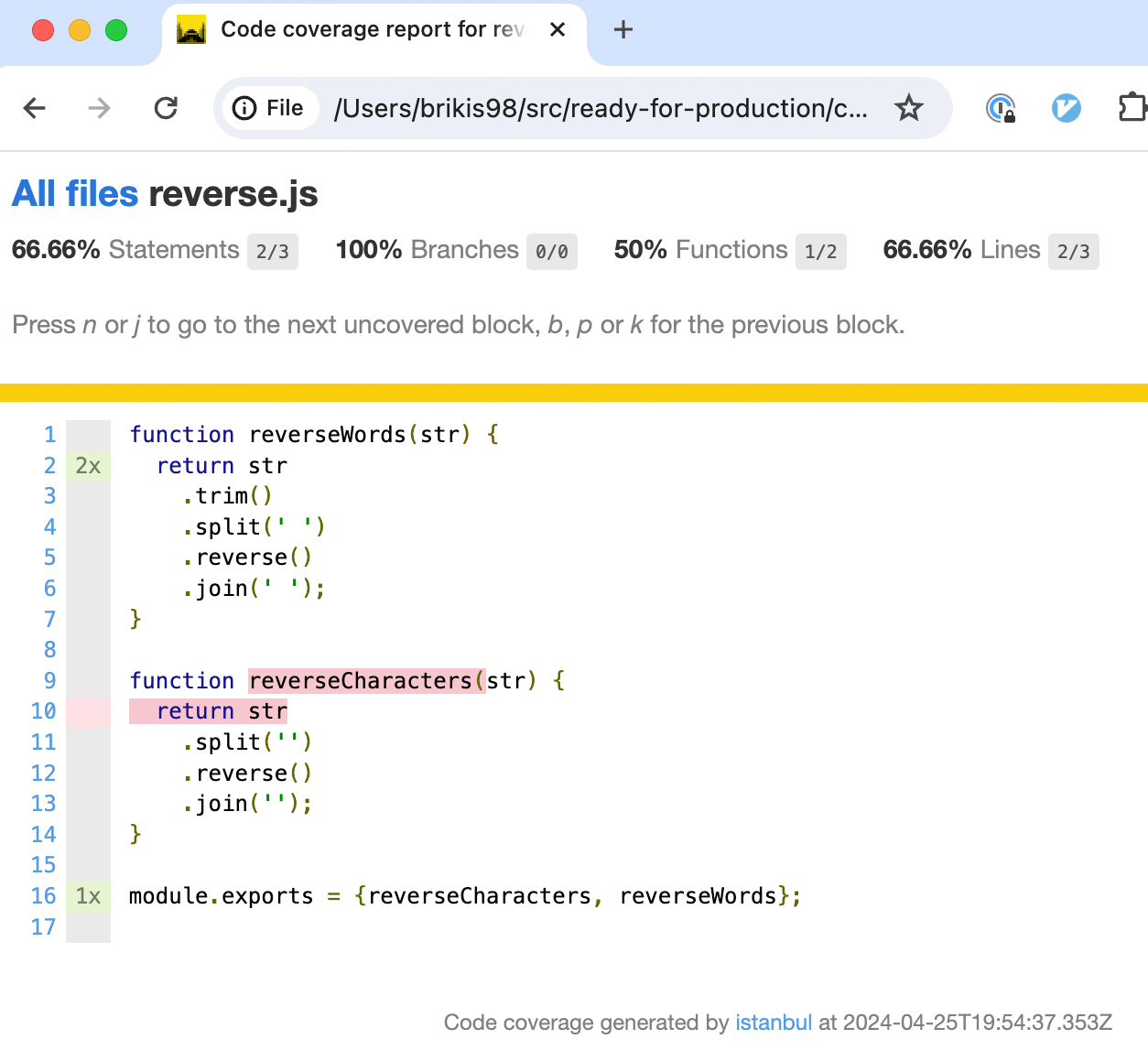

As you can see, you’re only testing about two-thirds of your code. To get more details into what you missed, click on reverse.js, and you should see something similar to Figure 39:

This page shows you your own code: the green "2x" and "1x" indicate those parts of the code were executed during

testing twice or once, respectively. The highlighted code in red tells you code that wasn’t executed at all: of course,

there are no tests for the reverseCharacters function!

Now that code coverage has helped you see where your tests are lacking, head into reverse.test.js, and add a new unit

test for reverseCharacters, as shown in Example 81:

reverseCharacters (ch4/sample-app/reverse.test.js)describe('test reverseCharacters', () => {

test('abcd => dcba', () => {

const result = reverse.reverseCharacters('abcd');

expect(result).toBe('dcba');

});

});Run the tests one more time:

$ npm test

PASS ./reverse.test.js

test reverseWords

✓ hello world => world hello (1 ms)

✓ trailing whitespace => whitespace trailing (1 ms)

test reverseCharacters

✓ abcd => dcba

------------|---------|----------|---------|---------|-------------------

File | % Stmts | % Branch | % Funcs | % Lines | Uncovered Line #s

------------|---------|----------|---------|---------|-------------------

All files | 100 | 100 | 100 | 100 |

reverse.js | 100 | 100 | 100 | 100 |

------------|---------|----------|---------|---------|-------------------And there you go, all tests are passing, and code coverage is now at 100%.

Add end-to-end tests for the Node.js App

Now that you have a feel for unit testing, let’s go back to the original problem of testing app.js, and add an end-to-end test for the app: that is, a test that makes an HTTP request to the app, and checks the response. To do that, you first need to split out the part of app.js that listens on a port into a separate file called server.js. That way, you’ll be able to run multiple copies of your app concurrently during automated testing without getting errors due to trying to listen on the same port.

First, update app.js to solely configure the Express app, and to export it, as shown in Example 82:

const express = require('express');

const app = express();

app.get('/', (req, res) => {

res.send('Hello, World!');

});

module.exports = app;Next, create a new file called server.js that imports the code from app.js and has it listen on a port, as shown in Example 83:

const app = require('./app');

const port = 8080;

app.listen(port, () => {

console.log(`Example app listening on port ${port}`);

});Make sure to update the start command in package.json to now use server.js instead of app.js, as shown in

Example 84:

"start": "node server.js",You should also update the Dockerfile to copy not only app.js, but server.js as well, as shown in

Example 85:

.js files (ch4/sample-app/Dockerfile)

# ... (earlier lines omitted) ...

RUN npm ci --only=production

(1)

COPY *.js .

EXPOSE 8080

# ... (later lines omitted) ...| 1 | Copy all .js files from the current folder into the Docker image. |

Now, finally, you can add a test for the app in a new file called app.test.js, as shown in Example 86:

const request = require('supertest');

const app = require('./app'); (1)

describe('Test the app', () => {

test('Get / should return Hello, World!', async () => {

const response = await request(app).get('/'); (2)

expect(response.statusCode).toBe(200); (3)

expect(response.text).toBe('Hello, World!'); (4)

});

});Here’s how this test works:

| 1 | Import the app code from app.js. |

| 2 | Use the SuperTest library (imported under the name request) to fire up the app and make an HTTP GET request to

it at the "/" URL. |

| 3 | Check that the response status code from the app is a 200 OK. |

| 4 | Check that the response body from the app is the text "Hello, World!" |

Re-run the tests one more time:

$ npm test

PASS ./app.test.js

Test the app

✓ Get / should return Hello, World! (14 ms)

PASS ./reverse.test.js

test reverseWords

✓ hello world => world hello

✓ trailing whitespace => whitespace trailing

test reverseCharacters

✓ abcd => dcba (1 ms)

------------|---------|----------|---------|---------|-------------------